tecznotes

Michal Migurski's notebook, listening post, and soapbox. Subscribe to this blog.

Check out the rest of my site as well.

Dec 30, 2008 9:54am

oakland crime maps XI: how close, and how bad?

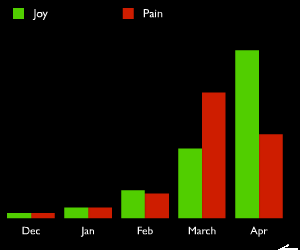

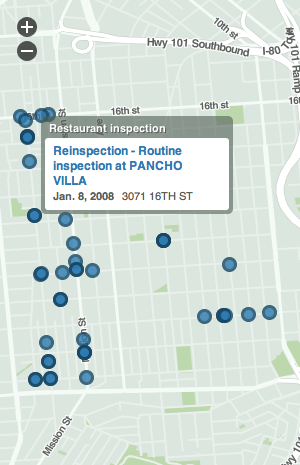

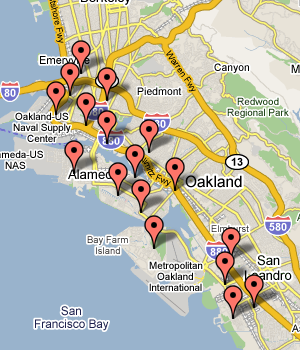

Did you know that Oakland Crimespotting is still kicking hard, with hundreds of alert subscribers and a smooth, regular flow of timely data from the Oakland Police Department? The project has essentially been on auto-pilot since we re-launched it back in March, but holiday side projects have been a favorite activity of mine for years, so this time I'm thinking about the relatively short time horizon Crimespotting offers.

The current interface offers up to a month's worth of highly granular information on individual reports, and you can quickly get a sense for how active a given neighborhood is by digging around a little, doing a few searches, and checking out details on local crime reports. What we don't have is a long view.

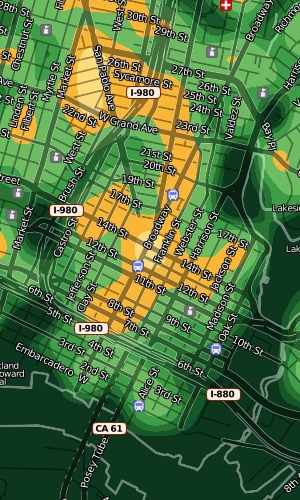

Heat maps are one effective way to present large volumes of aggregate data over a geographical area, so I've been exploring ways to make them legible for crime data.

There's a ton of existing work out there in this area to draw on, some of it good and some of it dreadful.

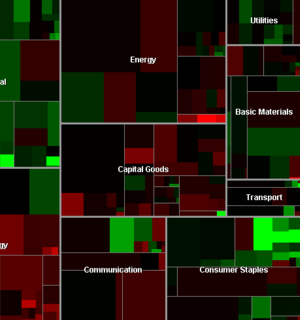

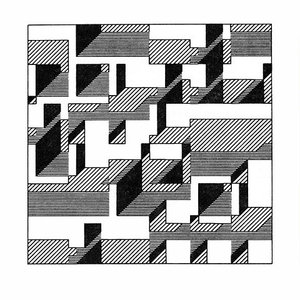

First and foremost is Martin Wattenberg's seminal Map Of The Market, a live and non-geographical view of stock trading activity that celebrated its ten-year anniversary this past year. MOTM shows volume and change over time in a tight, clean, effective package most recently notable for showing how Campbell's Soup and gold mining managed to weather the recent precipitous drops in the Dow.

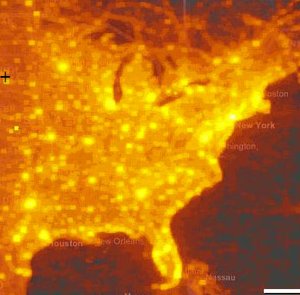

A more topical geographic example is Microsoft Research project How We Watch the City: Popularity and Online Maps. Danyel Fisher used server logs from Microsoft Virtual Earth tile servers to show viewing patterns around the world, with the beautiful results shown here.

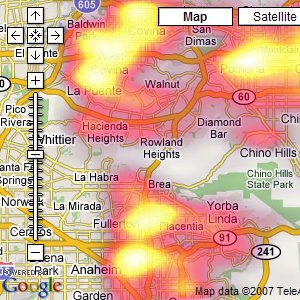

Finally, HeatMapAPI offers commercial support for making your own heat maps.

The results of HeatMapAPI's software actually illustrate a few of the things I've found weakest about geographic heat maps, a big excuse for why we've not done them for Oakland Crimespotting so far. There are two big shortfalls in the screen shot above: the data obscures the context, and simultaneously fails to communicate much in the way of specifics. The two primary questions you might want to ask of your data are "where?" and "how much?" The answers offered here are apparently "in a place near Whittier whose name I can't read" and "yellow".

So that's the starting point.

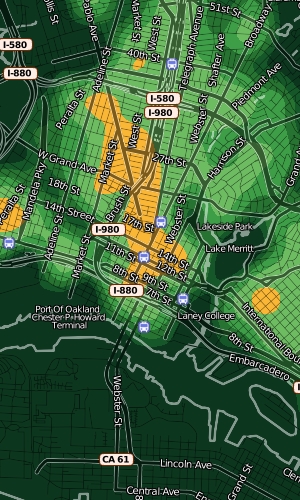

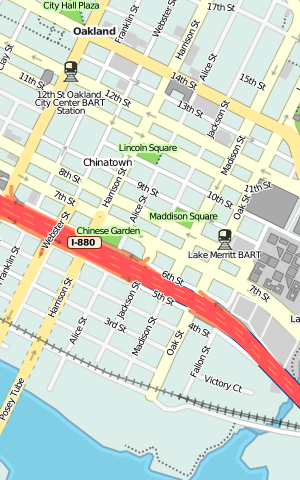

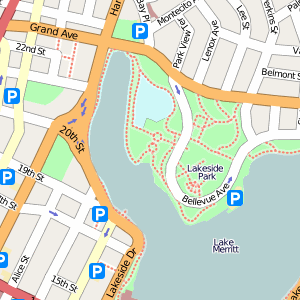

The answer I've settled on for the "where?" question is OpenStreetMap. I've been growing steadily more excited about this project for some months now, in part because it offers up the possibility of playing some beautiful visual games with high quality street data. In the HeatMapAPI example above, the context problem arises from the impossibility of manipulating Google's map data at any level more granular than their pre-rendered tiles. The overlays obscure the town and street names that help give them meaning. With OSM data and Mapnik, it's possible to create a semi-transparent streets layer specifically designed to interact well with underlaid data. It took just an afternoon's worth of modifications to my existing OSM visual design to come up with something suitable for layering with quantitative data. Gem helped tune the visual interaction between layers, so now there's a directly-overlaid set of names and icons above a translucent (25% - 50%) black street grid. Each of these layers is a separate Mapnik style, composited with the underlying color heat map.

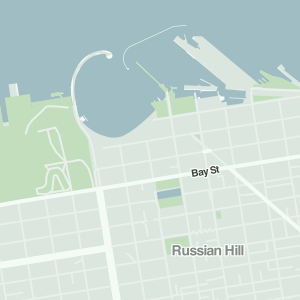

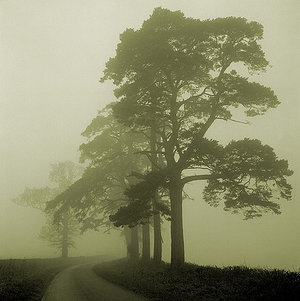

In these maps, streets have been stripped back to translucent dark stripes, with white edges showing where the shoreline of the Bay begins.

The second question, "how much?", is somewhat more interesting. The difficulty with continuous, analog data lies in communicating something of relevance and urgency in it. If the map is orange, what does that mean exactly? Will my car get broken into?

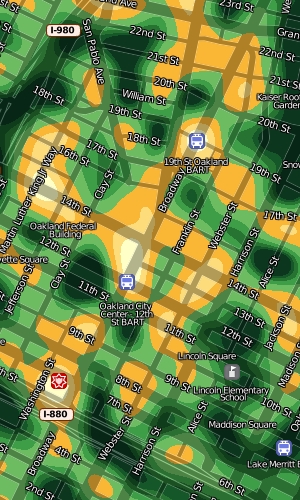

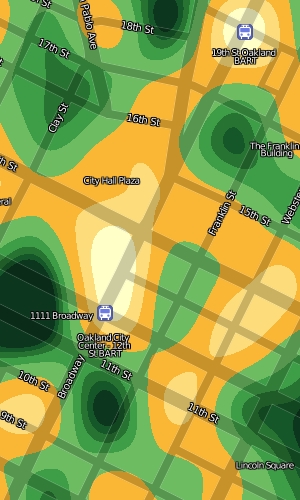

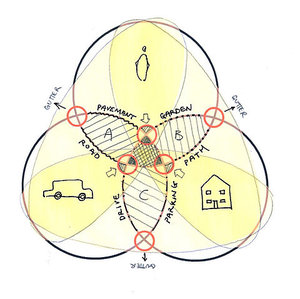

One approach I've been prodding at takes advantage of a neighborhood sense for time and space. People know how big a city block is, how it feels for a month to go by. We know something of this in our database of crime reports too, so the colors in these experimental designs are keyed to specific meanings. Orange here denotes areas where, on average, the police respond to a call once per month for every 100m x 100m city block. Inside orange, there are two more divisions shown as brighter, hotter colors: two weeks and one week. For the police to show up right on your block every week is quite heavy, and there are just a few places in town that see this kind of activity. Outside orange, there are divisions of green that represent an additional month of peace and quiet for every block at each step.
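As a rough illustration of keying colors to specific meanings, here's a minimal sketch that sorts a single 100m x 100m block into one of the bands described above. It's not the code behind these maps: the function name, the band labels, and the 30-day month are all assumptions made for the example.

```python
import math

# Hypothetical band boundaries read off the description above: the average
# number of days between police reports on one 100m x 100m block.
# A "month" is approximated as 30 days (an assumption).
HOT_BANDS = [
    (7, "hottest: about once a week"),
    (14, "hotter: about once every two weeks"),
    (30, "orange: about once a month"),
]

def band_for_block(reports, days):
    """Classify one block from its report count over a period of `days`."""
    if reports <= 0:
        return "no reports in this period"
    interval = days / reports  # average days between reports
    for limit, label in HOT_BANDS:
        if interval <= limit:
            return label
    # Outside orange: one green step for each additional month of quiet.
    step = math.ceil(interval / 30) - 1
    return "green step %d: about %d extra month(s) of quiet" % (step, step)
```

Because the bands are defined in reports per block per unit of time, the same thresholds apply at every zoom level, which is what lets orange keep one meaning throughout.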

At this level, you can start to see where OpenStreetMap data really begins to shine: all those little flag icons represent Oakland public schools that I added to the OSM database specifically to have such local data available to Crimespotting. The Microsoft Virtual Earth maps we use on the current site are beautiful, but they aren't particularly helpful in the way of local, civic data relevant to a consideration of police activity.

As the map zooms in closer, large amorphous blobs particulate into smaller, more granular bleeps and bloops. When you start seeing individual blocks in the map, you can also see individual corner hot spots. Here, the two downtown Oakland BART stations, a slice of MLK between 14th and 16th streets, and the area immediately around Oakland Police headquarters on Broadway and 7th are especially hot. The colors at every zoom level continue to mean the same things: always orange for "once a month, once per block". The colors here are cribbed from Cynthia Brewer's cpt-city work, a combination of YlGn and Oranges.

I'm happy that Lincoln Elementary School seems to sit in a safe zone of relatively low crime.

At a certain point, increased granularity becomes a problem. Our data is really only accurate to the city block level, so it doesn't make sense to generate a heat map more specific than this. The smooth, swooping whorls at the highest levels of zoom help to communicate the relative imprecision of the data at this level.

Overall, I'm happy with the results so far. These images are being generated through a combination of GDAL, Mapnik, NumPy and PIL. They're not yet ready to be integrated into the Crimespotting site proper, though I imagine that the first place they would eventually show up would be on the static map beat pages. I'm interested in comments or criticisms on how to improve the beauty or clarity of these results, before they're pushed in the direction of a proper release.

Dec 9, 2008 7:09am

making friends with hill shading

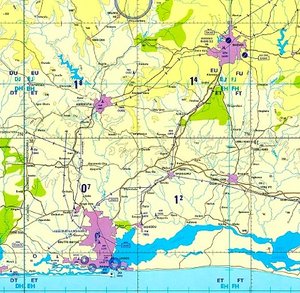

Living in a city that's quite hilly in places, street patterns make a lot more sense if you can see how they interact with the landscape. The inclusion of elevation data adds legibility to a map, and in the case of the Bay Area it's also interesting to see how overall urban development hugs the flatlands in most places. My goal here is still a beautiful map of Oakland for use with Oakland Crimespotting, with street-level details like schools, hospitals, and major buildings included.

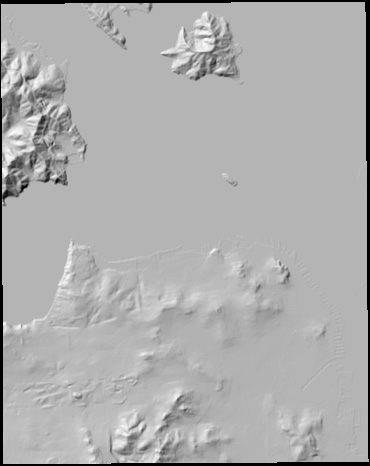

I've just pushed a major update to the Bay Area cartography I've been working on. When I last posted about it in September, I had just added the Cascadenik CSS preprocessor to Dane's mapnik-utils repository. I was inspired to investigate elevation data by Andy Allan's addition of hill coloring to his award-winning OpenCycleMap project, and spurred on by finding the USGS BARD 10-meter elevation data for the San Francisco Bay Area.

Data

Turning a bag of digital elevation model (*.dem) files into shaded hills integrated with OSM map data is a multi-step process. Each file covers a rectangular area, and contains elevation in feet or meters for each included point. This is the northern part of San Francisco with Angel Island and a small bit of Marin showing. I exaggerated the colors somewhat to make it more obvious what the data contains:

Shading

OpenCycleMap doesn't actually use elevation data to simulate shadows; instead it's used to color the ground shades of green or brown, and to provide isolines. They look like this:

Andy told me that he used PerryGeo's DEM utilities to do his coloring, so I started there. It was a bit of a hassle to get hillshade.cpp compiled (see my comment on that page from Nov. 18), but eventually I was able to convert elevation files to GeoTIFFs with shading like this:

Now I had two problems. One was that the shading algorithm trims a single pixel off the edges of its input, because it can't correctly figure out the slope on the border of an area without data. The other was that the BARD *.dem files are published in a mix of meters and feet, so some sections appeared to have an exaggerated height compared to others. Happily, the heavy lifting of dealing with geographic raster data turns out to be mostly handled by the amazing GDAL library, so it was easy to write a Python script to stitch adjoining elevation files together into larger, overlapping, normalized panels and adjust for the feet-vs.-meters problem (stitch.py, 8K). It was also easy to port the C++ hillshading program to Python, which let me fine-tune some other annoying problems around the edges (hillshade.py, 4K).
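To make the two problems concrete, here's a small pure-Python sketch of unit normalization and hill shading. It is not stitch.py or hillshade.py: it skips GDAL entirely, uses plain nested lists, and shades with simple central differences and a surface-normal dot product, which may well differ from what hillshade.cpp does. The function names and the default light direction (northwest, 45 degrees up) are assumptions.

```python
import math

FEET_TO_METERS = 0.3048

def to_meters(grid, units):
    """Normalize an elevation grid to meters; `units` is 'feet' or 'meters'."""
    factor = FEET_TO_METERS if units == "feet" else 1.0
    return [[z * factor for z in row] for row in grid]

def hillshade(grid, cell_size, azimuth=315.0, altitude=45.0):
    """Return brightness values in 0..1 for the interior cells of `grid`.

    Row 0 is the northern edge. The output is one cell smaller on every
    side, because the slope of a border cell can't be computed without
    neighbors beyond the edge.
    """
    az, alt = math.radians(azimuth), math.radians(altitude)
    # Unit vector pointing toward the light: x = east, y = north, z = up.
    light = (math.sin(az) * math.cos(alt),
             math.cos(az) * math.cos(alt),
             math.sin(alt))
    shaded = []
    for r in range(1, len(grid) - 1):
        out_row = []
        for c in range(1, len(grid[r]) - 1):
            # Central differences: elevation change per meter, east and north.
            dzdx = (grid[r][c + 1] - grid[r][c - 1]) / (2.0 * cell_size)
            dzdy = (grid[r - 1][c] - grid[r + 1][c]) / (2.0 * cell_size)
            length = math.sqrt(dzdx * dzdx + dzdy * dzdy + 1.0)
            normal = (-dzdx / length, -dzdy / length, 1.0 / length)
            dot = sum(n * l for n, l in zip(normal, light))
            out_row.append(max(0.0, dot))
        shaded.append(out_row)
    return shaded
```

The lost border is the reason for stitching files into overlapping panels: each panel can give up its outermost pixels without leaving a seam.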

Tiling

The library I use to generate map tiles, Mapnik, has a way to get raster images into a map, but it doesn't yet support niceties like warping or smooth interpolation. I still have a giant bag of multi-purpose tiling code sitting around from all my flea market mapping experimentation, so this turned out to be an easy step. I warped and tiled all the overlapping bits of shaded hill into a smooth, grayscale tile set that covers the entire SF Bay Area up to zoom level 15.

I've posted all of these hill shaded tiles to their own S3 bucket, so they can be used in slippy maps by anyone. The URL format for these is http://hills-bayarea.s3.amazonaws.com/{zoom}-r{row}-c{column}.png, e.g. Mt. Tamalpais and Mt. Diablo seen here:

I've also included a permissive crossdomain policy file, so these can be used in Flash unencumbered.
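For anyone wiring these into their own code, the URL format can be sketched as below. The template comes straight from the format given above; the row and column math is the common spherical-mercator slippy map scheme with row 0 at the northern edge, and it's an assumption here that the hill tiles are numbered that way. The function names are made up for the example.

```python
import math

URL_TEMPLATE = "http://hills-bayarea.s3.amazonaws.com/{zoom}-r{row}-c{column}.png"

def tile_for(lat, lon, zoom):
    """Return (row, column) of the tile containing a latitude/longitude.

    Assumes the usual slippy map numbering: 2**zoom tiles per side,
    column 0 at 180 degrees west, row 0 at the northern edge.
    """
    n = 2 ** zoom
    column = int((lon + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat)
    row = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so points on the far east or south edge stay inside the grid.
    return min(n - 1, max(0, row)), min(n - 1, max(0, column))

def hill_tile_url(lat, lon, zoom):
    """Build the URL of the hill-shaded tile covering a point."""
    row, column = tile_for(lat, lon, zoom)
    return URL_TEMPLATE.format(zoom=zoom, row=row, column=column)
```

Something like `hill_tile_url(37.8, -122.27, 12)` would then name a tile over Oakland, provided the numbering assumption holds.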

Compositing

The other thing lacking in Mapnik's RasterSymbolizer is a way to choose how a raster image visually combines with other cartography, so this ended up being a somewhat custom operation as well. I started with the OpenStreetMap style.mml style file I included as part of Cascadenik example data. I moved some roads up and down in the layering order, and made it split cleanly into two separate styles: ground.mml for ground cover, parks, and roads at very low zoom levels, and figure.mml for labels, buildings, bridges, symbols, and so on. The idea is that figure.mml and ground.mml together should look identical to style.mml, but that the split provides a convenient place to slip in a grayscale set of hills to lighten or darken the ground as necessary.

I implemented a version of Photoshop's Hard Light transfer mode because it seemed to look best in this situation. I also added a feature request to Mapnik in the hopes that this sort of thing will be a built-in feature of the library sometime.
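For reference, the commonly cited Hard Light formula looks like the sketch below, operating on single channel values scaled from 0 to 1. This is not the compositing code used for the map, and treating the grayscale hills as the blend layer over the ground colors is an assumption based on the description above.

```python
def hard_light(ground, hill):
    """Blend one ground color channel (0..1) with a grayscale hill value (0..1).

    Hill values below 0.5 darken the ground (multiply), values above 0.5
    lighten it (screen), and exactly 0.5 leaves it unchanged.
    """
    if hill < 0.5:
        return 2.0 * ground * hill
    return 1.0 - 2.0 * (1.0 - ground) * (1.0 - hill)

def composite_pixel(ground_rgb, hill):
    """Apply the same grayscale hill value to each channel of an RGB pixel."""
    return tuple(hard_light(channel, hill) for channel in ground_rgb)
```

The useful property here is that mid-gray is neutral: flat terrain shaded to 50% gray passes the ground colors through untouched, so only slopes change the look of the map.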

Ta-Da

Check out the current version of the map for the results. OpenStreetMap and OpenCycleMap's own tiles are included on that page for comparison. If you see a mistake, you can correct it yourself or just mark it as a bug.

Nov 27, 2008 11:12pm

blog all dog-eared pages: implementation

The full title of this 1973 U.C. Berkeley public planning book (recommended by A Better Oakland) is formidable: Implementation: How Great Expectations In Washington Are Dashed In Oakland; Or, Why It's Amazing That Federal Programs Work At All, Economic Development Administration As Told By Two Sympathetic Observers Who Seek To Build Morals On a Foundation Of Ruined Hopes. It seems significant that all the illustrations are excerpts of Rube Goldberg machines.

I bought this book because of the Oakland connection, but there's a lot in here that's relevant to any form of project planning and completion, especially for software developers and designers (like me) trying to figure out why it's so easy to start, and so hard to finish, a project. Other writers in the development world have touched on this before, and there's an entire discipline called Agile that seeks to cut through impediments to completion like a Gordian Knot.

There's an undercurrent of misery and pathos to the book - nothing ruins dinner like 200+ pages on fucked-out-of-the-gate early 1970's social welfare programs in a city you love. The historical framing is an EDA jobs program for the hardcore unemployed that sought to deliver funding to projects and businesses which would in turn employ economically disadvantaged Oakland residents. The late-1960's urgency behind the project stemmed from a desire to nip in the bud further urban race riots like those that had taken place in Watts and elsewhere. Oakland, home of the Black Panthers, was viewed as a potential trouble spot. Rapid flows of federal money aimed at helping the unemployed were identified as the solution. As you might expect from the title, things didn't turn out as planned: money and time were generally wasted, and few people received the promised help. The program fit the general pattern of the past 50 years: splashy introduction, front page news, energy and excitement at the outset, slow leakage of enthusiasm, and an eventual page 10 notice of cancellation several years later.

This is going to get wildly relevant in the coming years, especially in light of Obama's recently-alluded-to New New Deal: "We'll be working out the details in the weeks ahead, but it will be a two-year, nationwide effort to jumpstart job creation in America and lay the foundation for a strong and growing economy." Part of me is saying "uh oh", but a bigger, louder part of me is saying "hellz yeah, where do I sign up to help?"

The core question that authors Aaron Wildavsky and Jeffrey Pressman hope to answer is: why is the road to hell paved with good intentions? Is there a difference between policy and implementation that can be somehow bridged, or at least described more precisely? "Implementation" refers to that often-overlooked part of the project that happens after the ideas, funding, and excitement, but before any tangible results.

Page 87, on the complexity of joint action:

When we say that programs have failed, this suggests we are surprised. If we thought from the beginning that they were unlikely to be successful, their failure to achieve stated goals or to work at all would not cry out for any special explanation. If we believed that intense conflicts of interests were involved, if people who had to cooperate were expected to be at loggerheads, if necessary resources were far beyond those available, we might wonder rather more why the programs were attempted instead of expressing amazement at their shortcomings. The problem would dissolve, so to speak, in the statement of it.

I love this idea, and it slots in neatly with the commercial world's wisdom that successful companies create room for mistakes, if those mistakes can be used to gain experience and learn. From a project point of view, no one wants to be on the job that fails. From a societal point of view, failed experiments that are adequately described point the way toward eventual success.

Page 98, on mismatched means and ends:

When programs are not being implemented, it is tempting to conclude that the participants disagreed about the special ends they sought rather than the ordinary means for attaining them. Thinking about means and ends in isolation, however, imposes an artificial distinction, especially when more than one program is involved. One participant's ends, such as a training facility, may be another actor's means. Once innumerable programs are in operation, the stream of transactions among people who are simultaneously involved in them may evidence neither clear beginning nor end but only an ebb and flow. As the managers of each program try to impose their preferred sequence of events on the others, their priorities for the next step, which differs for each one and cannot be equally important to all, may conflict. The means loom larger all the time because they are what the action is about. Actually, it is easier to disagree about means because they are there to provoke quarrels, while ends are always around the corner.

The difference between success and failure seems to be the difference between turbulent and laminar flow. Each participant has the same end in mind, but one is relaxed while another is in a hurry, one wants to start here and another there. Even with all actors ostensibly moving in the same direction, turbulence and chaotic flow result from these seemingly-small differences in chosen velocity.

Page 113, on delay:

What had looked like a relatively simple, urgent, and direct program - involving one federal agency, one city, and a substantial and immediate funding commitment - eventually involved numerous diverse participants and a much longer series of decisions than was planned. None of the participants actually disagreed with the goal of providing jobs for minority unemployed, but their differing perspectives and senses of urgency made it difficult to translate broad substantive agreement into effective policy implementation. It was not merely the direction of their decisions - favorable or unfavorable - but the time orientation of the participants - fast or slow, urgent or indolent - that determined the prospects of completion. When so many future decisions depend on past actions, delay in time may be equivalent to defeat in substance.

Much of the process methodology behind Agile seems to recognize that priority-setting is the critical point for most friction: people must agree on what the next most important task is, and this is where most negotiation is designed to take place.

Page 133, on the need for bureaucracy:

If one wishes to assure a reasonable prospect of program implementation, he had better begin with a high probability that each and every actor will cooperate. The purpose of bureaucracy is precisely to secure this degree of predictability. Many of its most criticized features, such as the requirement for multiple and advance clearances and standard operating procedures, serve to increase the ability of each participant to predict what the others will do and to smooth over differences. The costs of bureaucracy - a preference for procedure over purpose or seeking the lowest common denominator - may emerge in a different light when they are viewed as part of the price paid for predictability of agreement over time among diverse participants. The price may be too high, but the cost of accomplishing little or nothing otherwise must be placed against it.

Big, dumb bureaucracy has a lubricating effect here. Things take a long time because processes are designed to insulate actors from each others' instabilities. The computation metaphor that seems appropriate here is boundedness: CPU or I/O? What exactly are you waiting for at any given time, and how can project management help participants understand that some given task or responsibility is simply going to take a while, and maybe you should find something else to do?

Page 134, on coordination:

When one bureaucrat tells another to coordinate a policy, he means that it should be cleared with other official participants who have some stake in the matter. This is a way of sharing the blame in case things go wrong (each initial on the documents being another hostage against retribution) and of increasing the predictability of securing each agreement needed for further action. Since other actors cannot be coerced, their consent must be obtained. Bargaining must take place to reconcile the differences, with the result that the policy may be modified, even to the point of compromising its original purpose. Coordination in this sense is another word for consent.

Telling another person to coordinate, therefore, does not tell him what to do. He does not know whether to coerce or bargain, to exert power or secure consent. Here we have one aspect of an apparently desirable trait of antibureaucratic administration that covers up the very problems - conflict versus cooperation, coercion versus consent - its invocation is supposed to resolve.

Everyone wants coordination on his own terms.

This is the part where I criticize unilateral approaches like 37 Signals' Getting Real. The core tenets of Getting Real seem to essentially boil down to a pathological aversion to commitment: commitment to people ("small teams"), to goals ("flexible scope"), and to details ("ignore details", "it doesn't matter"). Generally speaking, people who believe this will have already put themselves in a position to live it: it's no accident that Stamen is seven people. The act of externalizing Getting Real makes it a process, one that's spectacularly bad at addressing coordination. Fine for small projects where everyone starts on roughly the same page, but disastrous for any situation where other actors need to give consent: managers, clients, investors, customers. The universe of Getting Real is a cramped, airless one populated by to-do list managers and communication software for tiny teams.

Where someone needs to be convinced, coerced, or seduced into cooperating with you, process gives way to sub-rational animal instinct.

Pages 165-166, on implementation-as-control:

In this view, for instance, implementers must know what they are supposed to do in order to be effective. Yet, "street level" bureaucrats are notorious for being too busy coping with their day-to-day problems to recite to themselves the policies they are supposed to apply. ... Writing about the administrative process in the regulatory commissions of the New Deal era, James Landis recalls how "one of the ablest administrators that it was my good fortune to know, I believe, never read, at least more than casually, the statutes that he translated into reality. He assumed that they gave him power to deal with the broad problems of an industry and, upon that understanding, he sought his own solutions."

The planning model recognizes that implementation may fail because the original plan was infeasible. But it does not recognize the important point that many, perhaps most, constraints remain hidden in the planning stage, and are only discovered in the implementation process.

This is what I think Agile seeks to address: the idea that requirements change because they flex and respond to previous requirements already met.

Pages 167-168, on implementation as interaction:

This view is strangely reminiscent of old syndicalist doctrines summarized in once-popular slogans like "The Railroads to the Railroadmen" and "The Mines to the Miners." The syndicalists' demand for "industrial democracy" actually concealed a view of production as an end in itself rather than a means of satisfying consumers' wants. We feel the emphasis on consensus, bargaining, and political maneuvering can easily lead (and has, in fact, led) to the conception that implementation is its own reward.

The interaction model of implementation carries interesting evolutionary overtones. The results are not predictable, an element of surprise is maintained, and the outcomes are likely to be different from those sought by any single participant.

This is where I think Agile falls apart: the manifesto promises to do away with process, but introduces process of its own. In particular, the process it introduces is fundamentally introspective, a kind of "Programming for the Programmers" frame of mind that seems to focus on the needs of the development team over the needs of the broader project. The outcomes are likely to have been bent or twisted somewhat along the way.

Page 215, on implementation as adaptation:

In a world of flux, it is only through continuous negotiation between administrators, implementers, and decision makers that any "congruence between program design and program implementation" (mentioned as essential in the literature) can ever be achieved.

"Adaptation" is Pressman and Wildavsky's final watchword for a useful view of implementation. It encodes ideas of flexibility and negotiation, while still leaving room for a deeper goal. This is not willy-nilly natural selection, but a process of constant self-evaluation. There's a lot more on this topic in a future post on Arthur Bentley's The Process Of Government.

Page 228, on learning from error:

In reaction to what is widely perceived as a dismal record, students of implementation, like the evaluators before them, have sought to guard themselves against failure. Instead of learning from error as it is occurring, they hope to prevent future failure before it takes place. Since there can be little learning without mistakes to learn from, however, the field of implementation is caught in a double bind: too much error suggests incompetence and too little inhibits learning.

Nov 19, 2008 8:09am

smule's ocarina

Earlier tonight I briefly met Spencer Salazar from Smule, the makers of the iPhone Ocarina. They have a small suite of apps like Sonic Boom ("turns your phone into a virtual firecracker"), Sonic Vox ("the real-time voice shifter"), and Sonic Lighter ("Sonic Lighter is a lighter") that are mostly technology gimmicks. Spencer admitted as much but I'm still completely smitten with the fact that 75% of their applications have a simple globe view that uses the network features of the phone to show you what other people, all around the world, are doing with each app right now. You can hear other people's clumsy ocarina playing, watch little explosions when other people use Sonic Boom, and see who's using the lighter app with some sense of how those people are related to you based on flame-passing connections.

We've seen this all before, in Twittervision and other such globetrotting applications. These Smule globes seem strangely different and much more interesting, largely I think because you hold the phone in your hand instead of the laptop or monitor on your desk. It's a more personal, touched engagement with the screen that makes visualizing an earth-spanning army of phone lighters and flute blowers more physically personal. In particular, the Sonic Boom visualization is like watching television: no reading, no place names, just tiny explosions with audio all over the world with the same unmediated appearance as old top-down resource gathering games like War Craft I.

Having just read Teeming Void's Against Information (a critique of "data art"), I'm thinking about direct perception of data as a way of making it more visceral. The Golan Levin and Jonathan Harris pieces referenced in the paper all suffer from various forms of indirection: Levin makes breaking up look like math and physics, while Harris jumps to all sorts of crazy conclusions based on faulty language parsing and excessively abstract visual metaphor. How can a visual representation of data make itself felt right there, in your hand? Pictures help. Sound helps.

Nov 18, 2008 9:29am

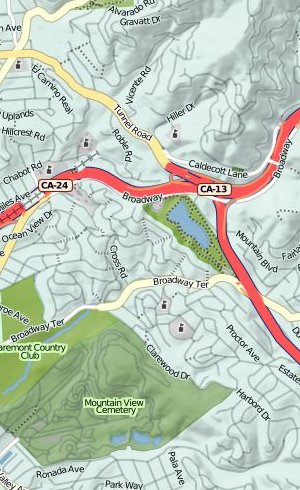

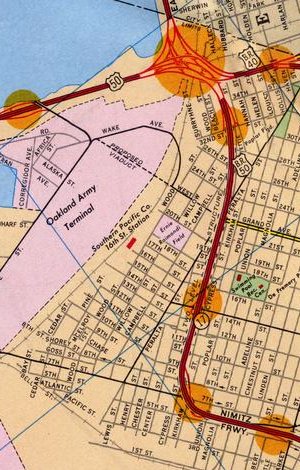

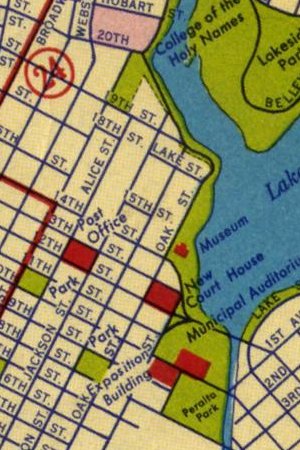

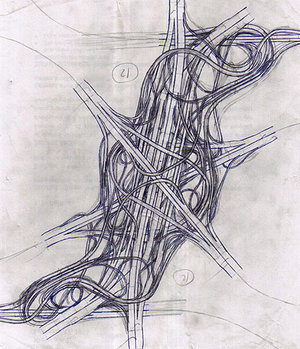

flea market mapping III: here come the freeways

I've been expanding the georeferenced collection of Oakland maps that Gem and I started back in May. Recently, I purchased a 1967 Standard Oil map of Oakland for a few bucks from eBay. I was looking for late 1960's / early 1970's, because that's when the freeway structure here really started to take shape. Previously, we looked at a switch from rail to roads. Through the 50's and 60's, the switch was accelerated with the construction of massive highways through what had formerly been residential neighborhoods.

Particularly interesting is the Cypress Viaduct, a raised connection between highways 880, 580 and 80 running through West Oakland. When built, it was sharply criticized for splitting the neighborhood and further isolating it from downtown Oakland. The current site of the viaduct was where I made some of my first edits to OpenStreetMap. The structure was destroyed in the 1989 Loma Prieta earthquake shortly after my family moved to California, but on this map it's a fresh addition to the landscape:

The 19th anniversary of the quake was October 17th, one month ago.

The new 1967 map is a striking contrast to the previous 1952 map. The various freeways connected to Interstate 80 are one major difference, but the cartography is also a big contrast. This map is similar to the other Gousha-designed map from 1936 in its choice of bright colors, but it also features topographic shading up in the hills and orange highlights around freeway exits. A significant piece of infrastructure still under construction at this point is the 980 / 24 connector from downtown Oakland up into the hills toward the Caldecott Tunnel. The construction areas for the southern stretch are marked, while the northern route is still a wispy dotted line through miles of backyards.

Nov 10, 2008 9:42pm

work with me

Are you a talented developer interested in supporting our data visualization and mapping practice from the server end of things? Are you interested in a full-time gig at our San Francisco office?

You'll be working with a small team of designers and engineers who will be looking to you to make their ideas feasible. You're excited by the possibility of cutting and bending data to fit it through the thin straw of the internet. You can look at a source of information and model it as resources, rows and columns, messages and queues.

...

Our technology choices lean towards open source databases, unix-flavored operating systems, and scripting languages like Python and PHP. You'll be expected to know these things, and bring something new and unexpected besides.

Read the rest of the job description and let us know! (watch the spamarrest response on that e-mail address)

Nov 10, 2008 8:03am

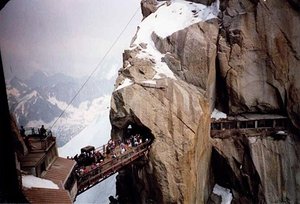

web directions east

Earlier this morning, I returned from a four-day spin through Tokyo, my second visit there, to speak at Web Directions East. This trip was wholly unexpected, as I was pinch-hitting for Jeff Veen who had to cancel at the last minute and suggested me as a replacement. Fortunately it was possible to book a last-minute flight, take over a hotel reservation, and write an hour-long keynote talk in just a few days. It was a surprise honor to even be asked, and the experience was smooth sailing all the way, including the miniature hotel room.

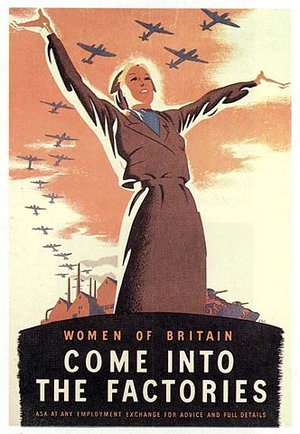

I'm not going to post exact talk notes, but I outlined a general overview of data visualization and then focused on a range of Stamen's projects from the past four years and how they illustrate some deeper trends. Elements of this should be familiar to anyone who's heard me, Eric, Shawn or Tom speak before:

- Mark Newman's 2004 election cartograms. I learned from Karl that they'd been freshly updated to reflect last week's results.

- Examples of flow-management including 538 and John Allspaw's Flickr capacity-planning ideas.

- Our 2004 MoveOn Virtual Town Hall application, for the first time in what seemed like years. It would be amazing to revisit this project again somehow, possibly in a non-political context.

- Digg Labs, in the context of "liveness", from our Live/Vast/Deep iron triangle of visualization.

- Trulia Hindsight and Snapshot, as examples of "vastness".

- The hot, fresh, new SFMOMA ArtScope as an example of "deepness". This also served as a nice digression about map tiles, something I've been thinking and talking about a bunch.

- Oakland Crimespotting to round out some ideas about stable linking and data on the web. I also heavily name-checked Tom Coates's Native To A Web Of Data and Matt Biddulph's Designing Data For Reuse here.

A few people asked after the source of a particular background photo from my slides - it was an image of the Shanghai Urban Planning Museum's city model, possibly gaffled from blog.360dgrs.nl.

In addition to meeting the other speakers and organizers, John, Oli, Andy, Jeremy, Eric, Dan, and Doug, I also had a chance to take an excellent nighttime Tokyo bicycle and beer ride with Craig and Verena that made me wish SF and Oakland maintained the pavements a bit more effectively. I now desperately want to add a third bike to my collection, a mamachari granny bike.

Nov 2, 2008 12:59am

blog some dog-eared pages: cognition in the wild

It's been a while since I've done one of these, so I'm a bit rusty. I started Cognition In The Wild over a full year ago, put it down for a while, and only recently came back to finish the book. The topic is cognitive activity, and how it plays out in social situations. Sort of a behaviorist tract in a way, which is interesting because the idea that everyone loved to hate (everyone in the UC Berkeley CogSci department ten years ago, that is) is starting to pop back up in some odd places in my life: this book, another one about politics from 1908, Obama's economic policies, etc.

Edwin Hutchins frames his story in the context of an observational trip aboard a U.S. Navy vessel, the Palau, and its crew of sailors and navigators. Hutchins particularly concerns himself with the way in which practice and instrumentation constitute a meta-cognitive process above the level of the individual: the observations and computations that enable the crew of the ship to steer it are carried out by a collection of participants, some of them quite inexperienced, all of them performing small pieces of a bigger task. Together, they form a complete computational process, a sort of full adder made of half adders. He's particularly interested in the instrumentation that lets these guys do their jobs: slide rules, sighting scopes, variations on the protractor, and conventions surrounding verbal communication on the bridge and over the ship's intercom.

Hutchins's theory seems to be that these devices and practices act as a form of cognitive jig, embodying complex trigonometric and geometric processes in tangible form the way a slide rule converts multiplication into a simple linear movement. I've been interested in this idea before, via David Pye's Nature and Art of Workmanship. Pye argued that a lot of what we consider to be "hand work" is actually jigged and regulated through external forces. Pye calls unregulated workmanship "the workmanship of risk", and it's an interesting contrast to the kind of cognitive risk minimization that Hutchins is describing here. There's joy in navigation by dead reckoning as in risky, dextrous workmanship, but the U.S. Navy is having none of it and prefers its interpersonal procedures immaculately specified to the finest detail.

The place where I think this touches some of my recent interests in tiling and flows is that the purpose of a jig is to turn the latter into the former, to transform fluid into constrained motion. In particular I'm thinking about my most recent favorite general-purpose example, the use of "remaining days" as a transposed operations metric by Flickr's capacity planning guru John Allspaw.

Flickr takes one kind of motion, the consumption of storage space or saturation of network bandwidth, and transposes it into another kind of motion, the number of days they're free to sit on their hands until everything falls to pieces.
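As a rough sketch of that transposition: divide the headroom you have left by the rate at which you're eating it. The straight-line growth model, the function name, and the numbers below are all assumptions made for illustration, not anything taken from Flickr's actual tooling.

```python
def remaining_days(capacity, used, daily_growth):
    """Days until `used` reaches `capacity`, assuming constant daily growth."""
    if daily_growth <= 0:
        # Nothing is being consumed, so this simple model sets no deadline.
        return float("inf")
    # Clamp at zero so an already-full resource reports 0 days, not a negative.
    return max(capacity - used, 0) / daily_growth


# Hypothetical storage figures, in terabytes.
print(remaining_days(capacity=100.0, used=82.0, daily_growth=0.5))  # 36.0
```

The same function works for any resource with a ceiling and a consumption rate, which is the appeal of the metric: storage and bandwidth end up in the same unit.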

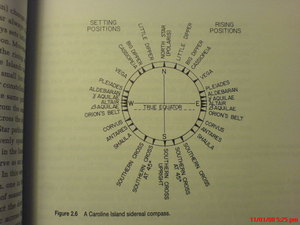

There's an extended example in Hutchins' book that's similar in spirit to this, and it forms the only coherent set of pages I bothered to dog-ear. As a counterpoint to Western-style navigation that places a moving boat in the context of a static ocean, he offers an in-depth analysis of Micronesian navigation practices that proceed along utterly different lines and yet still allow canoe navigators to travel between tiny islands out of sight of land without losing their bearings. The background to this alternate navigation frame is rote memorization of angular relationships among islands, but the surprising bit is the way it recontextualizes the navigator as a static center with respect to the sidereal compass, surrounding islands moving past him on parallel tracks to the left and the right.

These excerpts constitute my first donation to the Analogy Library. That Flickr capacity thing above is my second. Also worth a read is UPenn's Traditional Navigation in the Western Pacific: A Search for Pattern, written by 1989-2003 This Old House host Steve Thomas.

Page 66, a bit of context:

Without recourse to mechanical, electrical, or even magnetic devices, the navigators of the Central Caroline Islands of Micronesia routinely embark on ocean voyages that take them several days out of the sight of land. Their technique seems at first glance to be inadequate for the job demanded of it, yet it consistently passes what Lewis has called "the stern test of landfall." ... Western researchers traveling with these people have found that at any time during the voyage the navigators can accurately indicate the bearings of the port of departure, the destination, and other islands off to the side of the course being steered, even though all of these may be over the horizon and out of sight. These navigators are also able to tack upwind to an unseen island while keeping mental track of its changing bearing - a feat that is simply impossible for a Western navigator without instruments.

Page 67, on clues from what lies beneath:

The world of the navigator, however, contains more than a set of tiny islands on an undifferentiated expanse of ocean. Deep below, the presence of submerged reefs changes the apparent color of the water. The surface of the sea undulates with swells born in distant weather systems, and the interaction of the swells with islands produces distinctive swell patterns in the vicinity of land. Above the sea surface are the winds and weather patterns which govern the fate of sailors. Seabirds abound, especially in the vicinity of land. Finally, at night, there are the stars. Here in the Central Pacific, away from pollution and artificial light, the stars shine brightly and in incredible numbers. All these elements in the navigator's world are sources of information.

Page 68, on the sidereal compass:

Seeing the night sky in terms of linear constellations is a simple representational artifice that converts the moving field of stars into a fixed frame of reference.

This seeing is not a passive perceptual process. Rather, it is the projection of external structure (the arrangement of stars in the heavens) and internal structure (the ability to identify the linear constellations) onto a single spatial image. In this superimposition of internal and external, elements of the external structure are given culturally meaningful relationships to one another. The process is actively constructive.

Page 71, on picturing a frame of reference:

The fundamental conception in Caroline Island navigation is that a canoe on the course between islands is stationary and the islands move by the canoe. This is, of course, unlike our notion of the vessel moving between stationary islands. A passage from Gladwin (1970: 182) amplifies this:

Picture yourself on a Puluwat canoe at night. The weather is clear, the stars are out, but no land is in sight. The canoe is a familiar little world. Men sit about, talk, perhaps move around a little within their microcosm. On either side of the canoe, water streams past, a line of turbulence and bubbles merging into a wake and disappearing into the darkness. Overhead there are stars, immovable, immutable. They swing in their paths across and out of the sky but invariably come up again in the same places. ... Everything passes by the little canoe - everything except the stars by night and the sun in the day.

Page 81, intersecting lines:

It is tempting to criticize the Caroline Island navigators for maintaining an egocentric perspective on the voyage when the global perspective of the chart seems so much more powerful. Before concluding that the Western view is superior, consider the following thought experiment: Go at dawn to a high place and point directly at the center of the rising sun. That defines a line in space. Return to the same high place at noon and point again to the center of the sun. That defines another line in space. I assert that the sun is located in space where those two lines cross. Does that seem wrong? Do you feel that the two lines cross where you stand and nowhere else?

...

Our everyday models of the sun's movement are exactly analogous to the Caroline Island navigator's conception of the location of the reference island. The choice of representations limits the sorts of inferences that make sense.

Page 92, on relative difficulty in frames of reference:

All navigation computations make use of frames of reference. The most prominent aspect of the Micronesian conception is the apparent motion of the etak island against the fixed backdrop of the star points defined by the sidereal compass. Here there are three elements to be related to one another: the vessel, the islands, and the directional frame. In order to preserve the observed relationship of motion parallax, one can have the vessel and the directional frame move while the islands stay stationary (the Western solution) or one can have the vessel and the directional frame stationary while the islands move (the Micronesian solution). ... Each of these schemes makes some things easy to compute and others difficult.

Oct 10, 2008 9:49pm

dunbar's dungeon

Thought experiment for the day, spurred by jets flying overhead for fleet week. If something out of the ordinary is going on, and you need to ask people around you, what do you do?

Imagine a chat system, similar to IRC or AOL - a big room where you log in and talk to lots of other people simultaneously. When you log in, your Fire Eagle account gets a ping, so that the server can know your geographic location to some level of precision. You are then dumped into a big room, with the 150 people physically closest to you in the real world. As long as there are fewer than 150 total people in the system, everyone gets to talk to one another. As more people join, you begin to see overlapping conversation bubbles. You might be in San Francisco, talking to someone in Kansas. That person in Kansas can talk to someone else in New York, but you can't. Your conversational circle is strictly limited to the nearest 150 people, some of whom drop off occasionally as they are bumped out by more proximate newcomers.

As the population of the system grows, everyone's personal horizons begin to shrink. With enough people, eventually you're talking to the people right in your neighborhood. To get a message to someone across the country, you might lie about your location, or ask that it be passed on, Milgram-style.
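The room-assignment rule is simple enough to sketch. This assumes each user's location arrives as a (latitude, longitude) pair and measures distance along a great circle; the function names and sample coordinates are made up for illustration, and a real system would want a spatial index rather than a scan over every user.

```python
import heapq
import math

EARTH_RADIUS_KM = 6371.0
ROOM_SIZE = 150  # the cap described above


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) pairs in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def room_for(user, locations, size=ROOM_SIZE):
    """Names of the `size` users nearest to `user`, closest first."""
    here = locations[user]
    others = ((name, loc) for name, loc in locations.items() if name != user)
    nearest = heapq.nsmallest(
        size, others, key=lambda item: haversine_km(here, item[1]))
    return [name for name, _ in nearest]


# Hypothetical users, with a tiny room size to make the overlap visible.
locations = {
    "sf": (37.77, -122.42),
    "oakland": (37.80, -122.27),
    "kansas": (39.10, -94.58),
    "nyc": (40.71, -74.01),
}
print(room_for("sf", locations, size=2))             # ['oakland', 'kansas']
print("nyc" in room_for("kansas", locations, size=2))  # True
```

With a room size of two, San Francisco can reach Kansas and Kansas can reach New York, but San Francisco cannot reach New York directly: the overlapping bubbles fall out of the nearest-neighbor rule without any extra logic.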

Would this feel like a natural way to interact with people around you, self-limited to a reasonable number of participants but always those around you? Would people lie about their location? Would it be like Usenet, dense with microscopic subcultures? Would certain people emerge as hubs, offering to post messages around between bubbles in the absence of other means of communication?

Oct 9, 2008 5:48am

design engaged 2008

The third Design Engaged happened in Montreal, just a few days ago.

Number two, held in Berlin three years ago in November, was something like a debut party for Stamen. Many of the people whose circles we're in now, we met there and then for the first time. DE is a high water mark for conference-style events, borrowing much from the DIY ethos. In Berlin, attending meant speaking, and most of the event was occupied by rapid-fire descriptions of what each participant was up to. Adam beat the drum for peak oil and copper theft, while Jack delivered a fairly stream-of-consciousness rundown of interesting comic books and metals with low eutectic melting points. This time, my personal favorite was Russell's talk on advertising and design, the upshot of which was that "it's worth not being shit."

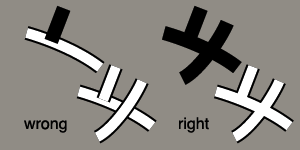

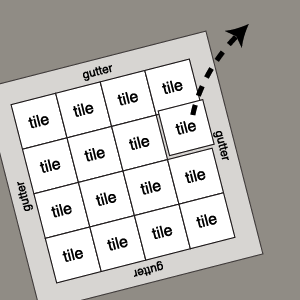

I adapted some of my favorite bits from the UX Week talk and described a tiling metaphor for the web, why it was interesting, and how we're about to hit something of an inflection point. The title of the talk is "Tiles Then, Flows Now", changed from "Tiles Now, Flows Soon" when I decided that too many people were saying too many things about the future for me to join in as well.

Three years ago, we first showed Cabspotting at Design Engaged 2005. It was one of our first map projects to combine a tiled base layer and constantly-updating data.

The tiled-map idea was not exactly new at the time, but it had just been immensely popularized by Google Maps, released earlier in 2005. The scale and coverage of GMaps was a wholly new thing.

I'd already spent plenty of time futzing with Terraserver and other satellite image sources looking down on familiar places, but those services always forced viewers to understand image resolution and source satellites. It was hard to pan around; the UI metaphor was ultimately form-based ("click here to go east").

GMaps showed how it was possible to fake the appearance of continuous flow by assembling mipmapped images on the client, and serving up simple 256x256 tile images. When it first came out, I spent an hour panning slowly around the Bay Area from San Francisco to San Jose.
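For a sense of how little machinery sits behind those tiles, here is a sketch of the arithmetic that picks the tile containing a given point. It assumes the spherical Mercator projection and the row and column numbering now common across web maps (origin at the top-left, 2^zoom tiles per side); the function name is made up, and nothing here is taken from Google's own code.

```python
import math

TILE_SIZE = 256  # pixels per tile edge, as mentioned above


def tile_for(lat, lon, zoom):
    """Column and row of the tile containing a point at a given zoom level."""
    n = 2 ** zoom  # number of tiles along each edge of the world at this zoom
    x = (lon + 180.0) / 360.0 * n
    # Spherical Mercator stretches latitude; asinh(tan(lat)) is the projected y.
    y = (1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n
    return int(x), int(y)


print(tile_for(0.0, 0.0, 1))  # (1, 1)
```

Each zoom level doubles the number of tiles per side, so the client only needs this one calculation plus a few neighboring tiles to fake a continuous surface.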

This all coincided with the appearance of REST as a guiding metaphor for the web. REST gives you a lot of mileage; we faked the continuous flow of user activity on Digg by punctuating snapshots over time. Like film, you can string a number of these together to present a convincing illusion of continuity. Digg Swarm makes requests every 30-60 seconds, yet visually dribbles out activity continuously. Worries about certain events being dropped are basically swept under the rug here, the picture is lossy.
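One way to get that film-like effect, sketched here as an assumption rather than a description of Swarm's actual code, is to replay each polled batch one polling interval late, keeping the original spacing between events. The function name and the sample events are hypothetical.

```python
def playback_schedule(events, poll_interval):
    """Shift each (timestamp, payload) event later by one polling interval.

    Events fetched in a single batch keep their original spacing, so the
    display dribbles them out instead of showing them all at once.
    """
    return [(timestamp + poll_interval, payload)
            for timestamp, payload in sorted(events)]


# A batch fetched at t=60 seconds, covering activity from the previous minute.
batch = [(42, "digg on story C"), (3, "digg on story A"), (17, "digg on story B")]
for show_at, payload in playback_schedule(batch, poll_interval=60):
    print(show_at, payload)
# 63 digg on story A
# 77 digg on story B
# 102 digg on story C
```

The cost is that everything on screen is at least one interval stale, which is the same trade a film projector makes.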

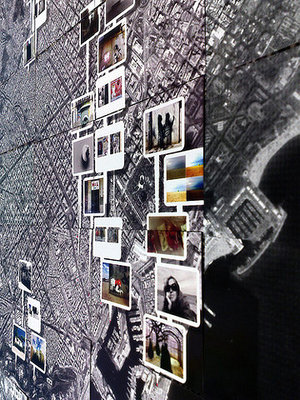

All of this is motivated by a desire to pump dynamic, flowing data through the "thin straw" of the internet. Here's another experiment that's starting to come back around, for SOM's San Francisco City Model. The monochrome renders are rich, heavy and detailed. The false-color renders below encode information about tax assessor's parcels. Combined, the maps give you a convincing form of interaction including per-building highlights and links to parcel information faked with simple images and a bit of code.
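The false-color trick can be sketched as packing an identifier into the three color channels of a pixel, then unpacking it when the user hovers or clicks. The 24-bit packing and the function names below are assumptions for illustration; the actual encoding used for the city model isn't described here.

```python
def id_to_rgb(parcel_id):
    """Pack an integer ID into an (r, g, b) triple, 8 bits per channel."""
    if not 0 <= parcel_id < 2 ** 24:
        raise ValueError("ID does not fit in 24 bits")
    return (parcel_id >> 16) & 0xFF, (parcel_id >> 8) & 0xFF, parcel_id & 0xFF


def rgb_to_id(rgb):
    """Recover the integer ID from a pixel sampled in the false-color image."""
    r, g, b = rgb
    return (r << 16) | (g << 8) | b


print(id_to_rgb(70000))            # (1, 17, 112)
print(rgb_to_id((1, 17, 112)))     # 70000
```

This only works if the false-color image is stored losslessly and drawn without anti-aliasing, since any blended pixel decodes to a different ID.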

Oakland Crimespotting is a similar bit of foolishness. Initially data was harvested by scraping vintage late-1990s CrimeView map products; now it comes from Excel spreadsheets updated nightly. Yet the view we try to present is a continuous, unbroken surface for exploration. We routinely get asked about same-day crimes by people lulled into a sense of immediacy. It doesn't help, of course, that we promise to tell people what the sirens in their neighborhood are in realtime.

This project directly led to Modest Maps, a condensation of thoughts on good, flexible, online cartography for designers.

It also connects to this years-long meditation on Oakland's historical geography. Motivated by a project for a sustainable urban design class, my girlfriend Gem researched century-old maps of the east bay to understand why certain city streets were freakishly wide like this view of E. 21st St and 14th Ave. The thinking was that all this seemingly wasted space might be more effectively used with a permeable road surface and native vegetation to revive the natural water filtration systems disrupted by culverted creeks and concrete construction.

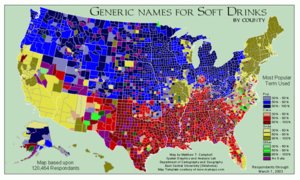

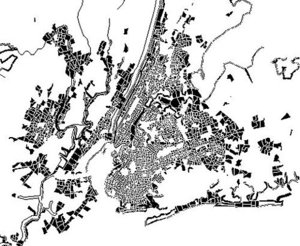

Here's a whole series of downtown Oakland maps. It's great fun to run through them, showing where construction has taken place, where rail used to run, what the oil companies considered relevant, and where the freeways were eventually dropped in. In the 1912 map you can see exactly where the old Key Route streetcars used to run, and therefore why certain roads are now uselessly wide. I've just won an EBay auction for a 1967 road map that will show the drastically modified, post 1950s landscape of raised highways.

This last Oakland map is actually sourced from OpenStreetMap, an attempt to introduce Wikipedia's crowdsourcing model to geographical data. I like that it shows a lot more pedestrian-relevant information than the Microsoft Virtual Earth one immediately before. The really interesting thing about this whole series of Oakland Maps is that they're tightly matched to the specific cartographic projection used by Google and Microsoft and everybody else: it's a standard that provides strong footing for a comparison.

This is something similar we've been working on for the London Organizing Committee for the Olympic Games. Again you can see the contrast between a map that draws attention to what's on the ground vs. one that helps your satnav get you through town unscathed.

Being able to take certain things for granted, like projections, publishing mechanisms, and display libraries, allows for enormous variations in expression. There's also a bit of Google cargo cult mentality here, looking at the technological leavings of the biggest technology company out there. For better or worse, they've set the tone for geographic publishing by using the web more effectively and deferring complexity to the client browser.

All of this is a way of showing how Roy T. Fielding's 2000 PhD thesis on Representational State Transfer has utterly dominated the discourse of publishing through the web. It's an incredibly productive architectural idea, and it's been the primary area of experimentation and development for geeks like us since the implosion of the previous web bubble.

The new thing on the horizon, though, is a promise to stop faking it with really-realtime event-based notifications. Sudden interest in Jabber / XMPP, an instant messaging protocol from ~2001, is bubbling up from the kinds of system design and operations geeks building sites like Flickr and Twitter.

There's a connection to game development here, illustrated by a favorite paper of mine called The Continuous World of Dungeon Siege. In it, author Scott Bilas describes the technical challenges inherent in presenting an illusion of seamlessness. Some of it, the way in which the environment is loaded and constructed around the ever-shifting vantage point of the player, sounds a lot like the RESTful tile-based methodology driving the maps above. A lot of it also sounds an awful lot like the pipelines and data flows I'm hearing from friends working on massive, distributed services like Flickr.

These are the cartograms published in the wake of the 2004 U.S. presidential election. They were a major influence on our geographic thinking when they were first released: imagine, using color and spatial distortion to communicate the underlying complexity of a contentious national election.

Meanwhile, these are graphics from Nate Silver's 538, a very different current approach to electoral politics from the world of continuous statistical analysis in baseball. This is a lot more like the current environment, showing constant change from multiple daily polls. Nate taps a constant stream of contradictory, voluminous data to create simulations and ultimately predictions of the election outcome in November.

They also do maps. Right now they're predicting a 90% chance of an Obama win of some form, so yay.

I'm going to close with a slide about capacity planning from Flickr's John Allspaw. I love how on the right, they're translating their capacity metrics into days-of-Flickr-remaining. REST is going to stick around for as long as it continues to be a productive metaphor that can guide action and make predictions, but we're going to see a lot of this stream-tapping behavior bubble up over the next few years.

Extra thanks to Andrew, Boris, Jenn, and Mouna for organizing the festivities. It was nice to visit Laika in person!

Sep 24, 2008 7:02am

post-ONA conference

A few weekends ago, I had the opportunity to participate in the 2008 Online News Association conference in Washington DC. Laura Cochran of the Washington Post invited me to join a panel on mapping crime, along with USAToday datacruncher Paul Overberg and LA Times power couple Sean Connelly and Katy Newton.

The conference got off to an inauspicious start when Tina Brown capped off a terrible keynote Q&A by calling a journalism student an "easy lay". Fortunately, the How We Built It track featuring MSNBC, New York Times, Las Vegas Sun and others was a perfect way to spend a conference Friday. News organizations producing interactive pieces for the web described the various challenges they encountered, and it was fascinating to hear about the sausage-making process from the inside.

One of the most important things I learned in this series of talks is that no one likes their IT department, not even at the New York Times. Presenters repeatedly described ways in which they had to circumvent or overrule their own IT infrastructures to get anything interesting done. Two stood out. I asked the designers and developers at the Las Vegas Sun about the political/technical environment in their organization that allowed them to explore and refine iterative, agile production methods, and they said that it was necessary for them to go straight to the top for a mandate from the editor to give the group decision-making power over their development and deployment environment. Matt Ericson and Aron Pilhofer of the NYT described a more circuitous approach. Apparently, the NYTimes.com online election coverage is hosted entirely on Amazon's pay-as-you-go EC2 service, and totally detached from the content management and other server infrastructure at the Times. They use Ruby on Rails and other open-source software components to develop and deploy their work, and their seven-person team is wholly responsible for the care and feeding of these servers. This was a shocking thing to learn, and it raised my opinion of the NYT team by a solid order of magnitude.

Despite such a high level of problem-solving ingenuity, the majority of people in the business are journalists first and programmers last. The technical proficiency and funding available to publishers less blessed or lucky than the major dailies is substantially lower, and forces them into products like Caspio. This company had a substantial percentage of ONA attendees by the short hairs with their hosted solutions for data-driven web pages and mashups. I'm convinced that this is bad news, but I'm already predisposed to suspicion of turnkey software for this kind of work. I've also read plenty about the product in particular from journalist/technologist Derek Willis, who offers six reasons to look past Caspio in his blog archives.

The silver lining on this particular cloud is Django, the Python web framework developed by Simon Willison, Adrian Holovaty, and others. Django is finding a solid niche in the journalism world as a thoughtful, educated, D.I.Y. response to hosted rentware, and a kind of software Schelling point for journalists looking to really understand data-driven reporting.

The end-of-conference Online Journalism Awards ceremony was a parade of excellent interactive and data-driven work. The impression I got here was of deadline-motivated ingenuity on a tight budget. My co-panelists Sean and Katy especially illustrate the point with their 2007 winner Not Just A Number, a look at homicide in Oakland. Despite focusing on the same geographical area and the same topic, it's such a wildly different project from our Oakland Crimespotting. By narrowing their sights to the year's killings and entering the community itself to talk with those affected, Not Just A Number shows how narrative rigor can color statistical data with a backstory.

Overall, the conference had a distinctly different feel than the tech-oriented events I generally participate in. For one, there's an undercurrent of a siege mentality in journalism right now, with newsrooms cutting staff and print operations frozen stiff in the headlights of the internet. The focus on narrative and story gives a softer edge and an escape valve, though - this group is not primarily a tech-driven community, but they catch on to new developments quickly and bend them into the service of storytelling.

Sep 21, 2008 11:11pm

map beautiful

I'm continuing my months-long meditation on city cartography with a jump into OpenStreetMap, the "editable map of the whole world ... being built largely from scratch ... released with an open content license."

A few weeks back, I released Cascadenik, an application of cascading stylesheets to the Mapnik rendering library. The rationale for writing it in the first place was to replace the base map we're using for Oakland Crimespotting. I love the look of Microsoft's VEarth cartography, but it's missing data crucial to an understanding of urban crime: parks, schools, businesses, and transit. OpenStreetMap is the only free-as-in-speech way to create a beautiful, useful, and complete city map that can incorporate such ground truths. The NavTeqs and Teleatlases of the world, where the online mapping services get their data, are primarily interested in and funded by navigation, so it's not going to be in their interest to go neighborhood-deep to track locations of playgrounds or liquor licenses.

It's going to take a substantial outlay of cognitive surplus to get all this information into the map, but I've started by working on the visual appearance to get a feel for OSM's data:

(In-progress stylesheets can be found bundled with Cascadenik in mapnik-utils)

There are more than a few social decisions encoded in those styles:

- I'm trying to foreground modes of public transportation, especially rail. BART plays such a huge role in the Bay Area, and an understanding of where stations lie in relation to homes and businesses is crucial to understanding the local streetscape. For an historical view of this, check out my old flea market mapping experiment, and pay attention to the difference in appearance between the 1912 map, made to show rail coverage, and the 1936 map, made by Shell Oil to hide it.

- Taking a cue from the 1936 map and VEarth's road rendering, there's a much sharper distinction between major and minor roads, with minor roads dropping back to form a spidery matrix of connectivity between major roads and transit stops. This seems to help with the legibility of parks and other features at zoomed-out views, showing how they anchor neighborhoods and provide textural variety. It also makes room for labels on schools & parks that would otherwise be crowded out by street names.

- A lot of excess detail is being intentionally omitted. Parking lots and ATMs exist in the standard OpenStreetMap tileset, but I'm leaving those out here because I don't feel that they're helpful. I'm also omitting underground rail; it's just not relevant to surface use.

- The color of freeways is red, a fairly standard decision seen on most U.S. maps. Major roads are all fairly pale, with small variations in color around yellow and orange to make them visible but less overpowering than the blues and reds used by OpenStreetMap's own tiles.

Working with Potlatch, the Flash-based OSM editor, has been interesting. Although it does the job exceedingly well, I'd welcome an editing interface derived more from KidPix and SimCity than AutoCAD or ArcGIS. My dream is a UI that dispenses with tagging in favor of tools like "road", "school", "park", or "bulldozer".

The new tiles are being updated from fresh OSM data on an almost-daily basis, and hosting on S3 means you can hit it pretty much all you want for your nine-county Bay Area mapping needs.

Now, to get all these schools included.

Aug 31, 2008 4:34pm

tracking hurricanes

This just went out yesterday, our new Hurricane Tracker for MSNBC:

I'm so impressed with the work, co-created by Tom and Geraldine with raw data licensed from Hurricane Mapping. They're evacuating New Orleans right now, I hope the reaction to this storm isn't as tragically bungled as the last one.

Aug 30, 2008 5:24am

neocartography

Andrew just added a last-minute SXSW panel to the picker.

Here's what it's about:

Neocartography

Designers are dropping maps into their applications with little concern for usability or design and users are getting "Google Map fatigue". We need to move beyond the simple pin-dropping and consider appropriate mapping interfaces. This panel will look at the current and emerging tools to provide compelling geographic interaction and visualization.

It's going to be some combination of Andrew Turner, Aaron Cope, Paul Smith, Wilson Miner, Tom Carden, Andy Woodruff, Nathan Yau, and me.

Aug 30, 2008 5:22am

cascadenik: cascading sheets of style for mapnik

Style sheets were available in electronic publishing systems from around 1980 (see Chapter 2 and 3). Combined with structured documents, style sheets offered late binding (Reid 1989) of content and presentation where the content and the presentation are combined after the authoring is complete. This idea was attractive to publishers for two reasons. First, a consistent style could be achieved across a range of publications. Second, the author did not have to worry about the presentation of the publication but could concentrate on the content.

(Hakon Wium Lie, Cascading Style Sheets)

Mapnik, the open source map rendering library I've written about recently, uses an XML language similar in spirit to SLD for applying visual style to map vector data.

It's definitely tolerable, but otherwise not particularly good.

Having recently completed a country-wide geographic treatment of the UK for LOCOG (London Organising Committee of the Olympic Games), I've had a chance to experiment with ways to improve the state of the art in Mapnik styling. CSS, the ubiquitous format understood by all halfway-modern web browsers, offers a way forward.

I've implemented a pre-processor that accepts CSS-type stylesheets and produces traditional Mapnik stylesheets. Imagine a program that takes HTML and CSS files, sprinkles the HTML with FONT tags, and makes them viewable to Netscape 2.0 users, and you've got the idea.

Check out a brief tutorial, or grab the source code from the mapnik-utils project.

My hope here is that the characteristics of CSS that made it acceptable to designers and bumped the visual and semantic sophistication of the web will translate to the world of maps as well. Mapnik's existing styles get the job done, but are unsatisfying because they force the designer to develop and implement the kind of class-based logic that CSS makes easy. It's still early in this particular universe of concern, but the suddenly rising viability of the OpenStreetMap project is going to make map design for the web a buzzing, vibrant front in another year or two, tops.

CSS has a few properties that make it a great candidate for mapping:

- Stylesheets can live separately from the content they apply to, with many pieces of content sharing rules defined by single a source. In contrast, Mapnik's own "stylesheet" terminology refers to an XML format that blends content (the vector data that maps are made of) with appearance. This is going to be interesting as the availability of data like OpenStreetMap's creates a need for attractive CSS bases for people to work from.

- The "C" in CSS is for "Cascade", shorthand for a set of expectations governing how rules from a variety of sources can be combined. In CSS, it's possible to state the equivalent of "all text is black, but proper nouns should be highlighted with yellow." Maps present similar needs. There is often a hierarchy of feature types that need to share some visual properties but not others: roads, toll roads, toll roads under construction, etc.

- CSS interacts with HTML largely through element types, classes and IDs. The class concept in particular makes it possible to mark content with meaningful labels, and apply visual styles based on those labels. Maps present feature classes like public buildings, various kinds of parkland, etc., yet Mapnik has no such concept of class.

- CSS clearly defines how relative addresses ought to be handled, in the case of linked files like background images. According to the CSS specs, addresses are always relative to the stylesheet in which they're used, not the content document from which the stylesheet is linked. This behavior is predictable and makes for easy centralization and re-use of visual rules. Mapnik expects that image files can be found in absolute locations, on the same computer where it's being run.
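The relative-address rule in the last point is easy to sketch. This is a minimal illustration, not the pre-processor's actual code: the function name `resolve_asset` and the example URLs are made up, and the only real API used is `urljoin` from Python's standard library.

```python
from urllib.parse import urljoin

def resolve_asset(stylesheet_url, asset_ref):
    """Resolve an image reference against the stylesheet that contains it,
    the way CSS says url(...) values should be treated."""
    return urljoin(stylesheet_url, asset_ref)

# Hypothetical locations, for illustration only.
stylesheet = "http://example.com/styles/roads/map.css"

print(resolve_asset(stylesheet, "images/shield.png"))
print(resolve_asset(stylesheet, "../shared/park-pattern.png"))
```

Because the stylesheet's own location is the base, a folder of styles and images can be moved or shared as a unit without editing any paths.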

The basic improvement offered by CSS is that the linkage has been flipped around to point in the opposite direction. SLD and Mapnik both have data layers that specify how they are to be rendered via explicit connections to declarations of color, line weight, etc. It's better to do the opposite: classify data layers with meaningful categories and create separate styles that act on those categories. The style rules point to the things they apply to, e.g. "roads should be black lines, while schools should be filled in with yellow."
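Here is a toy sketch of that flipped linkage, under loud assumptions: the `Layer` type, the class names, and the property names are all invented for illustration and are not what the pre-processor or Mapnik actually use. It also simplifies the cascade to plain source order (later rules win), where real CSS additionally weighs selector specificity.

```python
from dataclasses import dataclass, field

@dataclass
class Layer:
    """A data layer tagged with meaningful classes; it knows nothing about style."""
    name: str
    classes: set = field(default_factory=set)

# Style rules point at the classes they apply to, in source order.
# Class and property names here are hypothetical.
RULES = [
    ("road", {"line-color": "black", "line-width": 2}),
    ("toll", {"line-color": "red"}),
    ("construction", {"line-dasharray": "4,2"}),
    ("school", {"polygon-fill": "yellow"}),
]

def style_for(layer, rules=RULES):
    """Merge declarations from every rule whose class the layer carries.
    Later rules override earlier ones (a simplified cascade)."""
    style = {}
    for cls, declarations in rules:
        if cls in layer.classes:
            style.update(declarations)
    return style

toll_road = Layer("toll roads", {"road", "toll"})
school = Layer("schools", {"school"})

print(style_for(toll_road))
print(style_for(school))
```

The toll road inherits its line width from the general road rule and only overrides the color, which is the shared-but-not-identical hierarchy that maps tend to need.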

So far where I've gotten is a two-week-old proof of concept that generates good, clean Mapnik stylesheets. It's usable now, but there are no doubt edge cases where my handling of things like filters will need to be tweaked somewhat.

Read the quick tutorial, and grab the source code from the mapnik-utils repository.

Aug 26, 2008 6:39am

uxweek 2008

Last week was Adaptive Path's blowout annual event, UX Week. I was tremendously excited about it, and it did not disappoint. My one previous experience at this conference was in 2006 in Washington DC, when I gave my first talk longer than 20 minutes, Data Visualization: Why Now? That one netted us my co-presenter this year, Tom Carden, whose work on OpenStreetMap I name-checked during my hour-long survey of new data visualization work.

This time, we did two sessions. Tom and I did a really, really full three-hour workshop extravaganza adapted from his amazing solo show at E-Tech this year. This was awesome. I talked for 90 minutes, had great audience participation, and walked away charged and energized ... I would love to do this one again. The scale and format (small room, 30-ish people?) were perfect, and the attendees asked tough, perceptive, illuminating questions that absolutely made the whole thing sing.

On Friday, I also took the main stage to deliver a bit of a departure from our usual talk topics. Generally, we talk about what we do and how we do it. This time, we put some order to a whole bag of ideas about illusion, sleight of hand, surfacing and technique that I had initially been working out for Interesting2008. I wasn't able to get to London, so I did the talk in town. This one was a sharp contrast. The material was something new and experimental for me, and the klieg light ballroom format makes for a strange speaker / audience relationship. Still, I felt like I had crossed some form of boundary and I'm anxious to polish the topic for another go.

The talk was called Greebles, Nurnies, Tiles, and Flair, and these are my slides and notes.

"Greebling" is a special effects term that makes sense if you've seen Star Wars ... all those little nubs on the Imperial Star Destroyer and other ships make it look big, and real. They're there to hide the fact that it's plywood and plaster, to help you believe that it's a mile long.

Tiles are a technique you'll be familiar with from Google Maps. The infinite, continuous road maps and satellite imagery are available over a regular broadband connection because Google serves them to you as small square images...

...that get stitched together into a seamless field by your web browser.
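For the curious, the arithmetic behind the trick is small. This sketch assumes the widely used spherical Mercator tiling convention (the world is a 2^zoom by 2^zoom grid of square tiles, with column and row counted from the top-left); the talk doesn't describe Google's internals, and the function names are made up for illustration.

```python
import math

def tile_for(lat_deg, lon_deg, zoom):
    """Return the (column, row) of the tile containing a point,
    assuming a 2**zoom by 2**zoom spherical Mercator grid."""
    n = 2 ** zoom
    lat = math.radians(lat_deg)
    x = int((lon_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat)) / math.pi) / 2.0 * n)
    # Clamp so points on the far edges still land on a real tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)

def tiles_around(x, y, zoom, radius=1):
    """List the tiles a browser would fetch to cover a small window
    centered on tile (x, y), skipping anything off the edge of the grid."""
    n = 2 ** zoom
    return [(x + dx, y + dy)
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)
            if 0 <= x + dx < n and 0 <= y + dy < n]

# Downtown Oakland at a city-scale zoom level.
col, row = tile_for(37.8044, -122.2711, 10)
print(col, row, tiles_around(col, row, 10))
```

Nine small images cover the window; panning just shifts which nine get asked for.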

Sleight of hand.

Tiled image maps are a stand-in for a larger strategy for dealing with continuity. How do you use a clipped, staccato medium like the internet and the digital computer to simulate infinity?

The world of computer gaming has been dealing with these questions for some time. There's an excellent article by Scott Bilas from Gas-Powered Games called The Continuous World of Dungeon Siege. In it, he describes the technical challenges of presenting a seamless world.

It's similar in concept to the tiled slippy maps you can see in your browser: divide the world into discrete chunks, connect them to one another, and figure out how to stream everything into the play environment from outside the player's field of vision, so they are never presented with a loading screen.

There is no global coordinate system, all is relative.
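A toy version of the idea, with everything invented for illustration (the chunk names, the offsets, the `chunks_to_load` function); it is not how Dungeon Siege is implemented, just the shape of "chunks that only know their neighbors, positioned relative to wherever the player is standing":

```python
from collections import deque

# Hypothetical world: each chunk only records where its neighbours sit
# relative to itself. No chunk has an absolute position.
WORLD = {
    "town":   {"bridge": (100, 0)},
    "bridge": {"town": (-100, 0), "forest": (100, 0)},
    "forest": {"bridge": (-100, 0), "cave": (0, 100)},
    "cave":   {"forest": (0, -100)},
}

def chunks_to_load(world, current, max_hops):
    """Walk outward breadth-first from the player's chunk and return
    {chunk: offset relative to the player's chunk} for everything
    within max_hops connections."""
    placed = {current: (0, 0)}
    queue = deque([(current, 0)])
    while queue:
        name, hops = queue.popleft()
        if hops == max_hops:
            continue
        ox, oy = placed[name]
        for neighbour, (dx, dy) in world[name].items():
            if neighbour not in placed:
                placed[neighbour] = (ox + dx, oy + dy)
                queue.append((neighbour, hops + 1))
    return placed

print(chunks_to_load(WORLD, "bridge", 1))
print(chunks_to_load(WORLD, "forest", 1))
```

Move the player from the bridge to the forest and the origin moves with them: the cave comes into range, the town drops out, and nothing ever needed a world-wide coordinate.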

This is becoming a core expectation of modern games: walk around World Of Warcraft or Grand Theft Auto for a few minutes to see.

Online mapping is a version of this in miniature. Our code library Modest Maps was developed to generalize the pattern. We started using it with geographical maps...

...but have started to apply the technique to non-geographic mappings: floorplans, ...

... and artworks, to name two.

All the Maps mashups out in the world are like portals into the Continuous World Of Google Maps - each one a square window onto the same world.

It's like looking at a blue whale through a letterbox. Nature's Timo Hannay meant this as a criticism, but Stamen's Tom Carden thinks this is awesome.

What if you could see, as you search for driving directions from San Bruno to Marin, that someone else is simultaneously crossing your path from Oakland to SF's Sunset district?

I talk about Google because it's familiar, but there are a lot of other distributed services starting to act like this. Continuous World Of Flickr, Continuous World Of Twitter are giant services but everyone sees a very small piece at a time.

Sleight of hand again:

The magic wand is there to make the hidden coin look less conspicuous.

Greebles are the parts that "look cool, but don't actually do anything" (C3PO). There's an entire discipline here composed of special effects artists and asset designers working to hide the plywood spaceships and simple game world polygons beneath an encrusted surface texture.

Textured surface gets you several things.

One is that it's proof of reality. Check out this map of Moscow (Kosmosnimki), with all the individual buildings marked and numbered. It makes the map look more like the territory.

Google Maps for Tokyo have logos for all convenience stores baked right into the imagery. I thought this was an experiment in advertising until I went there, and learned that conbini are one of the prime wayfinding mechanisms people use to figure out where they're going. The street numbering system is entirely different, so navigation takes place by landmark rather than coordinate.

With Cabspotting, we made an early decision to ditch the base map and show just the trails of each taxi. This bought us a lot of wiggle room, since the GPS trails don't match up to the roads very well and would have looked terrible. It also bought us the appearance of truth. If you can see the rush of cabs in SOMA after last call, or the dense cluster around the dispatch yard, or the thick line along Geary out to the Sunset, you believe that the data is true.

These kinds of surface signals are encountered everywhere. NASCAR without sponsor logos looks barren. Everyone knows that advertising is the lifeblood of the sport, and the logos on cars and driving suits remind you that these guys are legitimate, that someone cares about them enough to pay to be seen with them, says Adobe's Michael Gough.

AdBlock for browsers has been succeeded by ArtBlock.

Surface details like this are a kind of social signal that the textured surface is real and cared-for, that it can be grasped and held on to.

Compare and contrast the visual appearance of OpenStreetMap two years ago vs. now: it's more credible and therefore more useful, because it's beautiful.

Sleight of hand again:

I have been talking about surfaces and misdirection.

What's underneath?

Social sites are taken seriously when they have crowds of users, loads of data, and all the scaling problems that accompany success. "Scaling is always a catch up game, but it's the best game there is," says Flickr's Kellan Elliott-McCrea.

Big data, crowds of users, sheets of information poking up through the surface.

Credibility comes from looking busy, and being continuous: having something on page two, page three, etc. You will inevitably be asked to work on "social features" - most of the labor is getting people to give a damn, and getting the details right on the unbroken layer under everything else.

Approach this by starting underneath the surface.

Aug 5, 2008 12:28am

blog all dog-eared pages: understanding media

Marshall McLuhan entered my world in 1994 or so, when I first subscribed to Wired magazine while still in high school. I still had a year before I got online, so the bits of the articles that began with "http:.." didn't yet make sense to me. I've been bathing in "medium is the message" talk since I was 16 years old, without quite knowing what it means.

I approached Understanding Media as a sort of founding work, trying to get some sense of what Web 1.0's 1960's patron saint was on about. The book is equal parts frustrating and fascinating, especially at the beginning. Right away I had difficulty with two things: McLuhan's definition of "media" (electric light is given as an example, along with the usual radio, TV, film), and his use of terms like "hot" and "cool" without explanation. Radio is a hot medium, television a cool one. There's not a lot here to grab hold of, and I still can't quite get my head around what the temperature idea refers to.

The book is essentially a 300-page-long series of metaphorical assertions. McLuhan prefaces a large number of them with "it is well-known...", "anyone could tell you...." I quickly had to acclimate to this style.

There are just a few big ideas I've walked away with.

One is the frequently-repeated image of a human nervous system extended out past the skin and body through the use of electronic communications media. The book was written well before the Internet, but the founding rhetoric of the 1990's is all there. McLuhan starts with the idea that telecommunications is a factual expansion of the human nervous system out into the world, and derives a number of metaphors on the calmness of nerves and the farming of perception to corporate interests.

Another is the following of all threads, from a technology to all its implications and outcomes. Bruno Latour used a similar "full hardware stack" approach in Aramis when pointing out that the soft, fleshy, and therefore squeezable-during-rush-hour human body is as much a design feature of public transit systems as the rails and vehicles that carry it. McLuhan focuses on perception and all the senses, showing how all the broadcast and point-to-point media imply different sensual responses, from the tactile clothing of the TV generation to the receptiveness to Hitler's rhetoric via the radio medium. In his mention of abrasiveness, I immediately thought of the "shred" / "grind" terminology in popular culture of the past 15-odd years: is there something about the skate video medium that calls up a sandpaper touch? Do the psychological effects of cocaine, ecstasy, etc. make necessary the highly-pitched fuzz of dance music? Simon Reynolds says much of techno was a functional musical form adapted to serve its physical and pharmacological environment. I don't even know how to begin applying these ideas to our emerging world of little square friends - the thought scares me.

I marked many more pages than are excerpted here; McLuhan is a very quotable writer even though much of what's quoted is significant more in the reading than the writing.

Pages 65-66, on the sensitivity of the artist to technological change:

The artist can correct the sense ratios before the blow of new technology has numbed conscious procedures. He can correct them before numbness and subliminal groping and reaction begin. If this is true, how is it possible to present the matter to those who are in a position to do something about it? If there were even a remote likelihood of this analysis being true, it would warrant a global armistice and period of stock-taking. If it is true that the artist possesses the means of anticipating and avoiding the consequences of technological trauma, then what are we to think of the world and bureaucracy of "art appreciation"? Would it not seem suddenly to be a conspiracy to make the artist a frill, a fribble, or a Milltown? If men were able to be convinced that art is precise knowledge of how to cope with the psychic and social consequences of the next technology, would they all become artists?

Page 68:

Once we have surrendered our senses and nervous systems to the private manipulation of those who would try to benefit from taking a lease on our eyes and ears and nerves, we don't really have any rights left. Leasing our eyes and ears and nerves to commercial interests is like handing over the common speech to a private corporation, or like giving the earth's atmosphere to a company as a monopoly. Something like this has already happened with outer space, for the same reasons that we have leased our central nervous systems to various corporations. As long as we adopt the Narcissus attitude of regarding the extensions of our own bodies as really out there and really independent of us, we will meet all technological challenges with the same sort of banana-skin pirouette and collapse.

Page 158, on maps:

Prince Modupe tells in his autobiography, I Was A Savage, how he had learned to read maps at school, and how he had taken back home to his village a map of a river his father had traveled for years as a trader:

"...my father thought the whole idea was absurd. He refused to identify the stream he had crossed at Bomako, where it is no deeper, he said, than a man is high, with the great widespread waters of the vast Niger delta. Distances as measured in miles had no meaning for him.... Maps are liars, he told me briefly. From his tone of voice I could tell that I had offended him in some way not known to me at the time. The things that hurt one do not show on a map. ... With my big map-talk, I had effaced the magnitude of his cargo-laden, heat-weighted tracks."

Page 183, on clowns, bicycles, and eggs: