tecznotes

Michal Migurski's notebook, listening post, and soapbox. Subscribe to this blog.

Check out the rest of my site as well.

Dec 14, 2016 6:16am

landsat satellite imagery is easy to use

Helping Bobbie Johnson with the Medium Ghost Boat series about a boat of migrants that’s been missing since 2014, I had need of satellite imagery for context to illustrate a dataset of boat sightings in the Mediterranean Sea off the coast of Libya.

(near Zuwarah, Libya)

Fortunately, Landsat 8 public domain U.S. government imagery exists and is easy to consume and use if you’re familiar with the GDAL collection of raster data tools. Last year, Development Seed worked with Astro Digital to create Libra, a simple browser of Landsat imagery over time and space:

At libra.developmentseed.org, you can browse images spatially and sort by cloud cover to pick the best recent Landsat products: click a circle, then choose from the dated images on the right side of your browser window. Imagery is not provided as simple JPEG files; instead, it is divided into spectral bands described at landsat.usgs.gov. Four of them can be used to generate output that looks like what a person might see looking down on the earth: Blue (2), Green (3), Red (4), and Panchromatic (8). Download and extract these four bands using curl and tar; a bundle of bands will be approximately 760MB:

curl -O https://storage.googleapis.com/earthengine-public/landsat/L8/038/032/LC80380322016233LGN00.tar.bz

tar -xjvf LC80380322016233LGN00.tar.bz LC80380322016233LGN00_B{2,3,4,8}.TIF

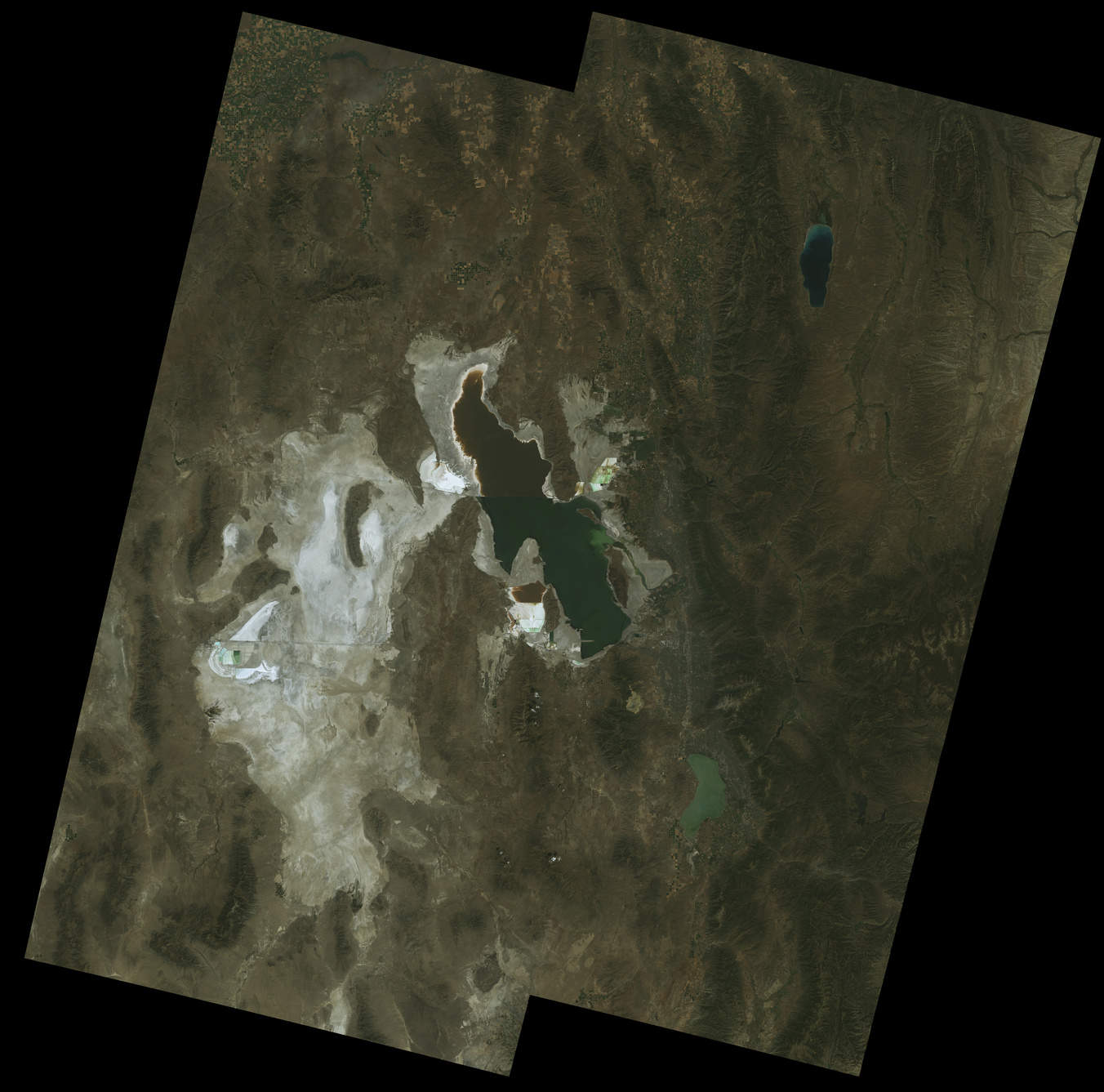

Combining these bands is possible with a processing script I’ve adapted from Andy Mason, which corrects each band before merging and pansharpening them into a single RGB output like this:
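Andy Mason's script is not reproduced here. As a rough, hypothetical stand-in for just the pansharpening step, GDAL 2.1 and later include gdal_pansharpen.py, which takes the panchromatic band first, then the spectral bands in the order they should appear in the output, then the output file. This sketch assumes the band files extracted by the tar command above; the function name pansharpen_scene and the default output name are made up, and unlike Andy's script it performs no per-band color correction.

```shell
# Hypothetical helper: pansharpen one Landsat 8 scene into an RGB GeoTIFF.
# Assumes GDAL 2.1+ (for gdal_pansharpen.py) and the *_B2/B3/B4/B8.TIF files
# extracted above. No color correction is done here.
pansharpen_scene() {
  local scene="$1"                   # e.g. LC80380322016233LGN00
  local out="${2:-${scene}-rgb.TIF}" # default output name (an assumption)
  # Panchromatic band 8 first, then spectral bands in output order:
  # Red (4), Green (3), Blue (2).
  gdal_pansharpen.py \
    "${scene}_B8.TIF" \
    "${scene}_B4.TIF" "${scene}_B3.TIF" "${scene}_B2.TIF" \
    "$out"
}
```

For example, `pansharpen_scene LC80380322016233LGN00` would write LC80380322016233LGN00-rgb.TIF. The result keeps Landsat's 16-bit values, so it may look dark in ordinary viewers until stretched, for instance with `gdal_translate -ot Byte -scale`.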

So that’s a good start, but it’s only a corner of Utah’s Great Salt Lake when you might want an image of the whole lake. Each file has large, useless areas of black around the central square. Download four images overlapping the lake and extract the four bands from each:

- LC80380322016233LGN00.tar.bz

- LC80390322016240LGN00.tar.bz

- LC80390312016240LGN00.tar.bz

- LC80380312016233LGN00.tar.bz
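Fetching all four scenes can be scripted. This sketch assumes every scene follows the same URL pattern as the single example above, with the WRS-2 path and row read from characters 4-6 and 7-9 of the scene ID (038 and 032 in LC80380322016233LGN00). That pattern is inferred from one URL rather than a documented API, the bucket layout may have changed since this was written, and the helper names are hypothetical.

```shell
# Hypothetical helper: build the archive URL for a Landsat 8 scene ID,
# assuming the path/row layout seen in the single example URL above.
landsat_url() {
  local scene="$1" path row
  path=$(printf '%s' "$scene" | cut -c4-6)   # WRS-2 path, e.g. 038
  row=$(printf '%s' "$scene" | cut -c7-9)    # WRS-2 row, e.g. 032
  echo "https://storage.googleapis.com/earthengine-public/landsat/L8/${path}/${row}/${scene}.tar.bz"
}

# Download each scene and extract only the Blue, Green, Red and
# Panchromatic bands (2, 3, 4 and 8).
fetch_bands() {
  local scene
  for scene in "$@"; do
    curl -f -O "$(landsat_url "$scene")" || return 1   # -f: fail on HTTP errors
    tar -xjvf "${scene}.tar.bz" \
      "${scene}_B2.TIF" "${scene}_B3.TIF" "${scene}_B4.TIF" "${scene}_B8.TIF"
  done
}
```

Usage: `fetch_bands LC80380322016233LGN00 LC80390322016240LGN00 LC80390312016240LGN00 LC80380312016233LGN00`.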

Use gdalwarp to reproject each of the separate band raster files into a single shared projection, in this case the common spherical web mercator:

gdalwarp -t_srs EPSG:900913 LC80380322016233LGN00_B2.TIF LC80380322016233LGN00_B2-merc.TIF

gdalwarp -t_srs EPSG:900913 LC80390312016240LGN00_B2.TIF LC80390312016240LGN00_B2-merc.TIF

# repeat for 14 more bands
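Rather than typing all sixteen commands, a loop can cover every band of every scene. A minimal sketch, assuming the extracted files sit in the current directory and keep the names above; warp_all is a hypothetical name.

```shell
# Hypothetical helper: reproject every extracted band (2, 3, 4, 8) of every
# scene in the current directory, writing <name>-merc.TIF beside each input.
# The glob does not match earlier -merc.TIF outputs or other bands.
warp_all() {
  local tif
  for tif in LC*_B[2348].TIF; do
    [ -e "$tif" ] || continue          # glob matched nothing
    gdalwarp -t_srs EPSG:900913 "$tif" "${tif%.TIF}-merc.TIF"
  done
}
```

If a -merc file already exists, gdalwarp writes into it rather than replacing it; its -overwrite flag forces a fresh file on re-runs.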

Then, use gdal_merge to combine them into individual band mosaics that cover the whole area:

gdal_merge.py -o mosaic_B2.TIF -n 0 LC*_B2-merc.TIF

gdal_merge.py -o mosaic_B3.TIF -n 0 LC*_B3-merc.TIF

gdal_merge.py -o mosaic_B4.TIF -n 0 LC*_B4-merc.TIF

gdal_merge.py -o mosaic_B8.TIF -n 0 LC*_B8-merc.TIF
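The four merge commands differ only in the band number, so they collapse into one loop. A minimal sketch with a hypothetical function name; the -n 0 flag is the same one used above, telling gdal_merge.py to ignore zero-valued pixels so one scene's black border does not paint over its neighbor's data.

```shell
# Hypothetical helper: mosaic each band across all reprojected scenes.
# -n 0 ignores input pixels with value 0 (the black borders).
merge_bands() {
  local band
  for band in 2 3 4 8; do
    gdal_merge.py -o "mosaic_B${band}.TIF" -n 0 LC*_B${band}-merc.TIF
  done
}
```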

Now, Andy’s processing script can combine a larger area and color-correct all four images together:

Jul 28, 2016 11:07pm

openstreetmap: robots, crisis, and craft mappers

The OpenStreetMap community is at a crossroads, with some important choices on where it might choose to head next. I spent last weekend in Seattle at the annual U.S. State Of The Map conference, and observed a few forces acting on OSM’s center of gravity.

I see three different movements within OpenStreetMap: mapping by robots, intensive crisis mapping in remote areas, and local craft mapping where technologists live. The first two represent an exciting future for OSM, while the third could doom it to irrelevance.

The OpenStreetMap Foundation should make two changes that will help crisis responders and robot mappers guide OSM into the future: improve diversity and inclusion efforts, and clarify the intent of OSM’s license, the ODbL.

Robot Mappers

Engineers from Facebook showed up to talk about how machine-learning and artificial intelligence (“robot”) techniques might help them produce better maps. Facebook has been collaborating for the past year with another SOTMUS attendee, DigitalGlobe, to consume and analyze high-resolution satellite imagery searching for settled areas as part of its effort to expand internet connectivity.

However, there are still parts of the world in which the map quality varies. Frequent road development and changes can also make mapping challenging, even for developed countries. … In partnership with DigitalGlobe, we are currently researching how to solve this problem by using a high resolution satellite imagery (up to 30cm per pixel). … For small geographical areas, this technique has allowed our team to contribute additional secondary and residential roads to OSM, offering a noticeable improvement in the level of details of the map.

OSM has long had a difficult relationship with so-called “armchair mapping,” and Facebook’s efforts here are a quantum leap in seeing from a distance. This form of mapping typically requires the use of third party data. For sources such as non-public-domain satellite imagery, robot mappers must be sure that licenses are compatible with derived data and OSM’s own ODbL license. Copyright concerns can make or break any such effort. Fortunately, OSM has a sufficiently high profile that contributors rarely attempt to undermine the ODbL directly. Instead they choose to cooperate with its terms, to the extent they understand the license.

Crisis Mappers

Meanwhile, representatives of numerous crisis mapping organizations showed up to talk about the use of OSM for mapping vulnerable, typically remote populations. Dale Kunce of the Red Cross Missing Maps project gave the second day keynote, Lindsey Jacks gave an update on her work with Field Papers (Stamen’s ongoing product I originally called Walking Papers), and numerous members of organizations like Humanitarian OpenStreetMap Team (HOT), Digital Democracy, and others attended workshops on collecting data.

Disaster response and crisis mapping organizations take a more direct, on-the-ground approach to map features that can’t be seen remotely or require local knowledge to interpret. While these efforts have often used remote data, as in the satellite-aided Haiti earthquake response in 2010, that data has always been paired with ground efforts in the area concerned.

When major disaster strikes anywhere in the world, HOT rallies a huge network of volunteers to create, online, the maps that enable responders to reach those in need. … HOT supports community mapping projects around the world and assists people to create their own maps for socio-economic development and disaster preparedness.

The populations most in need of crisis-response mapping efforts are typically furthest from OSM’s W.E.I.R.D. founding core. Such efforts will succeed or fail based on their participation. OSM should do a better job of welcoming them.

Craft Mappers

Historically, OpenStreetMap activity took place in and around the home areas of OSM project members, as a kind of weekend craft gathering winding up in a local pub. OSM originated from a mapping party model. Western European countries like England, Germany, and France achieved high coverage density early in the project’s history due to active local mappers carrying GPS units, riding bikes to collect data, and getting together at a pub afterward. Mapping pubs and similar amenities was a cultural touchstone for the project’s founding participants. The project has always featured better-quality data in areas where these craft mappers lived, for example near universities with computer science or information technology programs.

Many historic OSM tendencies, such as aversion to large-scale imports and a distinctly individualist technical worldview, are the result of this origin. They mirror the histories of other open source and open data projects, which often start as itch-scratching projects by enthusiastic nerds. Former OSM Foundation board member Henk Hoff’s 2009 “My Map” keynote is a great example of OSM’s early focus on areas local to individual mappers. The founding work of this branch of the community treats the project as a kind of large-scale hobby, like craft brewing or model railroading.

At its current stage of development, OSM’s public communications channels seem to be divided amongst these communities. I heard much frustration from crisis mappers about the craft-style focus of the international State Of The Map conference in Brussels later this year, while the hostility of the public OSM-Talk mailing list to newcomers of any kind has been a running joke for a decade. The robot mappers show up for conferences but engage in a limited way dictated by the demands of their jobs. Craft mapping remains the heart of the project, potentially due to a passive Foundation board who’ve let outdated behaviors go unexamined.

It’s a big downer to see a fascinating community mutely sitting out important discussions and decisions about the community’s future. Left to the craft wing, OSM will slide into weekend irrelevance within 5-10 years.

Two Modest Proposals

Two linked efforts would help address the needs of the crisis response and robot mapping communities.

First, U.S. technical gatherings like PyCon have been invigorated over the past years by codes of conduct and other mechanisms intended to welcome new participants from under-represented communities. State Of The Map US has done a great job at this, but the international conference and foundation seem to be engaging only in passive efforts, if any. A prominent code of conduct for in-person and online events would bring OSM in line with advances in other technology forums, which have chosen to value the contributions of diverse participants. With the appearance of crisis and disaster mapping on the OSM landscape over the past five years, we need to appeal to people of color disproportionately affected by crises and disasters, so they understand they are welcome within OSM’s core. Ignoring this or settling for “#FFFFFF Diversity” is a copout.

Ashe Dryden says this about CoCs at events with longtime friends running the show:

We focus specifically on what isn't allowed and what violating those rules would mean so there is no gray area, no guessing, no pushing boundaries to see what will happen. "Be nice" or "Be an adult" doesn't inform well enough about what is expected if one attendee's idea of niceness or professionalism are vastly different than another's. On top of that, "be excellent to each other" has a poor track record. You may have been running an event for a long time and many of the attendees feel they are "like family", but it actually makes the idea of an incident happening at the event even scarier.

Second, the license needs to be publicly and visibly explained and defended for the benefit of large-scale and robot participants. ODbL FUD (“fear, uncertainty, and doubt”) has been a grand tradition since the community switched licenses from CC-BY-SA to ODbL in 2012. I support the share-alike and attribution goals of the license. But passive communication about its intent and use has left the door wide open for unhelpful criticisms. The OSM Foundation publishes community guidelines on a separate wiki rather than a proper website. It’s not enough: the license needs to be promoted, defended, and consistently reaffirmed. Putting it under active discussion may even make it possible to adapt to new needs via a mechanism like Steve Coast’s license ascent, where “work starts out under a restrictive and painful license and over time makes its way into the public domain.”

I’ve struggled to write this post without overusing the word “actively,” but it’s the heart of what I’m suggesting. The OSMF Board has been at best a background observer of project progress, while OSM itself has slowly moved along Simon Wardley’s “evolution axis” from a curiosity to a utility:

A utility like today’s OSM requires a different form of leadership than the uncharted and transitional OSM of 2005-2010. There are substantial businesses and international efforts awkwardly balanced on a project still being run like a community garden, without visible strategy or leadership.

Thanks to Nelson, Mike, Kate, and Randy for their input on earlier drafts of this post. I am an employee of Mapzen, a Samsung-owned company that features OSM and other open data in our maps, search, mobility, and data products.

Jul 14, 2016 3:52am

quoted in the news

I’ve been quoted in a few recent news stories. That happens from time to time, but this is an unusually large batch.

If You Can’t Follow Directions, You’ll End Up on Null Island (Wall Street Journal)

Science reporter Lee Hotz got in touch after talking to my coworker Nathaniel Kelso about the Null Island geo-meme. It’s a short piece, but I hear it’s supposed to run on A-1 tomorrow and Lee did an impressive amount of interviewing to make sure the story was right. Nathaniel included Null Island in the wildly popular Natural Earth dataset around the time he worked at Stamen. We had just included Null Island in a basemap style for GeoIQ where Kate Chapman, another interviewee, had worked at the time. I was happy to lead Lee to Steve Pellegrin from Tableau, who was the initial creator of the meme that birthed a thousand t-shirts.

Stronger Together: Could Data Standards Help Build Better Transportation Systems? (Govtech)

Ben Miller digs into data availability for transportation systems. At Mapzen, we host Transitland for transit data based on Bibiana McHugh’s pioneering work on the General Transit Feed Specification (GTFS) at Portland TriMet. It’s the reason you can find a bus ride in both Google Maps and Mapzen Turn-By-Turn!

The App That Wants to Simplify Postal Addresses (The Atlantic)

Robinson Meyer writes one of the few non-sycophantic, critical articles about What3Words and addresses generally. I’ve got a few friends who work there so I hate to be mean, but in my eyes What3Words is an anti-institutional bet whose success, like the price of gold, will correlate strongly with the unraveling of basic public infrastructure. I can’t believe Meyer got the part-owner of privatized Mongol Post to cop to having heard about W3W at the Davos cartoon supervillain convention. Thinking about it makes me mad enough that I’ll change topics and leave you with this amazing quote from another Robinson Meyer article on word processors and history, from English professor Matthew Kirschenbaum:

Another interesting story that’s in the book is about John Updike, who gets a Wang word processor at about the time Stephen King does, in the early 1980s. I was able to inspect the last typewriter ribbon that he used in the last typewriter he owned. A collector who had the original typewriter was kind enough to lend it to me. And you can read the text back off that typewriter ribbon—and you can’t make this stuff up, this is why it’s so wonderful to be able to write history—the last thing that Updike writes with the typewriter is a note to his secretary telling her that he won’t need her typing services because he now has a word processor.

Jun 19, 2016 2:03am

dockering address data

I’ve been on a Docker vision quest for the past week, since Tom Lee made this suggestion in OpenAddresses chat:

hey, slightly crazy idea: @migurski what would you think about defining an API for machine plugins? basically a set of whitelisted scripts that do the sorts of things defined in openaddresses/scripts with configurable frequency (both for the sake of the stack and bc some data sources are only released quarterly).

Most OpenAddresses sources retrieve data directly from government authorities, via file downloads or ESRI feature server APIs. Each week, we re-run the entire collection and crawl for new data. Some data sources, however, are hard to integrate and they require special processing. They might need a session token for download, or they might be released in some special snowflake format. For these sources, we download and convert to plainer cached format, and keep around a script so we can repeat the process.

Docker seemed like it might be a good answer to Tom’s question, for a few reasons. A script might depend on a specific installed version of some tool, or on a similarly particular environment, and Docker can encapsulate that expectation. Using “docker run”, it’s possible to have this environment behave essentially like a shell script, cleaning up after itself upon exit. I am a card-carrying docker skeptic, but this does appear to be right in the sweet spot, without triggering any of the wacko microservices shenanigans that docker people seem excited about.
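The shell-script-like behavior comes from docker run's --rm flag, which deletes the container as soon as its command exits. A minimal sketch of a wrapper; the function name and the example image name are hypothetical, not taken from the OpenAddresses scripts.

```shell
# Hypothetical wrapper: run a source-processing image like a one-off script.
# --rm removes the container when it exits; remaining arguments are passed
# through to the image's command.
run_source() {
  local image="$1"
  shift
  docker run --rm "$image" "$@"
}
```

For example, `run_source oa-example --help` would start a throwaway container from a (hypothetical) oa-example image and leave nothing behind afterward.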

(docker is the future)

Waldo Jaquith followed up to report that it worked well for him in similar situations: “For my purposes, Docker’s sweet spot is periodically running computationally-intensive batch processes in a strictly-defined environments.”

So, I decided to try with three OpenAddresses sources.

Australia

Australia’s G-NAF was my first test subject. It’s distributed via S3 in two big downloadable files, and there is a maintained loader script by Hugh Saalmans. This was easy to make into a completely self-contained Dockerfile even with the Postgres requirement. The main challenge with this one was disk usage. Docker is a total couch hog, but individual containers are given ~8GB of disk space. I didn’t want to mess with defaults, so I learned how to use “docker run --volume” to mount a temporary directory on the host system, and then configured the contained Postgres database to use a tablespace in that directory.
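The --volume approach might look roughly like this: create a temporary host directory, mount it into the container, and remove it afterward. The /scratch mount point, the tablespace name, and the helper name are assumptions for illustration, not details of the actual au script.

```shell
# Hypothetical helper: run a disk-hungry image with scratch space on the host.
run_with_scratch() {
  local image="$1" scratch status
  shift
  scratch=$(mktemp -d)
  # Mount the host directory at /scratch inside the container.
  docker run --rm --volume "${scratch}:/scratch" "$image" "$@"
  status=$?
  rm -rf "$scratch"       # clean up the host side too
  return "$status"
}

# Inside the container, Postgres could then keep its big tables on the mounted
# volume with SQL along these lines (the directory must exist and be owned by
# the postgres user):
#   CREATE TABLESPACE scratch LOCATION '/scratch/pgdata';
#   SET default_tablespace = scratch;
```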

No big challenges with Australia, it’s just a big dataset and takes a lot of time and space to handle.

Here’s the script: openaddresses/scripts/au.

New Zealand

Downloading data from Land Information New Zealand (LINZ) had a twist. The data set must be downloaded manually using an asynchronous web UI. I didn’t want to mess with Selenium here, so running the NZ script requires that a copy of the Street Address data file be provided ahead of time. It’s not as awful as USGS’s occasional “shopping cart” metaphor with emailed links, but it’s still hard to automate. The docker directions for New Zealand explain this manual step.

With the file in place, the process is straightforward. At this point I learned about using “docker save” to stash complete docker images, and started noting that they could be loaded from cache in the README files.
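Stashing and restoring an image uses docker save and docker load. A minimal sketch with hypothetical helper names; the tarball path is whatever you choose.

```shell
# Hypothetical helpers for caching a built image as a tarball and loading it
# back later, e.g. on another machine.
cache_image() {
  docker save --output "$2" "$1"   # $1: image name, $2: tarball path
}
restore_image() {
  docker load --input "$1"         # $1: tarball written by cache_image
}
```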

Here’s the script: openaddresses/scripts/nz.

Tennessee

The Tennessee Property Viewer website is backed by a traditional ESRI feature service, but it requires a token to use. The token is created in Javascript… somehow… so the Tennessee docker directions include a note about using a browser debug console to find it.

The token is passed to docker via the run command, though I could have also used an environment variable.
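Both ways of handing the token to a container are sketched below. The function names, the image name passed as the first argument, and the ESRI_TOKEN variable name are hypothetical; with the environment variable, the script inside the image would read the token by name instead of from its arguments.

```shell
# Option 1: token as an argument to the image's command.
run_tn_with_arg() {
  docker run --rm "$1" "$2"                    # $1: image, $2: token
}

# Option 2: token as an environment variable (ESRI_TOKEN is a made-up name).
run_tn_with_env() {
  docker run --rm --env "ESRI_TOKEN=$2" "$1"   # $1: image, $2: token
}
```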

Here’s the script: openaddresses/scripts/us/tn.

What Now?

These scripts work pretty well. Once the data is processed, we post it to S3 where the normal OpenAddresses weekly update cycle takes over, using the S3 cache URL instead of the authority’s original.
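The upload step could be as simple as one aws s3 cp call. The bucket name, the cache/ prefix, the public-read ACL, and the helper name below are placeholders, not OpenAddresses’ actual cache configuration.

```shell
# Hypothetical helper: copy a processed output file to an S3 cache location.
publish_cache() {
  local file="$1" bucket="$2"
  aws s3 cp "$file" "s3://${bucket}/cache/$(basename "$file")" --acl public-read
}
```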

With some basic direction-following anyone can update data dependencies for OpenAddresses. As the project moves forward and manually-created caches fall out of date, we’re going to be seeing an increasing number of sources in need of manual intervention, and I hope that they’ll be easier to work with in the future.

Jun 6, 2016 11:37pm

blog all dog-eared pages: the best and the brightest

David Halberstam’s 1972 evisceration of the Vietnam War planning process during Kennedy and Johnson’s administrations has been on my list to read for a while, for two reasons. Being born just after the war means that I’ve heard for my whole life about what a mistake it was, but never understood the pre-war support for such a debacle. Also, I’m interested in the organizational problems that might have led to the entanglement in a country fighting for its independence from foreign occupations over centuries. How did anyone think this was a good idea? According to Halberstam, the U.S. government had limited its own critical capacity due to McCarthyist purges after China’s revolution, and Johnson in particular was invested in Vietnam being a small, non-war. In the same way that clipping lines of communication can destroy a conspiracy’s ability to think, the system did not really “think” about this at all. Many similarities to Iraq 40 years later.

The inherent unattractiveness of doubt is an early theme; the people who might have helped Kennedy and later Johnson make a smarter move by preventing rash action did not push to be a part of these decisions. Sometimes it’s smartest to make no move at all, but the go-getters who go get into positions of power carry a natural bias towards action in all situations. Advice from doubters should be sought out. I’m grateful for the past years of Obama’s presidency; he characterized his foreign policy doctrine as “Don’t do stupid shit.”

The locus of control is another theme. Halberstam shows how the civilian leadership thought it had the military under control, and the military leadership thought it had the Vietnam campaign under control. In reality, the civilians ceded the initiative to the generals, and the generals never clearly saw how the North Vietnamese controlled the pace of the war. Using the jungle trails between the North and South and holding the demographic advantage and a strategy with a clear goal, the government in Hanoi decided the pace of the war by choosing to send or not send troops into the south. America was permanently stuck outside the feedback loop.

I would be curious to know if a similar account of the same war is available from the Northern perspective; were the advantages always obvious from the inside? How is this story told from the victor’s point of view?

Here are some passages I liked particularly.

On David Riesman and being a doubter in 1961, page 42:

“You all think you can manage limited wars and that you’re dealing with an elite society which is just waiting for your leadership. It’s not that way at all,” he said. “It’s not an Eastern elite society run for Harvard and the Council on Foreign Relations.”

It was only natural that the intellectuals who questioned the necessity of American purpose did not rush from Cambridge and New Haven to inflict their doubts about American power and goals upon the nation’s policies. So people like Riesman, classic intellectuals, stayed where they were while the new breed of thinkers-doers, half of academe, half the nation’s think tanks and of policy planning, would make the trip, not doubting for a moment the validity of their right to serve, the quality of their experience. They were men who reflect the post-Munich, post-McCarthy pragmatism of the age. One had to stop totalitarianism, and since the only thing the totalitarians understood was force, one had to be willing to use force. They justified each decision to use power by their own conviction that the Communists were worse, which justified our dirty tricks, our toughness.

On setting expectations and General Shoup’s use of maps to convey the impossibility of invading Cuba in 1961, page 66:

When talk about invading Cuba was becoming fashionable, General Shoup did a remarkable display with maps. First he took an overlay of Cuba and placed it over the map of the United States. To everybody’s surprise, Cuba was not a small island along the lines of, say, Long Island at best. It was about 800 miles long and seemed to stretch from New York to Chicago. Then he took another overlay, with a red dot, and placed it over the map of Cuba. “What’s that?” someone asked him. “That, gentlemen, represents the size of the island of Tarawa,” said Shoup, who had won a Medal of Honor there, “and it took us three days and eighteen thousand Marines to take it.”

On being perceived as a dynamic badass in government, relative to the First Asian crisis in Laos, 1961-63, page 88:

It was the classic crisis, the kind that the policy makers of the Kennedy era enjoyed, taking an event and making it greater by their determination to handle it, the attention focused on the White House. During the next two months, officials were photographed briskly walking (almost trotting) as they came and went with their attaché cases, giving their No comment’s, the blending of drama and power, everything made a little bigger and more important by their very touching it. Power and excitement come to Washington. There were intense conferences, great tensions, chances for grace under pressure. Being in on the action. At the first meeting, McNamara forcefully advocated arming half a dozen AT6s (obsolete World War Ⅱ fighter planes) with 100-lb. bombs, and letting them go after the bad Laotians. It was a strong advocacy; the other side had no air power. This we would certainly win; technology and power could do it all. (“When a newcomer enters the field [of foreign policy],” Chester Bowles wrote in a note to himself at the time, “and finds himself confronted by the nuances of international questions he becomes an easy target for the military-CIA-paramilitary-type answers which can be added, subtracted, multiplied or divided…”)

On questioning evidence for the domino theory, page 122:

Later, as their policies floundered in Vietnam, … the real problem was the failure to reexamine the assumptions of the era, particularly in Southeast Asia. There was no real attempt, when the new Administration came in, to analyze Ho Chi Minh’s position in terms of the Vietnamese people and in terms of the larger Communist world, to establish what Diem represented, to determine whether the domino theory was in fact valid. Each time the question of the domino theory was sent to intelligence experts for evaluation, the would back answers which reflected their doubts about its validity, but the highest level of government left the domino theory alone. It was as if, by questioning it, they might have revealed its emptiness, and would then have been forced to act on their new discovery.

On the ridiculousness of the Special Forces, page 123:

All of this helped send the Kennedy Administration into dizzying heights of antiguerilla activity and discussion; instead of looking behind them, the Kennedy people were looking ahead, ready for a new and more subtle kind of conflict. The other side, Rostow’s scavengers of revolution, would soon be met by the new American breed, a romantic group indeed, the U.S. Army Special Forces. They were all uncommon men, extraordinary physical specimens and intellectuals Ph.D.s swinging from trees, speaking Russian and Chinese, eating snake meat and other fauna at night, springing counterambushes on unwary Asian ambushers who had read Mao and Giap, but not Hilsman and Rostow. It was all going to be very exciting, and even better, great gains would be made at little cost.

In October 1961 the entire White House press corps was transported to Fort Bragg to watch a special demonstration put on by Kennedy’s favored Special Forces, and it turned into a real whiz-bang day. There were ambushes, counterambushes and demonstrations in snake-meat eating, all topped off by a Buck Rogers show: a soldier with a rocket on his back who flew over water to land on the other side. It was quite a show, and it was only as they were leaving Fort Bragg that Francis Lara, the Agence France-Presse correspondent who had covered the Indochina War, sidled over to his friend Tom Wicker of the New York Times. “All of this looks very impressive, doesn’t it?” he said. Wicker allowed as how it did. “Funny,” Lara said, “none of it worked for us when we tried it in 1951.”

On consensual hallucinations, shared reality, and some alarming parallels to Bruno Latour’s translation model, page 148:

In 1954, right after Geneva, no one really believed there was such a thing as South Vietnam. … Like water turning into ice, the illusion crystallized and became a reality, not because that which existed in South Vietnam was real, but because it became powerful in men’s minds. Thus, what had never truly existed and was so terribly frail became firm, hard. A real country with a real constitution. An army dressed in fine, tight-fitting uniforms, and officers with lots of medals. A supreme court. A courageous president. Articles were written. “The tough miracle man of Vietnam,” Life called [Diem]. “The bright spot in Asia,” the Saturday Evening Post said.

On the difficulty of containing military plans and the general way it’s hard to get technical and operations groups to relinquish control once granted, page 178:

The Kennedy commitment had changed things in other ways as well. While the President had the illusion that he had held off the military, the reality was that he had let them in. … Once activated, even in a small way at first, they would soon dominate the play. Their particular power with the Hill and with hawkish journalists, their stronger hold on patriotic-machismo arguments (in decision making they proposed the manhood positions, their opponents the softer, or sissy, positions), their particular certitude, made them far more powerful players than men raising doubts. The illusion would always be of civilian control; the reality would be of a relentlessly growing military domination of policy, intelligence, aims, objectives and means, with the civilians, the very ones who thought they could control the military, conceding step by step, without even knowing they were losing.

On being stuck in a trap of our own making by 1964, page 304:

They were rational men, that above all; they were not ideologues. Ideologues are predictable and they were not, so the idea that those intelligent, rational, cultured, civilized men had been caught in a terrible trap by 1964 and that they spent an entire year letting the trap grow tighter was unacceptable; they would have been the first to deny it. If someone in those days had called them aside and suggested that they, all good rational men, were tied to a policy of deep irrationality, layer and layer of clear rationality based upon several great false assumptions and buttressed by a deeply dishonest reporting system which created a totally false data bank, they would have lashed out sharply that they did indeed know where they were going.

On the timing of Robert Johnson’s 1964 study on bombing effectiveness (“we would face the problem of finding a graceful way out of the action”), page 358:

Similarly, the massive and significant study was pushed aside because it had come out at the wrong time. A study has to be published at the right moment, when people are debating an issue and about to make a decision; then and only then will they read a major paper, otherwise they are too pressed for time. Therefore, when the long-delayed decisions on the bombing were made a year later, the principals did not go back to the Bob Johnson paper, because new things had happened, one did not go back to an old paper.

Finally and perhaps most important, there was no one to fight for it, to force it into the play, to make the other principals come to terms with it. Rostow himself could not have disagreed more with the paper; it challenged every one of his main theses, his almost singular and simplistic belief in bombing and what it could accomplish.

On George Ball’s 1964 case for the doves and commitment to false hope, page 496:

Bothered by the direction of the war, and by the attitudes he found around him in the post-Tonkin fall of 1964, and knowing that terrible decisions were coming up, Ball began to turn his attention to the subject of Vietnam. He knew where the dissenters were at State, and he began to put together his own network, people with expertise on Indochina and Asia who had been part of the apparatus Harriman had built, men like Allen Whiting, a China watcher at INR; these were men whose own work was being rejected or simply ignored by their superiors. Above all, Ball was trusting his own instincts on Indochina. The fact that the others were all headed the other way did not bother him; he was not that much in awe of them, anyway.

Since Ball had not been in on any of the earlier decision making, he was in no way committed to any false hopes and self-justification; in addition, since he had not really taken part in the turnaround against Diem, he was in no way tainted in Johnson’s eyes.

On slippery slopes for “our boys,” page 538:

Slipping in the first troops was an adjustment, an asterisk really, to a decision they had made principally to avoid sending troops, but of course there had to be protection for the airplanes, which no one had talked about at any length during the bombing discussion, that if you bombed you needed airfields, and if you had airfields you needed troops to protect the airfields, and the ARVN wasn’t good enough. Nor had anyone pointed out that troops beget troops: that a regiment is very small, a regiment cannot protect itself. Even as they were bombing they were preparing for the arrival of our boys, which of course would mean more boys to protect our boys. The rationale would provide its own rhythm of escalation, and its growth would make William Westmoreland almost overnight a major player, if not the major player. This rationale weighed so heavily on the minds of the principals that three years later, in 1968, when the new thrust of part of the bureaucracy was to end or limit the bombing and when Lyndon Johnson was willing to remove himself from running again, he was nevertheless transfixed by the idea of protecting our boys.

On Robert McNamara making shit up, page 581:

Soon they would lose control, he said; soon we would be sending 200,000 to 250,000 men there. Then they would tear into him, McNamara the leader: It’s dirty pool; for Christ’s sake, George, we’re not talking about anything like that, no one’s talking about that many people, we’re talking about a dozen, maybe a few more maneuver battalions.

Poor George had no counterfigures; he would talk in vague doubts, lacking these figures, and leave the meetings occasionally depressed and annoyed. Why did McNamara have such good figures? Why did McNamara have such good staff work and Ball such poor staff work? The next day Ball would angrily dispatch his staff to come up with the figures, to find out how McNamara had gotten them, and the staff would burrow away and occasionally find that one of the reasons that Ball did not have the comparable figures was that they did not always exist. McNamara had invented them, he dissembled even within the bureaucracy, though, of course, always for a good cause. It was part of his sense of service. He believed in what he did, and thus the morality of it was assured, and everything else fell into place.

On the political need to keep decisions soft and vague, page 593:

If there were no decisions which were crystallized and hard, then they could not leak, and if they could not leak, then the opposition could not point to them. Which was why he was not about to call up the reserves, because the use of the reserves would blow it all. It would be self-evident that we were really going to war, and that we would in fact have to pay a price. Which went against all Administration planning; this would be a war without a price, a silent, politically invisible war.

On asking for poor service, page 595:

Six years later McGeorge Bundy, whose job it was to ask questions for a President who could not always ask the right questions himself, would go before the Council on Foreign Relations and make a startling admission about the mission and the lack of precise objectives. The Administration, Bundy recounted, did not tell the military what to do and how to do it; there was in his words a “premium put on imprecision,” and the political and military leaders did not speak candidly to each other. In fact, if the military and political leaders had been totally candid with each other in 1965 about the length and cost of the war instead of coming to a consensus, as Johnson wanted, there would have been vast and perhaps unbridgeable differences, Bundy said. It was a startling admission, because it was specifically Bundy’s job to make sure that differences like these did not exist. They existed, of course, not because they could not be uncovered but because it was a deliberate policy not to surface with real figures and real estimates which might show that they were headed toward a real war. The men around Johnson served him poorly, but they served him poorly because he wanted them to.

May 27, 2016 4:20pm

five-minute geocoder for openaddresses

The OpenAddresses project recently crossed 250 million worldwide address points with the addition of countrywide data for Australia. Data from OA is used by Mapbox, the Consumer Financial Protection Bureau, and my company, Mapzen.

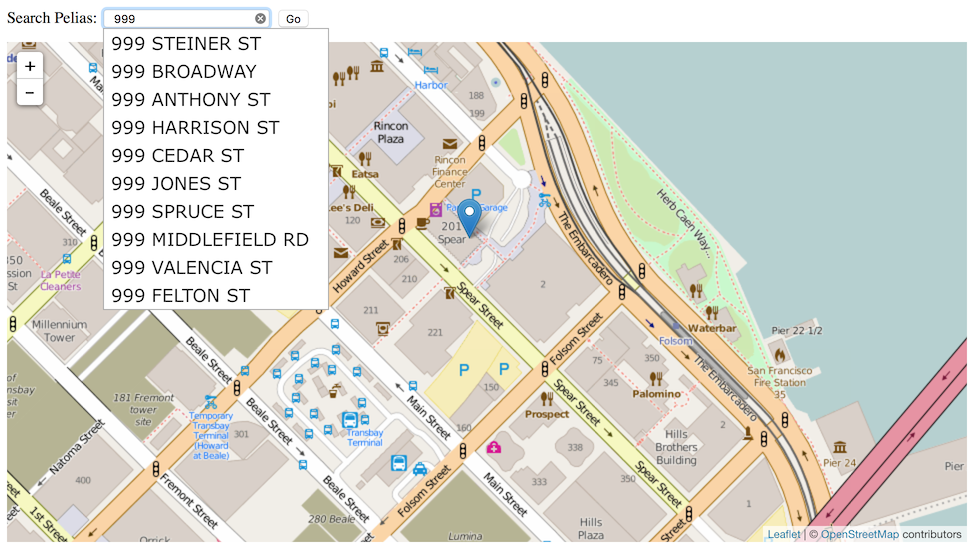

Now, you can use OpenAddresses in a high-quality geocoder yourself. “Geocoding” is the process of transforming input text, such as an address or the name of a place, into a geographic location on the earth’s surface. Every time you search for a destination on your phone, you’re geocoding. Mapzen’s Search service uses an open source server we call Pelias, and if you’re using the popular Ubuntu Linux operating system, you can get it set up and serving addresses in just a few minutes.

Start with a clean server running a current version of Ubuntu LTS (long-term support); either 14.04 or 16.04 will work. Amazon has readymade Ubuntu images available on EC2, or a local copy running under Virtualbox will do for testing. Both the address import process and the Elasticsearch index are hungry for lots of memory, so pick a server with 4-8GB of memory to prevent failures.

Next, install the Pelias software using instructions from OpenAddresses:

# Tell Ubuntu where to find packages:

add-apt-repository ppa:openaddresses/geocoder -y

wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | apt-key add -

echo "deb http://packages.elastic.co/elasticsearch/1.7/debian stable main" | tee -a /etc/apt/sources.list.d/elasticsearch-1.7.list

# Install Pelias and dependencies:

apt-get update && apt-get install pelias

This installs the Pelias geocoder, the OpenAddresses importer, a simple web-based map search interface, and the underlying Elasticsearch index.

After installation, you will need to import data. Visit results.openaddresses.io and pick a processed zip file to download. Start small with a city like Berkeley, CA to test the process. Download and unzip it into the directory `/var/tmp/openaddresses`, where Pelias expects to find CSV files, then run `pelias-openaddresses-import` to index the data.

# Get a sample file of address data:

mkdir -p /var/tmp/openaddresses && cd /var/tmp/openaddresses

curl -OL https://results.openaddresses.io/latest/run/us/ca/berkeley.zip

apt-get install unzip && unzip berkeley.zip

# Index the addresses:

pelias-openaddresses-import

That’s it!

Pelias includes many neat features out of the box, such as reverse geocoding and autocomplete. Read the docs on Github.
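Once the import finishes, the geocoder answers over HTTP. The functions below are a minimal sketch that builds request URLs for the Pelias search, reverse, and autocomplete endpoints. They assume the API listens at http://localhost:3100, the usual Pelias default; the packaged install may expose it elsewhere, so check its configuration. The function names are hypothetical, and only spaces are percent-encoded, so query text containing characters such as & or # needs fuller encoding.

```shell
#!/bin/sh
# Assumed base URL of the Pelias API; override by exporting PELIAS_API.
PELIAS_API=${PELIAS_API:-http://localhost:3100}

# Minimal encoding: replace spaces with %20 (other characters pass through).
urlencode_spaces() {
  printf '%s' "$1" | sed 's/ /%20/g'
}

# Forward geocoding: free text in, candidate locations out.
search_url() {
  printf '%s/v1/search?text=%s\n' "$PELIAS_API" "$(urlencode_spaces "$1")"
}

# Reverse geocoding: latitude and longitude in, nearby addresses out.
reverse_url() {
  printf '%s/v1/reverse?point.lat=%s&point.lon=%s\n' "$PELIAS_API" "$1" "$2"
}

# Autocomplete: partial text in, as-you-type suggestions out.
autocomplete_url() {
  printf '%s/v1/autocomplete?text=%s\n' "$PELIAS_API" "$(urlencode_spaces "$1")"
}
```

With the Berkeley data loaded, something like `curl -s "$(search_url '2180 Milvia St')"` should return a GeoJSON collection of matching features.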

The Mapzen Search service includes some additional features that aren’t yet covered here. For example, to include administrative areas like cities or states in searches, it’s necessary to do an admin lookup while importing, and to include data from Who’s On First. I’m also interested to learn more about tuning Elasticsearch for smaller-sized servers with less system RAM. It should be possible to run a geocoder with 1-2GB of memory, and Elasticsearch may require adjustments to make this possible.
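One plausible starting point for smaller servers is the Elasticsearch heap size. The Elasticsearch 1.x Debian package reads `ES_HEAP_SIZE` from /etc/default/elasticsearch, and Elastic’s general guidance is to give the heap about half the machine’s RAM. The sketch below computes that value from MemTotal; the function name is hypothetical, and whether half is right while the Node-based importer is also running on a 1-2GB machine is untested.

```shell
#!/bin/sh
# Print an ES_HEAP_SIZE line sized at half of total RAM.
# Argument: total memory in kB; defaults to MemTotal from /proc/meminfo.
es_heap_setting() {
  kb=${1:-$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)}
  # kB to MB is /1024; halving makes it /2048 overall (integer division).
  echo "ES_HEAP_SIZE=$(( kb / 2048 ))m"
}
```

On the server, `es_heap_setting | tee -a /etc/default/elasticsearch` followed by a restart of the elasticsearch service would apply it.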

Links to more information about geocoding with OpenAddresses:

May 2, 2016 12:40am

notes on debian packaging for ubuntu

I’ve devoted some time over the past month to learning how to create distribution packages for Ubuntu, using the Debian packaging system. This has been a longstanding interest for me since Dane Springmeyer and Robert Coup created a Personal Package Archive (PPA) to easily and quickly distribute reliable versions of Mapnik. It’s become more important lately as programming languages have developed their own mutually incompatible packaging systems like npm, RubyGems, or PyPI, while developer interest has veered toward container-style technology such as Vagrant or Docker. My immediate interest comes from an OpenAddresses question from Waldo Jaquith: what would a packaged OA geocoder look like? I’ve watched numerous software projects create Vagrantfile or Dockerfile scripts before, only to let those fall out of date and eventually become a source of unanswerable help requests. OS-level packages offer a stable, fully enclosed download with no external dependencies on 3rd party hosting services.

The source for the package I’ve generated can be found under openaddresses/pelias-api-ubuntu-xenial on Github. The resulting package is published under ~openaddresses on Launchpad.

What does it take to prepare a package?

The staff at Launchpad, particularly Colin Watson, have been helpful in answering my questions as I moved from getting a tiny “hello world” package onto a PPA to wrapping up Mapzen’s worldwide geocoder software, Pelias.

I started with a relatively simple tutorial from Ask Ubuntu, where a community member steps you through each part of wrapping a complete Debian package from a single shell script source. This mostly worked, but there are a few tricks along the way. The various required files are often shown inside a directory called “DEBIAN”, but I’ve found that it needs to be lower-case “debian” in order to work with the various preparation scripts. The control file, debian/control, is the most important one, and has a set of required fields arranged in stanzas that must conform to a particular pattern. My first Launchpad question addressed a series of mistakes I was making.

- My final debian/control file: https://github.com/openaddresses/pelias-api-ubuntu-xenial/blob/2.2.0-ubuntu1-xenial4/debian/control

- Introduction to control files: https://www.debian.org/doc/manuals/maint-guide/dreq.en.html#control

- Deeper documentation for control file fields: https://www.debian.org/doc/debian-policy/ch-controlfields.html
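To show the stanza pattern, here is a sketch that writes a minimal debian/control for a one-script package like the “hellodeb” test package mentioned below. The field values (section, maintainer, Standards-Version) are illustrative placeholders, not copied from the Pelias package. The first stanza describes the source package, a single blank line separates it from the binary package stanza, and extended description lines must begin with a space.

```shell
#!/bin/sh
set -e
mkdir -p debian

# Quoted 'EOF' keeps ${misc:Depends} literal for debhelper to substitute later.
cat > debian/control <<'EOF'
Source: hellodeb
Section: utils
Priority: optional
Maintainer: Jane Doe <jane@example.com>
Build-Depends: debhelper (>= 9)
Standards-Version: 3.9.7

Package: hellodeb
Architecture: all
Depends: ${misc:Depends}
Description: hypothetical hello-world package
 A one-line shell script, packaged to practice the Debian workflow.
EOF

# debhelper of this era reads its compatibility level from debian/compat.
echo 9 > debian/compat
```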

The file debian/changelog was the second challenge. It needs to conform to an exact syntax, and it’s easiest to have the utility dch (available in the devscripts package) do it for you. You need to provide a meaningful version number, release target, notes, and signature for this file to work. The release target is usually a Debian or Ubuntu codename, in this case “xenial” for Ubuntu’s 16.04 Xenial Xerus release. The version number is also tricky; it’s generally assumed that a package maintainer is downstream from the original developer, so the version number will be a combination of upstream version and downstream release target. In my case that is Pelias 2.2.0, then “ubuntu1”, then “xenial” with a build number, as in 2.2.0-ubuntu1~xenial5.

- My final debian/changelog file: https://github.com/openaddresses/pelias-api-ubuntu-xenial/blob/2.2.0-ubuntu1-xenial4/debian/changelog

- An explanation of version numbering for Ubuntu: http://www.ducea.com/2006/06/17/ubuntu-package-version-naming-explanation/
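The exact syntax is easier to see than to describe. This sketch writes a first changelog entry by hand for the hypothetical hellodeb package; the name, email, and date are placeholders. Note the two leading spaces before the bullet, the single space before the `--` signature, and the two spaces between the email address and the RFC 2822 date. The version follows the upstream-ubuntuN~codenameM pattern described above.

```shell
#!/bin/sh
set -e
mkdir -p debian

# Hypothetical values; real ones come from your project and GPG identity.
UPSTREAM=1.0
VERSION="${UPSTREAM}-ubuntu1~xenial1"
DIST=xenial

# Unquoted EOF so the version and distribution variables are expanded.
cat > debian/changelog <<EOF
hellodeb (${VERSION}) ${DIST}; urgency=medium

  * Initial release.

 -- Jane Doe <jane@example.com>  Mon, 02 May 2016 00:00:00 +0000
EOF
```

With devscripts installed, roughly the same entry comes from `dch --create --package hellodeb --newversion 1.0-ubuntu1~xenial1 --distribution xenial "Initial release."`, which takes the signature from the DEBFULLNAME and DEBEMAIL environment variables.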

A debian/rules file is also required, but it seems to be sufficient to use a short default file that calls out to the Debian helper script dh.

- My debian/rules file: https://github.com/openaddresses/pelias-api-ubuntu-xenial/blob/2.2.0-ubuntu1-xenial4/debian/rules

- Another sample rules file: https://www.debian.org/doc/manuals/maint-guide/dreq.en.html#defaultrules
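The short default rules file is a Makefile whose single catch-all target hands every step to dh. Because make requires a literal tab before the recipe line, a sketch that writes it with printf avoids editors silently converting the tab to spaces.

```shell
#!/bin/sh
set -e
mkdir -p debian

# %% prints a single %, giving the pattern rule "%:";
# \t writes the tab that make requires before the "dh $@" recipe.
printf '#!/usr/bin/make -f\n%%:\n\tdh $@\n' > debian/rules

# The build tools execute debian/rules directly, so it must be executable.
chmod +x debian/rules
```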

I have not been able to determine how to test my debian directory locally, but I have found that the emails sent from Launchpad after using dput to post versions of my packages can be helpful when debugging. I tested with a simple package called “hellodeb”; here is a complete listing of each attempt I made to publish this package in my “hello” PPA as I learned the process.

My second Launchpad question concerned the contents of the package: why wasn’t anything being installed? The various Debian helper scripts try to do a lot of work for you, and as a newcomer it’s sometimes hard to guess where it’s being helpful, and where it’s subtly chastising you for doing the wrong thing. For example, after I determined that including an “install” make target in the default project Makefile that wrote files to $DESTDIR was the way to create output, it turned out that my attempt to install under /usr/local was being thwarted by dh_usrlocal, a script which enforces the Linux filesystem standard convention that only users should write files to /usr/local, never OS-level packages. In the end, while it’s possible to simply list everything in debian/install, it seems better to do that work in a more central and easy-to-find Makefile.

- My Makefile, with a dummy “all” target and a critical “install” target writing to $DESTDIR: https://github.com/openaddresses/pelias-api-ubuntu-xenial/blob/2.2.0-ubuntu1-xenial4/Makefile#L11-L13
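The dummy “all” plus install-to-$DESTDIR pattern can be sketched for the hypothetical hellodeb project: one script installed under /usr/share rather than /usr/local, which keeps dh_usrlocal out of the way. The layout and paths are assumptions for illustration, not the Pelias Makefile.

```shell
#!/bin/sh
set -e
# Hypothetical payload: a single script.
printf '#!/bin/sh\necho hello\n' > hello.sh

# A dummy "all" target and an "install" target that writes under $(DESTDIR).
# Recipe lines need a leading tab, so printf writes \t explicitly.
printf 'all:\n\ninstall:\n\tmkdir -p $(DESTDIR)/usr/share/hellodeb\n\tinstall -m 755 hello.sh $(DESTDIR)/usr/share/hellodeb/hello.sh\n\n.PHONY: all install\n' > Makefile
```

Running `make install DESTDIR="$PWD/staging"` by hand should leave the script at staging/usr/share/hellodeb/hello.sh. During a package build, dh_auto_install runs the same target with DESTDIR pointed at debian/hellodeb when there is only one binary package.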

Finally, I learned through trial-and-error that the Launchpad build system prevents network access. Since Pelias is written in Node, it is necessary to send the complete code along with all dependencies under node_modules to the build system. This ensures that builds are more predictable and reliable, and circumvents many of the SNAFU situations that can result from dynamic build systems.

- Complete code for Pelias API with all dependencies is included in our package: https://github.com/openaddresses/pelias-api-ubuntu-xenial/tree/2.2.0-ubuntu1-xenial4/pelias-api

- Makefile includes some rules on how to regenerate the contents of pelias-api anyway: https://github.com/openaddresses/pelias-api-ubuntu-xenial/blob/2.2.0-ubuntu1-xenial4/Makefile#L3-L9

- I had to make some maintainer tweaks to one package that made incompatible assumptions about where it’s allowed to touch the filesystem: https://github.com/openaddresses/pelias-api-ubuntu-xenial/blob/2.2.0-ubuntu1-xenial4/patches/cluster2-pids-logs.patch
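Since the builder cannot fetch anything, it can help to fail fast before building a source package. This hypothetical check only confirms that a node_modules directory sits next to the code; it does not verify that the dependency tree is complete. The default directory name, pelias-api, matches the repository layout linked above.

```shell
#!/bin/sh
# Return non-zero (with a hint on stderr) if dependencies are not vendored.
check_vendored() {
  dir=${1:-pelias-api}
  if [ ! -d "$dir/node_modules" ]; then
    echo "missing $dir/node_modules: run 'npm install' in $dir before debuild -S" >&2
    return 1
  fi
}
```

Calling `check_vendored && debuild -S` would stop before producing a source package that Launchpad cannot build.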

Rolling a new release is a four-step process:

- Create a new entry in the debian/changelog file using dch, which will determine the version number.

- From inside the project directory, run debuild -k'8CBDE645' -S (“8CBDE645” is my GPG key ID, used by Launchpad to be sure that I’m me) to create a set of files with names like pelias-api_2.2.0-ubuntu1~xenial5_source.*.

- From outside the project directory, run dput ppa:migurski/hello "pelias-api_2.2.0-ubuntu1~xenial5_source.changes" to push the new package version to Launchpad.

- Wait.
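The first three steps can be wrapped in one shell function, sketched below and meant to be run from inside the project directory. Since debuild writes its output to the parent directory, dput is pointed at ../ instead of changing directories. The key ID and PPA default to the values used above; the function name, the changelog message, and the RUN dry-run switch are hypothetical additions.

```shell
#!/bin/sh
# Usage: release VERSION   (set RUN=echo to print commands instead of running them)
release() {
  version=${1:?usage: release VERSION}
  key=${KEY:-8CBDE645}
  ppa=${PPA:-ppa:migurski/hello}
  run=${RUN:-}
  # 1. New changelog entry; this sets the package version.
  $run dch --newversion "$version" --distribution xenial "New release." &&
  # 2. Build and sign the source package (files land in the parent directory).
  $run debuild -k"$key" -S &&
  # 3. Upload the signed .changes file to Launchpad.
  $run dput "$ppa" "../pelias-api_${version}_source.changes"
  # 4. Waiting for the Launchpad build is left to you.
}
```

For example, `RUN=echo release 2.2.0-ubuntu1~xenial5` prints the three commands without touching anything.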

Now, we’re at a point where a possible Dockerfile is much simpler.

Apr 28, 2016 1:17am

guyana trip report

Guyana is about to celebrate its 50th anniversary of independence from Great Britain. It’s not a big country, but it’s two-thirds rain forest and contains some of the oldest exposed rocks on earth, aged about two billion years. In the inland south of the country, the Wapichan and Macushi Amerindian tribes are asserting their place in the modern world using a combination of political, cultural, and technological means to map their territory. I just spent seven days there observing the material support work of Digital Democracy (Dd), where I’m a board member.

Saddle Mountain is culturally and spiritually significant to the tribe

Dd’s work in Guyana is arranged with the district council of elected leaders, and focuses on a group of environmental monitors covering this enormous 3,000 square mile savannah and the 7,000 square mile forest to the east. The monitors do two kinds of work: they map static sites using OpenStreetMap and Esri tools to compile a replacement for decades-old low-accuracy official maps, and they record evidence of economic/environmental abuses such as cross-border cattle rustling from Brazil to the west and destructive gold mining in the hilly forest rivers to the east. There are just a handful of monitors covering this area: Ezra, Tessa, Gavin, Timothy, Angelbert, and Phillip. They cover the entire Rupununi savannah, and their work typically centers on the northern village of Shulinab where we were guests of Nicholas and Faye Fredericks. Nick is the elected head of the village; he recently accepted the Equator Prize, awarded by the UN Development Program to indigenous organizations working on climate change issues.

The guest house where we stayed, newly-built to encourage visitors and possibly tourism

The monitoring work uses drones and phones to collect aerial imagery, and is definitely the more charismatic of the two efforts. Gold mining operations along the rivers in the hills alter the natural habitats of economically-important species, particularly through mercury dumping that can poison un-mined sections of downstream river. Dd’s Gregor MacLennan has been visiting Guyana for the past ten years, and has experimented with a variety of imagery techniques to create a temporal portrait of mining operations along the rivers in the hills. Due to persistent cloud cover, satellite imagery is only intermittently useful, while cheap, low-flying drones can be used in more kinds of weather. The current technology challenge centers on image-stitching; the best results we’ve achieved have come from the expensive Pix4D package, which demands an active internet connection for piracy control purposes. QZ recently wrote an excellent article about the monitoring work.

Dismantled Parrot quadcopter

Border monitoring relies on data collection with OpenDataKit (ODK) and smartphones. The smartphones work well in the savannah, especially now that the monitors have begun using motorcycle-to-USB charging kits to keep batteries powered. ODK is just okay; it’s a clumsy system, likes to have a central server for reporting and collating data, and seems to be designed for an institutional use case that doesn’t match the need here.

Ron, Ezra, and the monitor team huddling around a smartphone demonstration of new ODK input forms

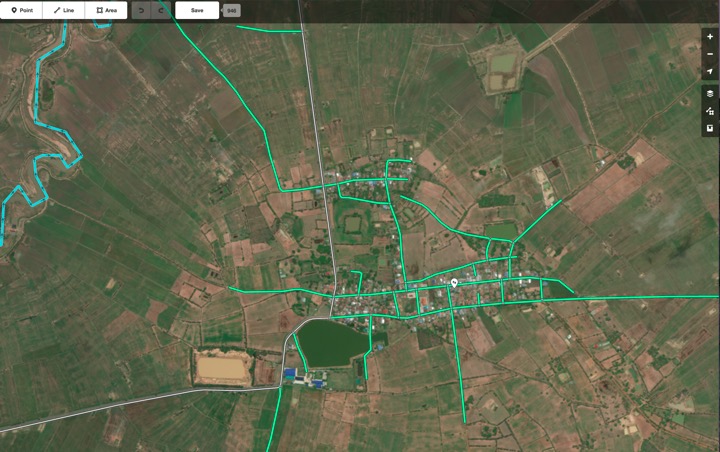

The static mapping effort is less exciting for outsiders, but more directly useful for the community. ODK has been in use here as well, but we’re working on some new concepts in peer-to-peer OSM editing using distributed logs with James Halliday a.k.a. Substack. James has previously been involved in Max Ogden’s Dat Project, he’s the author of a bunch of popular Node things including Browserify, and p2p architectures are his current jam. This part of the work, dubbed Peermaps, is super promising, and implements a number of concepts borrowed from Git and other distributed data tools. Gregor and James have submitted a talk proposal to State Of The Map U.S. The end goal here is a map of the territory, something the group has been collecting in Esri ArcMap format for the past few years. We saw a wall-sized laminated version of the map, and several area schools have requested print versions for use in classrooms. The data features accurate river lines, and numerous points of interest for cultural and natural features significant to the community. Mapping these features allows the community to support their territorial claims in discussions with the national government more effectively than previous narrative descriptions.

Tessa, considered the most computer-savvy of the monitors, explained the territory map for me

Probably the most surprising thing about this trip for me was the sophistication of the political organization behind this effort. I had some “white savior complex” worries before I showed up because it’s often hard to tell from secondhand stories who’s really pushing the work, and whether partners are passive or active. In Guyana, the local community is organized into a district council of elected leaders (each called a Toshao) from the seventeen villages, strategy is determined through deliberation, and decisions are made and supported consistently. I spoke with people in Georgetown about the effort and word of Nick and Faye’s energy and creativity here has definitely gotten around. I was honored to be their guest. Dd provides material support, but credit for the recognition of the importance of data collection and the decision to support it through the monitoring program belongs to the community.

Angelbert, Ezra, Timothy, and the monitor team working on the data collection kit; James is on the far right

Ezra, one of the monitors, said at one point that “photos justify our land.” This phrase stuck with me.

Feb 15, 2016 6:27am

openaddresses population comparison

Lots going on with OpenAddresses since I last wrote about it in July. The continuous integration service is alive and well, we have downloadable collections of all addresses, the dot map is updating regularly, and the full collection has ticked over 220 million records.

Last year, Tom Lee (of Sunlight Foundation and Mapbox) made an offhand estimate of two people per address, which has had me thinking about completeness and coverage estimation. At that number, we’re at approximately 3% of global addresses. Fortunately, there are a few gridded population datasets with worldwide coverage that make it possible to estimate coverage more precisely:

- NASA Earthdata Gridded Population of the World (GPW) offers a 30 arc-second raster product.

- Global Rural-Urban Mapping Project (GRUMP) offers additional processing over GPW.

- Yale Geographically Based Economic Data (G-Econ) offers a 1° gridded spreadsheet data product, broken down by country.

G-Econ is the smallest data set, and as a simple spreadsheet it’s the easiest to get started with. It has estimates through 2005, which is close enough for an experiment, and a number of interesting data columns beyond simple population counts, covering economic output, soil type, availability of water, and climate.

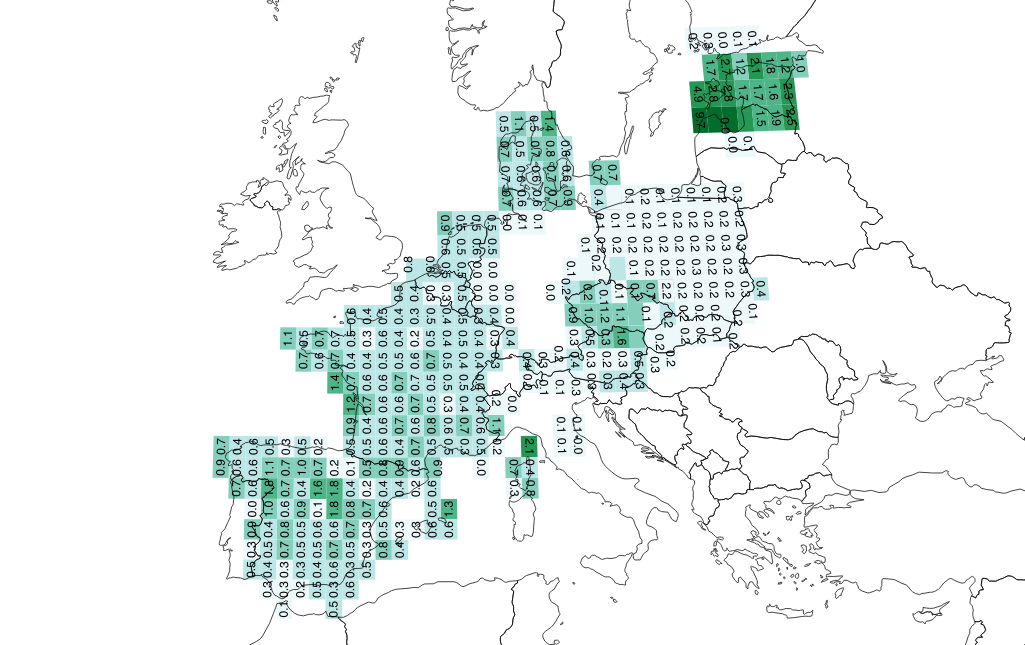

Using G-Econ, it’s possible to generate address/person comparisons for places where OpenAddresses has data, such as Europe:

From this image, it looks like Tom’s estimate of 0.5 addresses per person holds for much of France, Spain, and Denmark, but not at all for Poland where it’s lower at 0.2 (five people per address). The values for Estonia are strange, with almost two addresses per person in certain grid squares.

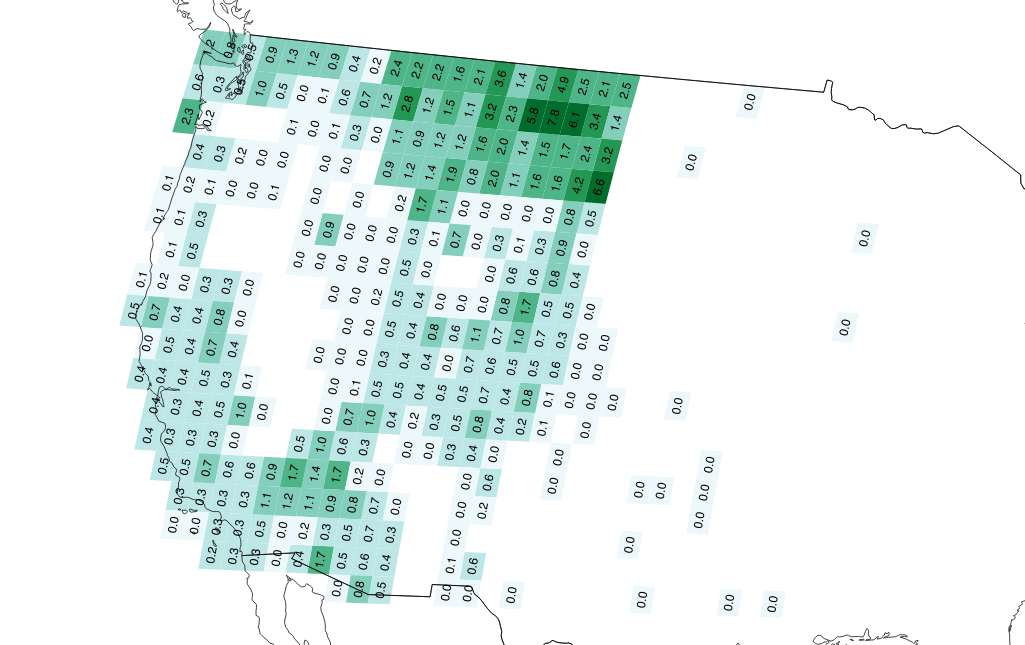

In the Western U.S., we can see the effect of overcounting Montana addresses via overlapping statewide and county sources:

I’m just getting started on this analysis, and hope to create fresh data on a regular basis as we generate scheduled downloads of OA data. I’d like to understand more about the relationships between population density and address availability, and potentially switch to the more complex and current GPWv4 dataset if this is interesting.
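The per-cell comparison boils down to binning address points into 1° cells and dividing by each cell’s population. This awk sketch assumes simplified inputs: OpenAddresses CSVs with LON and LAT as their first two columns and a header row, and a hypothetical lat,lon,pop CSV keyed by the south-west corner of each 1° cell, which would first have to be extracted from the G-Econ spreadsheet. Only the first two address columns are read, so commas inside later quoted fields do not matter.

```shell
#!/bin/sh
# Usage: addresses_per_person population.csv addresses.csv
# Prints lat,lon,ratio for every cell that has both addresses and population.
addresses_per_person() {
  awk -F, '
    # True floor, since int() truncates toward zero for negative coordinates.
    function floor(x) { return (x == int(x) || x >= 0) ? int(x) : int(x) - 1 }
    # First file: population per cell, skipping its header row.
    FNR == NR { if (FNR > 1) pop[$1 "," $2] = $3; next }
    # Second file: count address points per cell, skipping its header row.
    FNR > 1 { count[floor($2) "," floor($1)]++ }
    END {
      for (cell in count)
        if (pop[cell] > 0) printf "%s,%.2f\n", cell, count[cell] / pop[cell]
    }
  ' "$1" "$2"
}
```

A ratio near 0.5 matches the two-people-per-address estimate, while values well above 1 point to problems like the overlapping Montana sources.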

For now, check out these two things:

Feb 1, 2016 4:59am

blog all oft-played tracks VII

This music:

- made its way to iTunes in 2015,

- and got listened to a lot.

I’ve made these for 2014, 2013, 2012, 2011, 2010, and 2009. Also: everything as an .m3u playlist.

1. Grimes: Flesh without Blood

2. Trust: Shoom

3. Klatsch!: God Save The Queer

4. Zed’s Dead: Lost You

5. The Communards: Disenchanted

6. Delia Gonzalez & Gavin Russom: Relevee (Carl Craig Remix)

7. The Smiths: Rubber Ring

8. Hot Chip: Need You Now

9. The All Seeing I: 1st Man In Space

10. Missy Elliott: WTF (Where They From) ft. Pharrell Williams
