tecznotes

Michal Migurski's notebook, listening post, and soapbox. Subscribe to this blog.

Check out the rest of my site as well.

Dec 30, 2014 7:05am

2015 fellowship reader

The 2015 Code for America fellows show up next week from all over. In 2013, CfA published the book Beyond Transparency. I heard from last year’s class that many had read it cover-to-cover before starting. So, we gathered-I-mean-curated a pile of essays and blog posts on design, culture, and code, assembled them into a 400 page reader, and shipped one to each of our 24 incoming fellows.

We included gems like Leisa Reichelt’s Help Joy Help You, Dan Milstein’s Coding, Fast and Slow, the GDS banned words list, timeless design classic Deep Inside Taco Bell’s Doritos Locos Taco, and dozens of other bits of propaganda that we thought might come in handy this year.

We assembled the materials via Github and delivered scrubbed HTML files. Interior design was handled by Noel Callego via oDesk, and I made the cover. Lulu.com did the printing; overall turnaround was a little over three weeks for design, printing, and shipping.

Here are some photos by Frances:

Dec 9, 2014 7:28am

bike ten: schwinn touring

Between the yellow cargo bike and the green commuter bike, I have a mid-90s Trek that I use for errands around town. It’s not very interesting, so it’s time to replace it with a new project like the others.

Earlier in the fall, I picked up this 1985 Schwinn Tour De Luxe frame:

Here it is in its original glory, minus the Surly fork and Box Dog sticker. I’m borrowing a page from the religious fervor for agile/lean development process at work and building this one up starting with the pile of bike parts I already have in the garage.

I had to pick up the stem, front wheel, brake levers, and backwards-mounted bullhorn handlebars. Everything else is from other bike projects. I’ve started riding it a bit this week, and I’m really happy with the stiff frame and upright riding position. I did find one problem with the rear brake bosses: one of them is slightly loose, so I’m using a caliper brake instead of a cantilever until I can get a welder to help.

The back wheel is clearly about to fall apart. I want to replace it with an internally-geared hub, probably one of the Sturmey-Archer 5-speeds that can fit in the 126mm of rear spacing.

Dec 3, 2014 5:37am

more open address machine

I spent a substantial portion of Thanksgiving break working on Openaddresses Machine and publishing new data to data.openaddresses.io. Introducing any kind of reliable automation to a process like this is going to be a bumpy ride.

I like Ivan Sagalaev’s take:

Ever since I made an automatic publishing infrastructure for highlight.js releases… there wasn't a single time when it really worked automatically as planned!

There was always something: directory structure changes that require updates to the automation tool itself, botched release tagging, out of date dependencies on the server, our CDN partners having their own bugs with automatic updates, etc. … You can't really automate anything. You just shift maintenance from your thing to your automation tools. But! It still makes sense because by introducing automation you can do more complex things at the next level and keep maintenance essentially constant.

So that’s where the non-daylight hours of my holiday weekend went: shifting maintenance from the thing to the automation tool so OA can do more complex things. Previously, I was running the OA data process by hand in several steps:

- Start up a new EC2 server, stepping through the console wizard.

- Clone the machine code to the new server.

- Run chef to install all the pieces.

- Run openaddr-process and wait until it completes.

- Kill the server.

I’ve introduced a new script, openaddr-ec2-run, that pulls the steps above into just one, and learned a bunch of annoying things along the way.

On Monday, I encountered the excitement of Ruby dependency hell (“either you or the maintainer of a gem you depend on will fuck up the dependencies at some point”) for the first time when Opscode released ohai 7.6.0 and ruined a bunch of people’s days. Running an automation process that relies on external services like RubyGems or NPM can be a risky business, but on balance I prefer this type of risk to the delaying strategy of virtual images, Docker, and friends. It’s a way to keep maintenance constant as Ivan says. Opscode fixed the problem, I removed my workaround, and only my already-frayed trust in the Ruby ecosystem was harmed.

Today, I encountered a set of finicky NPM issues connected to machine’s use of EC2’s user data shell scripts. iconv, or really node-gyp I guess, really wants HOME to be present in the environment variables and will not build if it’s not found. Fixing this took a bit of debugging with env, and I discovered some more Ruby derp along the way:

This error happens because { } has two different meanings in Ruby: Hash value expressions and method blocks. If a procedure is called in poetry mode (no parens) then there is an ambiguity if the parser encounters a { after a method name.

“Poetry mode” is a thing in Ruby, and it will fuck your shit up because Matz didn’t read PEP 20:

There should be one - and preferably only one - obvious way to do it.

Anyway.
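Back to the HOME problem for a second: the fix is small. This is a minimal Python sketch of the idea, not the actual machine code. It copies the environment, fills in HOME only when it is missing or empty, and hands the result to whatever runs the build. The `/root` default and the `npm install` command are assumptions for illustration; the function names are made up.

```python
import os
import subprocess

def env_with_home(environ=None, default_home='/root'):
    ''' Return a copy of the environment with HOME guaranteed to be set.

        default_home is an assumption: user data scripts usually run as root.
    '''
    env = dict(os.environ if environ is None else environ)

    if not env.get('HOME'):
        # node-gyp reportedly refuses to build without this.
        env['HOME'] = default_home

    return env

def run_build(command=('npm', 'install'), cwd=None):
    ''' Run a build command (hypothetical example) with HOME in its environment.
    '''
    return subprocess.check_call(list(command), cwd=cwd, env=env_with_home())
```

Passing an explicit `env` keeps the workaround local to the one command that needs it instead of changing the environment of the whole script.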

Boto and Amazon Web Services are blessedly stable; I was able to copy-paste code from three years ago and have it just work to guess a reasonable EC2 spot request bid and start up a server instance. One interaction I introduced was the result of finding a runaway 8xlarge EC2 instance from earlier this month that I had forgotten about and continued to pay for (it adds up): the instance terminates itself when it’s done, the run script monitors the instance on a loop, and canceling the script with a KeyboardInterrupt at any point will immediately terminate the instance and cancel the reservation. Just because a computer is in the sky doesn’t mean I don’t want a convincing illusion of running it in my own terminal.
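The interrupt handling can be sketched roughly like this, with the AWS parts left out. It is an illustration under assumptions rather than the real openaddr-ec2-run code: `get_state`, `terminate`, and `cancel_request` are hypothetical callables standing in for whatever Boto calls the script actually makes.

```python
import time

def wait_for_instance(get_state, terminate, cancel_request,
                      poll_seconds=60, sleep=time.sleep):
    ''' Poll an instance until it shuts itself down; clean up on Ctrl-C.

        All three callables are hypothetical stand-ins for cloud API calls:
        get_state() returns a string such as "running" or "terminated",
        terminate() kills the instance, cancel_request() cancels the request.
    '''
    try:
        while True:
            if get_state() == 'terminated':
                # The instance finished its work and killed itself.
                return 'terminated'
            sleep(poll_seconds)

    except KeyboardInterrupt:
        # Don't leave a forgotten server running up a bill.
        terminate()
        cancel_request()
        return 'interrupted'
```

The point of the `try`/`except` around the whole loop is that Ctrl-C at any moment, whether during a status check or during the sleep, lands in the same cleanup path.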

Nov 20, 2014 6:07am

open address machine

The OpenAddresses project is super-interesting right now:

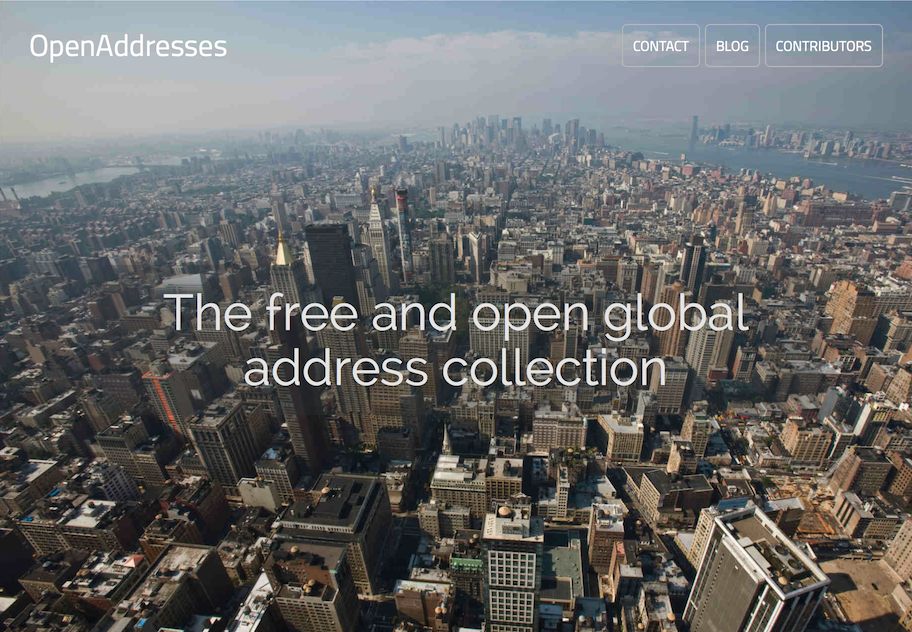

OpenAddresses is a global repository for open address data. In good open source fashion, OpenAddresses provides a space to collaborate. Today, OpenAddresses is a downloadable archive of address files, it is an API to ingest those address files into your application and, more than anything, it is a place to gather more addresses and create a movement: add your government’s address file and if there isn’t one online yet, petition for it. —Launching OpenAddresses.

OA is the free and open global address collection, but it’s just getting off the ground. Ian Dees of longtime OpenStreetMap involvement kicked off the project early this year when OSM balked at bulk address imports. It’s more sensible as a separate project anyway.

I’ve been working on data.openaddresses.io to make the project more legible and responsive.

I’m about six months late to the party, but there’s a ton to do right now. Thinking back on my own involvement in OSM, I remembered that around 2006 the street map tiles were being updated infrequently, and my own willingness to add data was gated by the turnaround time of seeing my input on the real, live map. I’d add some stuff, then twiddle my thumbs for days (or weeks) while the render refreshed. My satisfaction from adding data improved with every advance in OSM’s rendering stack re-render time. Seeing your effect on the data set is an important motivational factor.

OA has a similar issue for me. It’s implemented as a giant bag of JSON files stored in Github, so it’s not immediately obvious where the data lives, how up-to-date it is, or (if you’re submitting new files) whether a data source even works. The processing code works, but it’s not immediately obvious how to make all the pieces fit together.

I have been working on machine, a harness for running the whole process on a more regular cycle. There’s a bunch of interesting moving pieces.

I’ve taken Andy Allan’s chef advice to heart and created a chef recipe collection for preparing OA to run on a bare Ubuntu 14.04 machine. Chef is a no-brainer for me now, and I use it for everything that stands any chance of being important. Andy says:

Configuration management really kicks in to its own when you have dozens of servers, but how few are too few to be worth the hassle? It’s a tough one. Nowadays I’d say if you have only one server it’s still worth it – just – since one server really means three, right? The one you’re running, the VM on your laptop that you’re messing around with for the next big software upgrade, and the next one you haven’t installed yet.

If you want to add a skeletal chef script to any existing repository, start here:

git pull https://github.com/migurski/chefbase.git master

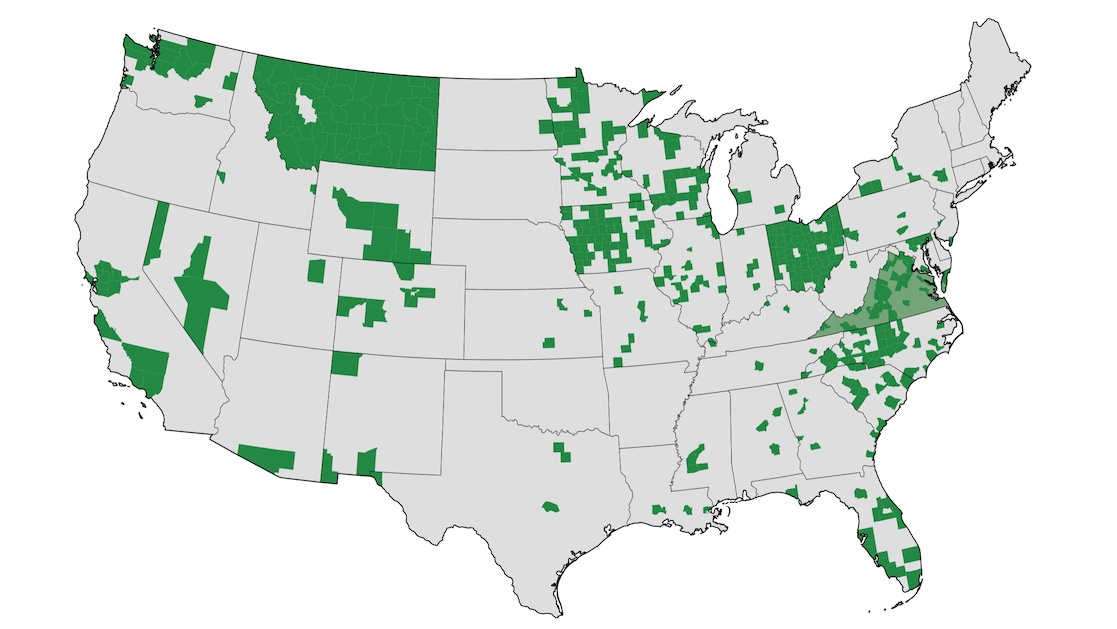

It’s now possible to run the whole OA codebase on a scratch machine, which means that once each week I can start an EC2-XXXL server and have it set up with complete OA code in minutes. It takes a few hours to run everything. We can keep data.openaddresses.io up-to-date with the status of the data, including a fresh map of data from US states and counties (even though OA is international), a complete listing of cached and processed status for all data, and small data samples to provide hints for correctly mapping (“conforming”) source data to OA’s needs.

There remains a lengthy ticket backlog, but I am hoping that OA provides a way to better expose and unify the world’s municipal government spatial data. Today, addresses. Tomorrow, parcels.

Apr 24, 2014 4:07pm

making the right job for the tool

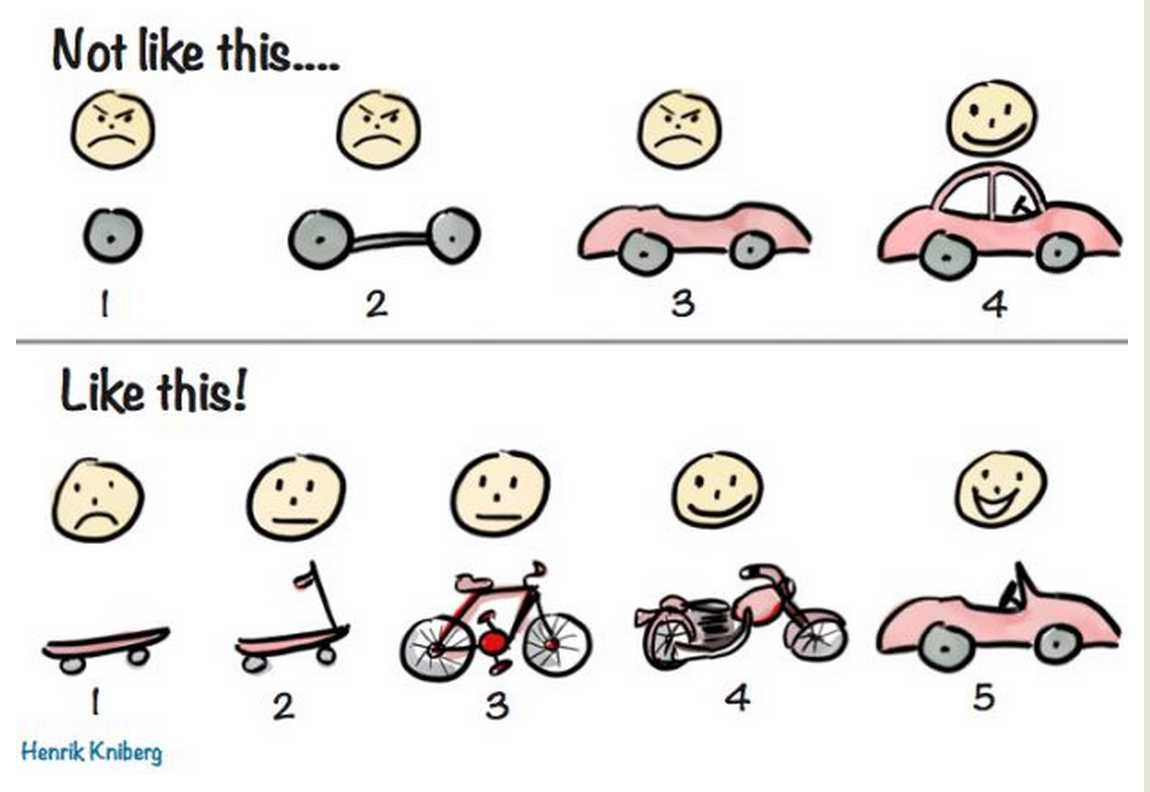

Near the second half of most nerd debates, your likelihood of hearing the phrase “pick the right tool for the job” approaches 100% (cf. frameworks, rails, more rails, node, drupal, jquery, rails again). “Right tool for the job” is a conversation killer, because no shit. You shouldn’t be using the wrong tool. And yet, working in code is working in language (naming things is the second hard problem) so it’s equally in-bounds to debate the choice of job for the tool. “Right tool” assumes that the Job is a constant and the Tool is a variable, but this is an arbitrary choice and notably contradicted by our own research into the motivations of idealistic geeks. Volunteers know the tools they know, and are looking for ways to use their existing powers for good. They are selecting a job to fit the tool. Martin Seay’s brilliant essay on pop music, Ke$ha’s TiK ToK, pro wrestling and conservatism critiques the type of realist resignation that assumes the environment (the job) is immutable:

It is a sterling example of what a number of commentators—I’ll refer you to k-punk—have characterized as the fantasy of realism: an expedient and comfortable confusion of what is politically difficult with what is physically impossible. … This kind of “realism” offers something even more desirable than a clear-eyed assessment of your current circumstances, namely the feeling that you’ve made such an assessment, and that you’ve come away with the conclusion that this is as good as it gets. … This is professional wrestling again: the comforting notion that you know what you need to know, that everything is clear.

At some level, our tools come preselected. At Day Job, we have tool guy Eric Ries on our board, and by design stick to the universes of web scripting languages and user needs research. Going in to a government partnership, we know that the set of jobs for which we are suited is bounded by time and scale. Instead, we look for opportunities where governments are creating the wrong jobs based on the tools they have available. One example is Digital Front Door, an emerging project on publishing and content management where we’re looking at the intertwined evils of CMS software and omnibus vendor contracts. Given a late 90s consensus on content publishing, it seems inevitable that every website project must result in a massive single-source RFP, design, and migration effort. So much risk to pile onto a single spot. How would a city government change the scope of a job if it knew it had other tools available? Would the presence of static site generators and workflows based on git-style sharing models influence the redefinition of the job to be smaller, lower-risk, more agile? I think yes.

“Pick the right tool” is common-sense advice that elides a more interesting set of possibilities. When you can redefine the job, the best tool may be the one you already have.

Apr 13, 2014 5:03am

the hard part

The hard part of coming to State of the Map is that I’m only a little bit connected to the OpenStreetMap project right now, and not spending most of my time on geospatial open source like I used to. I’ll come back to it, but today I’ve had a number of conversations about projects of mine, their status, and whether I have abandoned them. Metropolitan Extracts have not yet been run during 2014, TileStache is stable but has a few outstanding pull requests, and it’s high time I merged Walking Papers with Stamen’s more-stable Field Papers offshoot. Thankfully Vector Tiles remain happily running on the US OSM server.

I wish I could say I had easy answers for these projects; they seem genuinely useful to people but not something I can maintain at the moment and not something I can exactly delegate at CfA.

Apr 5, 2014 6:36pm

end the age of gotham-everywhere

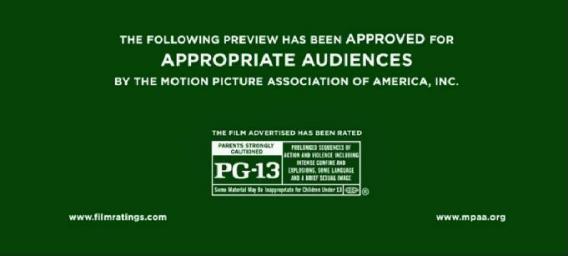

If you’ve attended a movie or generally looked at things in the past five years, you’ll know that we’re in the age of ubiquitous gotham.

The MPAA has switched to Gotham for their ratings screens. If you pay attention to the previews before a movie, it’s now the go-to font for all movie titles. Obama’s 2008 campaign standardized on Gotham and Sentinel (another H&FJ face) for their celebrated visual identity. In 2012, Obama switched things up and standardized on Sentinel and Gotham. Code for America uses these two excellent fonts on our website via the H&FJ cloud service.

Gotham is the inception horn of typefaces.

It’s a major, inescapable part of the visual landscape, and I think it needs a boycott.

You might be interested to know that the celebrated type foundry Hoefler & Frere-Jones who created the typeface is going through an acrimonious divorce right now. Do yourself a quick favor and read Tobias Frere-Jones’s opposition to Hoefler’s motion to dismiss. The short version is that Frere-Jones joined Hoefler’s foundry over ten years ago, and brought with him a set of “dowry” fonts that he had developed for his previous employer. In return, Hoefler allegedly promised 50% of the company, changed its name to Hoefler & Frere-Jones, and spent a complete decade referring to Frere-Jones as “partner” while quietly stalling on making the status official. Frere-Jones finally got fed up waiting and forced the issue, things went pear-shaped, and the studio is now called Hoefler Company.

I’ve had my issues with H&FJ in the past, but this current situation is an object lesson in the perils of half-assing legal relationships. Hoefler is a celebrated designer himself, but in this story plays the role of jerkface suit. Frere-Jones is probably too naïve for his own good, but here he is serving as a critical example for why you should always get it in writing, even just a one-sentence napkin scrawl of intent. I left my own former company Stamen Design in 2012 after nine years, and my experience working with Eric and Shawn and eventually departing was a cakewalk thanks to Eric’s above-board handling of my 25-year-old self in 2003.

So, to draw attention to the need for businesses to treat designers with respect, and the need for designers to insist that business processes happen by the book, I think it’s time we put an end to the age of Gotham everywhere.

Apr 2, 2014 5:14am

on this day

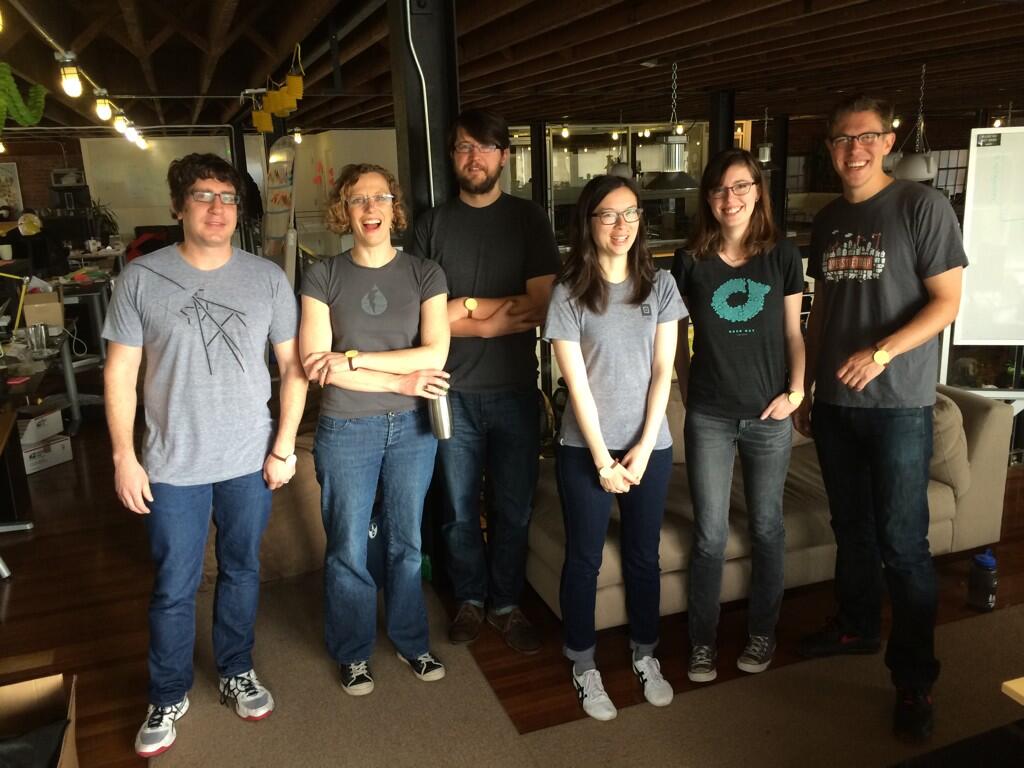

Today, we wrapped up a bunch of fake cities from the goofy tail end of our Code for America site redesign process into a 2015 cities April Fools joke.

Today, everyone on the tech team dressed up as me, right down to the yellow DURR.

Today, Frances and I merged streams and made progress on a proof-of-concept we’ve been thinking about for the Digital Front Door project. Lane wrote that linked post.

Today, I participated on a panel hosted by Zipfian Academy on Data Science For Social Good, with folks who work in education, power, petitions, and health. These are my notes:

Code for America works at the source of data: cities, governments, and the primary source data they produce.

Governments are famously behind on technology, while Silicon Valley is famously out front. So, the biggest technical challenge we face is bridging the trough of disillusionment in the rollercoaster ride of the Gartner Hype Cycle.

We try to build that bridge by working on things that matter to cities, like public records, public services, and communications.

At the same time, we are building and supporting an international community of civic hackers, through projects like the Brigade and a new API for collecting and hosting information about civic tech projects.

Right now, one of the emergent data science issues we see is ETL or Extract/Transform/Load. At our weekly Open Oakland Brigade hack night, people like former fellow Dave Guarino are helping city staff publish ethics commission data.

Come to the Open Oakland meeting every Tuesday, 6:30pm at city hall to participate. Search Google for “ETL for America” to learn more.

If you live in SF, come to the SF Brigade meeting every Wednesday, 6:30pm at Code for America, 9th & Natoma.

All in all a good day.

Mar 17, 2014 12:49am

managers are awesome / managers are cool when they’re part of your team

Apropos the Julie Ann Horvath Github shitshow, I’ve been thinking this weekend about management, generally.

I don’t know details about the particular Github situation so I won’t say much about it, but I was present for Tom Preston-Werner’s 2013 OSCON talk about Github. After a strong core message about open source licenses, liability, and freedom (tl;dr: avoid the WTFPL), Tom talked a bit about Github’s management model.

Management is about subjugation; it’s about control.

At Github, Tom described a setup where the power structure of the company is defined by the social structures of the employees. He showed a network hairball to illustrate his point, and said that Github employees can work on what they feel like, subject to the strategic direction set for the company. There are no managers.

This bothered me a bit when I heard it last summer, and it’s gotten increasingly uncomfortable since. I’ve been paraphrasing this part of the talk as “management is a form of workplace violence,” and the still-evolving story of Julie Ann Horvath suggests that the removal of one form of workplace violence has resulted in the reintroduction of another, much worse form. In my first post-college job, I was blessed with an awesome manager who described his work as “firefighter up and cheerleader down,” an idea I’ve tried to live by as I’ve moved into positions of authority myself. The idea of having no managers, echoed in other companies like Valve Software, suggests the presence of major cultural problems at a company like Github. As Shanley Kane wrote in What Your Culture Really Says, “we don’t have an explicit power structure, which makes it easier for the unspoken power dynamics in the company to play out without investigation or criticism.” Managers might be difficult, hostile, or useless, but because they are parts of an explicit power structure they can be evaluated explicitly. For people on the wrong side of a power dynamic, engaging with explicit structure is often the only means possible to fix a problem.

Implicit power can be a liability as well as a strength. In the popular imagination, implicit power elites close sweetheart deals in smoke-filled rooms. In reality, the need for implicit power to stay in the shadows can cripple it in the face of an outside context problem. Aaron Bady wrote of Julian Assange and Wikileaks that “while an organization structured by direct and open lines of communication will be much more vulnerable to outside penetration, the more opaque it becomes to itself (as a defense against the outside gaze), the less able it will be to “think” as a system, to communicate with itself. The more conspiratorial it becomes, in a certain sense, the less effective it will be as a conspiracy.”

Going back to the social diagram, this lack of ability to communicate internally seems to be an eventual property of purely bottom-up social structures. Github has been enormously successful on the strength of a single core strategy: the creation of a delightful, easy-to-use web UI on top of a work-sharing system designed for distributed use. I’ve been a user since 2009, and my belief is that the product has consistently improved, but not meaningfully changed. Github’s central, most powerful innovation is the Pull Request. Github has annexed adjoining territory, but has not yet had to respond to a threat that may force it to abandon territory or change approach entirely.

Without a structured means of communication, the company is left with the vague notion that employees can do what they feel like, as long as it’s compliant with the company’s strategic direction. Who sets that direction, and how might it be possible to change it? There’s your implicit power and first point of weakness.

This is incidentally what’s so fascinating about the government technology position I’m in at Code for America. I believe that we’re in the midst of a shift in power from abusive tech vendor relationships to something driven by a city’s own digital capabilities. The amazing thing about GOV.UK is that a government has decided it has the know-how to hire its own team of designers and developers, and exercised its authority. That it’s a cost-saving measure is beside the point. It’s the change I want to see in the world: for governments large and small to stop copy-pasting RFP line items and cargo-culting tech trends (including the OMFG Ur On Github trend) and start thinking for themselves about their relationship with digital communication.

Mar 2, 2014 7:47am

bike eight: french parts

For about six years, this blue Univega Sportour harvested from the dumpster across 17th Street was my primary get-around bike:

In October, I was riding to work on the first day of the Bart strike. The F Transbay bus had no room on the front rack, so I took the Caltrans bike shuttle instead. I used to do this every day, typically sharing the van with fourteen other riders. Many of them tend to be messengers and other working cyclists, and I was out of practice. Someone scolded me for putting my bike into the wrong spot on the trailer, and my already off-kilter morning was thrown further out of balance. The bike shuttle no longer stopped at the Transbay terminal, and instead let us off at the foot of Harrison. I rode east, through a city confused by extra traffic and new patterns. Hours later, I heard how the whole city seemed to be more on-edge than usual, stress building up in the face of Bart riders forced to find other means of getting around.

As I crossed 7th Street, I was struck from the left by a taxicab and thrown 20 feet to the ground. I was taken to the emergency room at SF General, while the police took the bike to my office. It spent the next several months in the basement of Code for America as my back and shoulder healed so I could ride again. The Univega was a true trash treasure, and I wanted to salvage the parts I had added to it over time.

The frame was a total loss. The wheels were twisted out of shape, though the hubs were fine. The handlebars could be bent back, the stem was fine, the seat survived, the brake looked good, and I had some hope for the cranks.

A few weeks of bike shopping turned up this bright green Motobecane in Berkeley:

I’d never worked on a French bike before, and wasn’t sure entirely what to expect. It looked like any other steel bike boom frame, but when I got to work and stripped it down to the frame, I found a few important differences.

The brake hole on this frame wasn’t wide enough for a recessed mounting, typical of newer bikes and my existing brake. I looked at buying some nutted-mount caliper brakes, and briefly considered drilling out the back of the fork crown to make room for the wider bolt. I gave up on using the caliper front brake from the Univega and instead cleaned up the center-pull brakes that came with the Motobecane. I got most of the gunk off and put them back on as I had received them, but found that the adjustment of a center-pull brake is a serious pain. There’s a tool called a “third hand” you can use to make this easier; I didn’t get one.

The dimensions of the spindle holding the cranks turned out to be important. My older cranks were made for a longer spindle, and when I first assembled everything and tightened the cranks on, they would not turn. Oops. I pulled apart the bottom bracket from the Univega to see if I could salvage parts, but they weren’t transferrable to the new bike. I did a bit of research on bottom brackets, and learned that French frames are different from other frames. The difference is not always apparent by sight. I ended up buying a complete, sealed, French-threaded bottom bracket from Velo Orange, along with the tool to install it.

Finally, when I added my old cranks to the new frame I realized that they were slightly bent from the accident. It wasn’t visually obvious, but felt completely wrong when riding. So, I had to buy a new set of Sakae cranks with a neat-looking tornado shape. Cool, but the overall costs really added up.

One of the fun initial steps of building this bike was the chance to make wheels from parts. My friend Adam is a bicycle mechanic, and taught me how to build traditional spoked wheels on a truing stand. I’d never done this before, but with his direction even the one that I mostly built turned out great. Actually, I couldn’t have done half this stuff without regularly asking Adam for help. From him I learned that 1.375” looks a lot like 35mm, 24 threads-per-inch looks a lot like 1mm spacing, stems come in 22mm and 22.2mm diameters, and slightly-bent cranks go in the recycling with the beer bottles.
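Those near-misses are easy to check with a little arithmetic. The short Python sketch below converts the inch figures from the paragraph above into millimeters so they can be compared against the metric ones. The function names are made up for illustration, and the only constant used is the standard 25.4 mm per inch.

```python
MM_PER_INCH = 25.4

def inches_to_mm(inches):
    ''' Convert a length in inches to millimeters.
    '''
    return inches * MM_PER_INCH

def thread_pitch_mm(threads_per_inch):
    ''' Distance between adjacent threads, in millimeters.
    '''
    return MM_PER_INCH / threads_per_inch

# A 1.375 inch diameter next to a 35mm one.
print('1.375in is %.3fmm, next to 35mm' % inches_to_mm(1.375))

# 24 threads per inch next to 1mm thread spacing.
print('24 TPI is %.3fmm per thread, next to 1mm' % thread_pitch_mm(24))

# The two stem diameters.
print('stems differ by %.1fmm' % (22.2 - 22.0))
```

The diameters differ by less than a tenth of a millimeter and the thread spacing by about six hundredths, which is why the mismatch is not apparent by sight.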

Now I own this great bike, and a bag of spare parts for the Bike Kitchen:

Feb 18, 2014 7:01am

being a client

We released the new Code for America website on Friday, and now it is live.

We’ve been using this project as a testbed for new content management and design process ideas that we think might be helpful to our government partners. In conversations with cities, I’ve learned that no one is ever happy with their content management system, and no one ever thinks they have control over their own presence. Meanwhile, GOV.UK has shown off the importance and value of working on the website first, and the central role it plays in government presence. “Website” is such an uncompelling word, but a site is the default digital front door for any government. This makes city sites interesting to Code for America.

One of the great joys of this project lay in playing the role of the client, something new for me. At Stamen I spent nine years on the other side of the table, and here we got to commission Brighton’s Clearleft and Colorado’s Dojo4 agencies to do visual design and front-end code. Clearleft’s pattern deliverables are the special-special that made the final work so strong. Jon Aizlewood’s introduction to the concept convinced me to contact Clearleft. Jeremy Keith’s interest in design systems kicked off the process, and Anna Debenham’s fucking rock star delivery brought it all home.

Clearleft has publicized their copy of the patterns, while we’ve taken it one step further by hosting our stylesheets directly from the pattern library. We have a far-flung, increasingly-worldwide Brigade program with sites of their own to run, and I’m excited to have a normal website redesign process result in a shareable public utility for the future sites created by our local communities.

I’m currently procrastinating on a blog post detailing the way we blended Github, Jekyll, Google Docs, and Wordpress to create a heterogeneous CMS for the site. If you find any typos, bugs, or problems on the site, send us a pull request via Github with a fix.

Jan 28, 2014 4:41am

bike seven: building a cargo bike

This Nishiki Colorado is the seventh bicycle I’ve owned in my life (I’m not counting the blown-plastic bigwheel I rode in Ansonia, CT in 1982). In an effort to further stave off car ownership, I’ve outfitted it as a cargo bike using an Xtracycle FreeRadical X1:

I mostly use it for food shopping. With the cargo bags on either side, I can move a four-bag load of groceries the 2.3 mile distance between my house and the grocery store.

The conversion was relatively simple.

The Nishiki Colorado base came from Craigslist, where late 80s/early 90s mountain bikes are currently easy to find. This one was $90, in excellent condition. I had expected to get a beater bike and just use the frame, but the components on the bike were all in great shape. The wheels did require a bit of spoke adjustment, something that I chose to learn to do myself instead of asking a bike store to quickly do it. I bought the FreeRadical at Tip Top, a bike store near my house in North Oakland, for $500.

The FreeRadical extension attaches to the frame at three points: one bolt attaches near the kickstand mounting plate just behind the bottom bracket, and two additional bolts fit into the dropouts where the wheel normally connects. The connection is strong and stiff, and makes a single extra-long frame for the bike as a whole. The cargo extension has its own additional dropouts, and the rear wheel attaches to those about 18” back from where it would normally sit. Xtracycle includes detailed instructions and there are a few adjustments for different types of base bikes.

There were three challenging steps to completing the connection to the rear wheel: moving the rear brake, moving the rear derailleur, and lengthening the chain.

The FreeRadical requires a linear-pull brake, different from the center-pull cantilever brakes that might typically come with this kind of bike. The linear-pull has to be bought separately, and uses a slightly different length of cable to engage the brake. The bike store advised me to get a new brake lever to account for the difference in length, but I found that the “mushy” feel with the old lever was tolerable so I left it alone.

The rear derailleur is a fiddly part, and since it’s so much further to the back of this bike I also had to use an extra-long cable to attach it. The longer cables are usually marketed to tandem bike owners, and Tip Top had it included with the FreeRadical in the box. Adjusting a derailleur can be a pain, but fortunately the ones produced in the past ~25 years are relatively standard and easy to work with. Once I had it all connected and set the high and low limits, it took about fifteen minutes of riding up and down the street with a screwdriver in my hand, stopping every few feet to adjust the derailleur indexing until it all clicked into place.

Lengthening the chain requires a bike chain tool, and you have to buy two normal-length chains and connect them end-to-end.

Here’s what the bike looked like when I first brought it home:

I’m happy with the goofy blue & yellow color, and I’m glad that the new brake and derailleur placement still let me keep the color-matched blue cable housings up at the front. I’ve taken it on BART once, and I didn’t attempt to move it up the stairs and used the station elevators instead. I got a few weird looks and comments on the bike.

Jan 21, 2014 6:06am

blog all video timecodes: how buildings learn, part 3

Following a tip from Jason Kottke, we’ve been watching the six-part BBC series How Buildings Learn, narrated by Stewart Brand and based on his book. The third part, Built For Change, should be required viewing for any designer or developer. It’s rich with advice now quietly part of the software canon. This includes several cameos from architect Christopher Alexander, famous to the software world as the inspiration for the concept of design patterns. What’s most interesting here is the explicit relationship between longevity and lean, repair-like methods.

“All buildings are predictions; all predictions are wrong.”

On San Francisco’s numerous victorians, including the famous painted ladies: “Right angles, flat walls, and simple pitched roofs make them easy to adapt and maintain.”

Montréal’s McGill University Next Home project is an example of homes designed for modification. They’re fairly standard-looking row houses, “easily modified, customized as finances allow … easily adaptable, flexible, and most all affordable.” Each building is sold half-finished, with new owners expected to finish parts of the house beyond the core living area:

Once you get comfortable with the idea that a building is never really finished, then it comes naturally to build for flexibility. For instance, in a new building you can have some areas that are left half-completed, the way attics used to be.

Professor Avi Friedman, on revisiting the homes:

5,000 homes have been built in Montréal since the idea was first introduced. When we came back to the homes three years later, to our surprise and joy we found that 92% of all the buyers changed, modified, improved the homes.

The theme of architects returning to a completed project, to check on how it’s been used and learn from its post-construction history, is one that Brand returns to many times. Lots of congruence with standard software UX practice there.

On conservatism:

Most buildings that are designed by architects to look radical, wind up pretty conservative. A better approach is to start conservative and sensible, and then let the building become radical by being responsive.

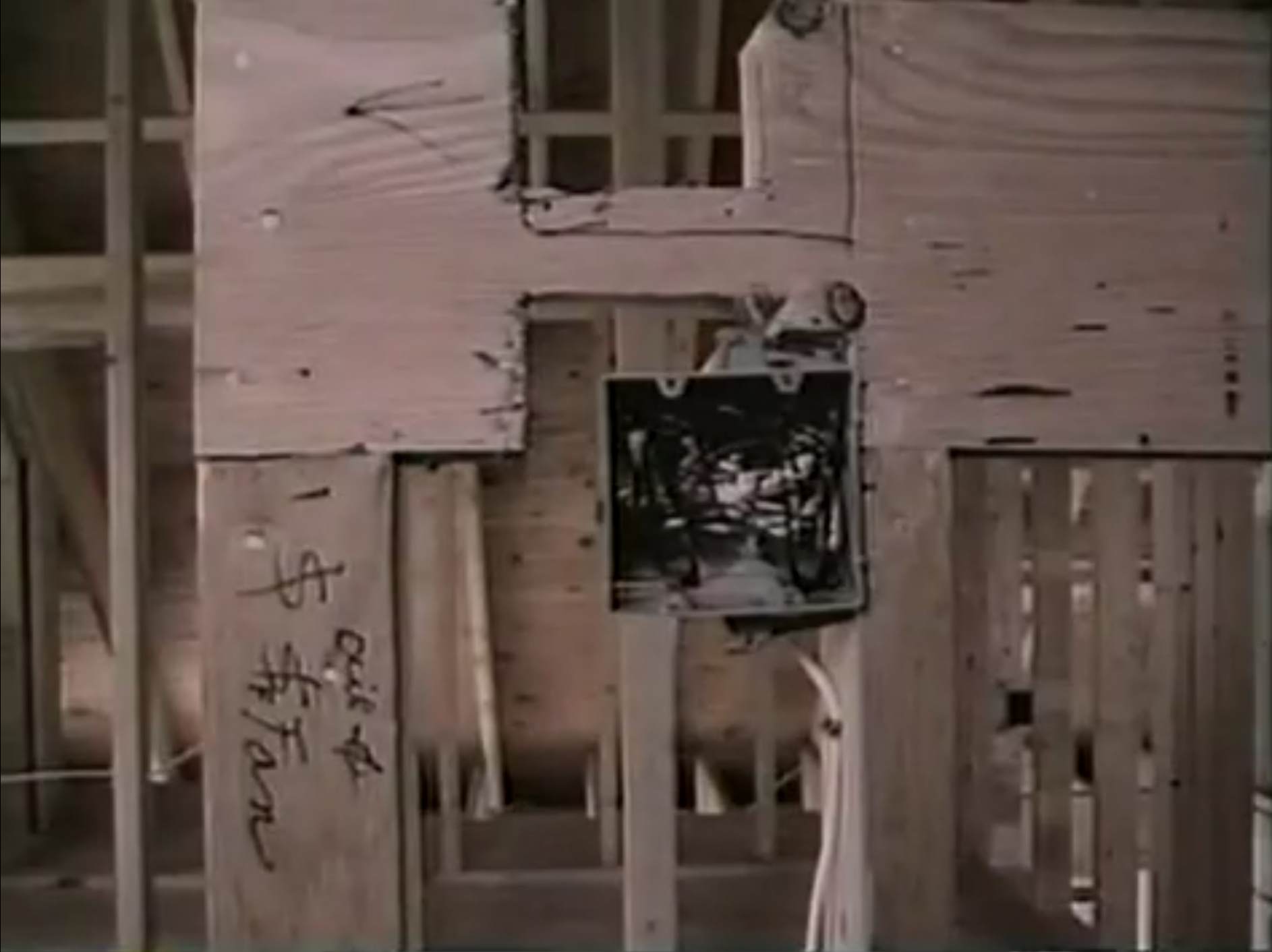

The episode finishes up with architect John Abrams talking about his company’s fascinating practice of meticulously documenting every home they construct in photographs, prior to finishing. The photos show plumbing, framing, electrical, and other construction details prior to the addition of drywall:

We started this process years ago. What happens is, we take those photographs, put them in a book, and that book stays with the house. Presumably forever. It becomes very useful to the carpenters and tradespeople, but then it increases in value over time … there’s this document, it’s like x-ray vision into the walls.

“A building is not something you finish, a building is something you start.”

Jan 20, 2014 7:26am

talk notes, urban airship speaker series

Nolan Caudill extended an invitation to come speak at the Urban Airship Morning Liftoff Speaker Series last week, and I was happy to have the chance to put into words and pictures some of what I’ve been working on at Code for America. Our amazing class of 2014 fellows just started, and we’ve been rushing to fill their heads with stuff before sending them off to their city residencies for the month of February. I’m so excited about the current class; it’s the first time I’ve been here for the full year (I joined in May of last year).

The notes below are a partial summary of my talk from Friday morning. I adapted some elements from my November keynote at Citycamp Oakland, and some thoughts that I previously put into my keynote at TransportationCamp West.

Coding with government is special. Government operates at longer time scales than startups, and remembers a time when information technology used to mean paper.

Paper is easy to mock. Jon Stewart has been taking the Veterans Administration to task for months now, about their massive backlog of outstanding medical claims. The floor under these boxes is supposedly having structural problems:

Paper is easy to mock, but it takes people with hearts and brains to move it around, and often the human processes around paper form communities of practice to get things done better and more effectively than simply computerizing everything.

A useful question, then, is how our current code and technology choices at CfA might better preserve what’s good about the old while still introducing the innovation of the new.

Three important technical goals can help: resiliency to environments and expectations, error-friendliness for developers and users, and sustainability for makers and caretakers.

I’m thinking of resiliency to two factors: environments, and expectations. I love this photo of Carlos Valenciano shutting down Kansas City’s IBM mainframe, the same one that he turned on almost two decades ago:

The technology environments used by local governments are changing, from heavy mainframes to smaller, more nimble systems, often open source like the work we do at CfA. This year’s project with Louisville, the Jail Population Management Dashboard, was developed with portability across environments in mind. Shaunak, Marcin, and Laura developed a supple and gorgeous visualization environment, and backed it with a conservative, cleanly-documented backend layer written in PHP and SQL Server. Those are two decidedly un-sexy technologies, but the bright seam between front-end and back-end allowed Denver to adapt the dashboard to their own purposes with their own compliant backend. Resiliency.

Adapting to new expectations means riding the border between commerce and culture. I’m not yet comfortable with the right wording here, but CfA has benefited greatly from the positive perception of helpful techies planning civic hackathons. Can this perception last? Or isn’t it our mission to work on stuff that matters so we can push the work to the unsexy societal gaps where needs are unaddressed?

Error-friendliness is a term I’ve borrowed from Ezio Manzini’s paper on suboptimality: “low specialisation or ‘suboptimality’, regarding a certain purpose, is a strategic valuable property for biological organisms, summoned to confront themselves, in time, with potential environmental changes.” Developer error friendliness is permission to deliver work that’s suboptimal in strategic ways that allow it to be touched, tweaked, and modified piecemeal over time. The enemy here is premature efficiency: the Don’t Repeat Yourself or Convention Over Configuration principles, when taken to extremes, are two ways in which we rob ourselves of clues to intent in code.

David Edgerton’s Shock Of The Old offers additional hints:

As the British naval officer in charge of ship construction and maintenance in the 1920’s put it: “repair work has no connection with mass-production.”

City information technology is more like repair work than mass-production. Code that addresses user needs must be fit to those needs, and there’s rarely a mass-market product to be found that can do this without adjustment and tweaks. This similarity to repair is an advantage: the approach is more craft-like, more custom, and therefore carries with it more opportunities for learning in the agile/lean sense. For what it’s worth, this is also a summary of what I think went wrong with the 2013 relaunch of Healthcare.gov: a commodity-style purchasing mentality applied to a totally new service, with no opportunity for experimentation and learning hiding in that massive price tag.

User error-friendliness is a related concept. Try searching for a birth certificate in our Oakland public records application RecordTrac, and you’ll be immediately pointed toward the County of Alameda's Clerk-Recorder's Office (510-272-6362 or acgov.org/auditor/clerk) for this commonly-encountered mistake. Small-scale, repair-like moves continually nudging users toward the things they actually want.
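For illustration, here’s a minimal sketch of that kind of nudge in Python. This is not RecordTrac’s actual code, and I’m assuming a plain substring match on the request text; the `Referral` type, the `REFERRALS` table, and the `find_referral` function are hypothetical names made up for this example. Only the birth certificate referral itself comes from the example above.

```python
# Hypothetical sketch: none of these names come from RecordTrac itself.
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Referral:
    agency: str
    contact: str


# Phrases that signal a request the city cannot fulfil, mapped to the
# agency that can. The single entry mirrors the birth certificate example.
REFERRALS = {
    "birth certificate": Referral(
        agency="County of Alameda Clerk-Recorder's Office",
        contact="510-272-6362 or acgov.org/auditor/clerk",
    ),
}


def find_referral(request_text: str) -> Optional[Referral]:
    """Return a referral if the request mentions a known misdirected topic."""
    # Lowercase and collapse whitespace so small typing differences still match.
    normalized = " ".join(request_text.lower().split())
    for phrase, referral in REFERRALS.items():
        if phrase in normalized:
            return referral
    return None
```

Calling `find_referral("I need a copy of my birth certificate")` returns the county referral, while an unrelated request returns `None` and proceeds as usual. Keeping the table as plain data is the repair-like part: staff can add a new entry each time they notice another commonly misdirected request.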

Sustainability is about who can modify a system, and who can verify that it’s working. Github and “learn to code” are necessary but not sufficient answers to this problem, and it’s not enough for us to simply throw code over the fence when we’re done. Care must be taken that README documents say the right things to the right audiences, that basic architectural principles of the web are honored, and that we’re not landing anyone in hot water by delivering indecipherable weird (a.k.a. “Node for America”).

We’ve built some minimalist instance monitoring services and adapted a repository testing app to help make this last part a reality, and we’ve got a full plate of work planned for this year to help our fellows do their best work.

Jan 10, 2014 6:12am

john mcphee on structure

John McPhee’s Structure made a big impression on me when I read it last year, so I’m happy to see it out from behind the New Yorker paywall. It’s a longform investigation of thought process and order and tools and approach to writing, and I’ve nicked some of the scissors & paper ideas for my own organization.

On crisis:

The picnic-table crisis came along toward the end of my second year as a New Yorker staff writer (a euphemistic term that means unsalaried freelance close to the magazine). In some twenty months, I had submitted half a dozen pieces, short and long, and the editor, William Shawn, had bought them all. You would think that by then I would have developed some confidence in writing a new story, but I hadn’t, and never would. To lack confidence at the outset seems rational to me. It doesn’t matter that something you’ve done before worked out well. Your last piece is never going to write your next one for you. Square 1 does not become Square 2, just Square 1 squared and cubed. At last it occurred to me that Fred Brown, a seventy-nine-year-old Pine Barrens native, who lived in a shanty in the heart of the forest, had had some connection or other to at least three-quarters of those Pine Barrens topics whose miscellaneity was giving me writer’s block. I could introduce him as I first encountered him when I crossed his floorless vestibule—“Come in. Come in. Come on the hell in”—and then describe our many wanderings around the woods together, each theme coming up as something touched upon it. After what turned out to be about thirty thousand words, the rest could take care of itself. Obvious as it had not seemed, this organizing principle gave me a sense of a nearly complete structure, and I got off the table.

On order:

When I was through studying, separating, defining, and coding the whole body of notes, I had thirty-six three-by-five cards, each with two or three code words representing a component of the story. All I had to do was put them in order. What order? An essential part of my office furniture in those years was a standard sheet of plywood—thirty-two square feet—on two sawhorses. I strewed the cards face up on the plywood. The anchored segments would be easy to arrange, but the free-floating ones would make the piece. I didn’t stare at those cards for two weeks, but I kept an eye on them all afternoon. Finally, I found myself looking back and forth between two cards. One said “Alpinist.” The other said “Upset Rapid.” “Alpinist” could go anywhere. “Upset Rapid” had to be where it belonged in the journey on the river. I put the two cards side by side, “Upset Rapid” to the left. Gradually, the thirty-four other cards assembled around them until what had been strewn all over the plywood was now in neat rows. Nothing in that arrangement changed across the many months of writing.

On attention:

So I always rolled the platen and left blank space after each item to accommodate the scissors that were fundamental to my advanced methodology. After reading and rereading the typed notes and then developing the structure and then coding the notes accordingly in the margins and then photocopying the whole of it, I would go at the copied set with the scissors, cutting each sheet into slivers of varying size. If the structure had, say, thirty parts, the slivers would end up in thirty piles that would be put into thirty manila folders. One after another, in the course of writing, I would spill out the sets of slivers, arrange them ladderlike on a card table, and refer to them as I manipulated the Underwood. If this sounds mechanical, its effect was absolutely the reverse. If the contents of the seventh folder were before me, the contents of twenty-nine other folders were out of sight. Every organizational aspect was behind me. The procedure eliminated nearly all distraction and concentrated only the material I had to deal with in a given day or week. It painted me into a corner, yes, but in doing so it freed me to write.

On software:

He listened to the whole process from pocket notebooks to coded slices of paper, then mentioned a text editor called Kedit, citing its exceptional capabilities in sorting. Kedit (pronounced “kay-edit”), a product of the Mansfield Software Group, is the only text editor I have ever used. I have never used a word processor. Kedit did not paginate, italicize, approve of spelling, or screw around with headers, wysiwygs, thesauruses, dictionaries, footnotes, or Sanskrit fonts. Instead, Howard wrote programs to run with Kedit in imitation of the way I had gone about things for two and a half decades. … Howard thought the computer should be adapted to the individual and not the other way around. One size fits one. The programs he wrote for me were molded like clay to my requirements—an appealing approach to anything called an editor.

Jan 5, 2014 8:02pm

blog all oft-played tracks V

The thing about not blogging for six months is that you lose your sense of what’s worth talking about. For the most part, I’ve been using Twitter, Pinboard, Tumblr, and Code for America’s own mailing lists to let out my daily allowance of words and pictures. However, I remain a believer in Jeremy Keith’s suggestion that in a universe of pre-baked publishing platforms, there’s no “more disruptive act than choosing to publish on your own website,” so here I am making an effort to write on this site again in 2014.

This music:

- made its way to iTunes in 2013,

- and got listened to a lot.

I’ve made these for the past four years, lately. Also: everything as an .m3u playlist.

1. Mark Reeve: Planet Green

One track from Cocoon’s excellent compilation series.

2. Trust: Candy Walls

Came to me by way of Sha’s mixtapes for friends.

3. Laika: If You Miss (Laika Virgin Mix)

Spookiest, most earworming thing on the 1995 Macro Dub Infection compilation.

4. Hot Chip: These Chains

5. Metallica: The Four Horsemen

From when Metallica was still playing screechy speed metal in the East Bay, 1983.

6. Burial: Truant

Loner might be a better track, but this one made the 2013 number-of-listens cut.

7. Fuck Buttons: The Lisbon Maru

Thanks Nelson for the recommendation. Surf Solar is also excellent, like a bad dream.

8. Grimes: Oblivion

9. John Tejada: Stabilizer (Original Mix)

Perfectly reminiscent of Orbital.

10. T-Power: Elemental

1994-ish proto-jungle.