tecznotes

Michal Migurski's notebook, listening post, and soapbox. Subscribe to this blog.

Check out the rest of my site as well.


Feb 21, 2009 9:11pm

walking papers

It'd be awesome to be able to mark up OpenStreetMap on paper, while walking around, and still have the benefit of something that others could trace when you're done. Right now, you can throw a GPS in your bag and upload your traces for others to turn into actual road data. If you mark your notes on paper, though, it's a more tedious and lonely process to get that data into the system. For U.S. users, most of the raw roads are in the system already. Largely, what's left to be done consists of repositioning sloppy TIGER/Line roads and adding local detail like parks, schools, zoning, and soon addresses.

It'd be interesting for generated printouts of OSM data to encode enough source information to reconnect the scanned, scribbled-on result back with its point of origin, and use it as an online base map just like GPS traces and Yahoo aerial imagery.
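Here is a sketch of what "enough source information" might look like. It assumes the printout records its bounding box and zoom level in a short query string small enough for a QR code. The `PrintSource` record, the `bbox`/`z` field names and the five-decimal precision are all hypothetical choices for illustration, not an existing OSM format.

```python
from dataclasses import dataclass
from urllib.parse import parse_qs, urlencode


@dataclass(frozen=True)
class PrintSource:
    """Hypothetical record of where a printed map came from."""
    north: float
    west: float
    south: float
    east: float
    zoom: int


def encode_source(src: PrintSource) -> str:
    # Pack bounds and zoom into a short query string that fits in a QR code.
    # Field names are made up for this sketch.
    return urlencode({
        "bbox": f"{src.north:.5f},{src.west:.5f},{src.south:.5f},{src.east:.5f}",
        "z": src.zoom,
    })


def decode_source(payload: str) -> PrintSource:
    # Reverse of encode_source: recover the bounds from a decoded QR string.
    fields = parse_qs(payload)
    north, west, south, east = (float(v) for v in fields["bbox"][0].split(","))
    return PrintSource(north, west, south, east, int(fields["z"][0]))
```

Five decimal places of latitude/longitude is roughly meter-level precision, which is plenty for a hand-annotated walking map and keeps the payload short.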

A round trip through the papernet.

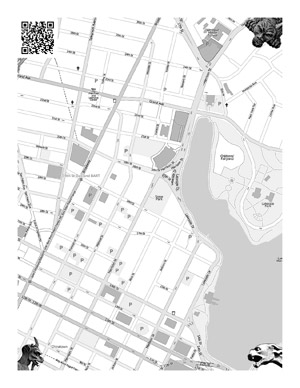

You might print out something that looks like this:

Maybe you'd print out a bunch of them, one for each neighborhood where you'll be walking and making local notes. You could fold them up and stick them in your pocket, photocopy them for other people, whatever. When you were done, you'd scan them in and a web service would figure out where the map came from, and make it available as a traceable base layer in Potlatch or JOSM.

How would the service know where the map came from?

I've been looking at SIFT (Scale Invariant Feature Transform), a computer vision technique from UBC professor David Lowe. The idea behind SIFT is that it's possible to find "features" in an image and describe them independently of how big they are, or whether they've been rotated.

That's why the picture above has gargoyles in the corner.

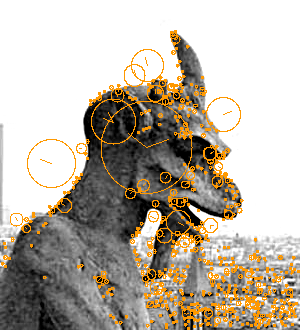

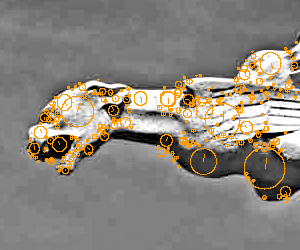

They don't have to be gargoyles, they just have to look unique enough so you could tell them apart and recognize them separately from the map. SIFT can look at an original image, and find features in it like this:

Check out the examples in Lowe's paper - he describes how detected features can be used to reconstruct entire objects.

When a new scan is uploaded, SIFT finds all three gargoyles and then it knows exactly where the corners of the original image are, even if the person scanning it did it upside down or sideways. It also knows where to find the QR barcode, the square thing that looks like static in the top left corner. Aaron's been thinking about QR codes for at least two years now, and he's got a simple decoder ring webserver that he banged together out of Java. The code would say something simple like "this map came from 37d48'N, 122d16'W", and that would be just about enough to make the scan into a base map that people could trace in an OpenStreetMap editor.
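Three distinct markers are what make orientation recoverable. Suppose each gargoyle's position on the original print is known and SIFT reports where each one landed on the scan. Those three pairs then pin down an affine transform (translation, rotation, scale, and some shear), and because the gargoyles look different, you know which pair is which. Below is a minimal pure-Python sketch using Cramer's rule. The marker coordinates are made up, and a real pipeline would more likely do a least-squares fit over many matched features.

```python
Point = tuple[float, float]


def _det3(m: list[list[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(m: list[list[float]], rhs: list[float]) -> list[float]:
    """Solve m @ v = rhs for a 3x3 system using Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("markers are collinear; cannot fit an affine transform")
    solution = []
    for col in range(3):
        replaced = [row[:] for row in m]
        for r in range(3):
            replaced[r][col] = rhs[r]
        solution.append(_det3(replaced) / d)
    return solution


def affine_from_three(src: list[Point], dst: list[Point]):
    """Return a function mapping src-frame points into the dst frame.

    src: marker centers on the original print; dst: the same markers on the scan.
    """
    m = [[x, y, 1.0] for x, y in src]
    a, b, c = _solve3(m, [x for x, _ in dst])  # x' = a*x + b*y + c
    d, e, f = _solve3(m, [y for _, y in dst])  # y' = d*x + e*y + f

    def transform(p: Point) -> Point:
        x, y = p
        return (a * x + b * y + c, d * x + e * y + f)

    return transform


# Made-up example: a 1000x700 print scanned upside down.
to_scan = affine_from_three(
    [(50, 50), (950, 50), (50, 650)],    # gargoyles on the original
    [(950, 650), (50, 650), (950, 50)],  # where SIFT found them on the scan
)
print(to_scan((0, 0)))  # → (1000.0, 700.0): the print's top-left is the scan's bottom-right
```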

I got an implementation of SIFT from Andrea Vedaldi and Brian Fulkerson, computer vision students at UCLA. Their implementation makes .sift files like this one or this one. The code to read them isn't overly complicated once you know what all the numbers mean: here's a short script, markup.py, that reads the first four values from each line of a .sift file (x, y, scale, rotation) and draws a little circle on the source image for each one. Easy. The remaining 128 numbers on each line are like a signature: you can determine whether features from two different images are the same thing by looking at a simple squared, summed distance between the two.
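Here is a minimal reader for the format as the paragraph above describes it. It assumes each line holds exactly 132 whitespace-separated numbers: x, y, scale, rotation, then the 128-value descriptor. This is not markup.py itself and it skips the circle drawing. `Feature`, `read_sift` and `squared_distance` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    """One SIFT keypoint: position, scale, orientation, 128-number signature."""
    x: float
    y: float
    scale: float
    rotation: float
    descriptor: list[float]


def read_sift(lines) -> list[Feature]:
    """Parse .sift text, assuming one feature of 4 + 128 numbers per line."""
    features = []
    for line in lines:
        values = line.split()
        if not values:
            continue  # tolerate blank lines
        if len(values) != 4 + 128:
            raise ValueError(f"expected 132 numbers, got {len(values)}")
        x, y, scale, rotation = (float(v) for v in values[:4])
        descriptor = [float(v) for v in values[4:]]
        features.append(Feature(x, y, scale, rotation, descriptor))
    return features


def squared_distance(a: list[float], b: list[float]) -> float:
    """Summed squared difference between two descriptors; smaller = more alike."""
    return sum((p - q) ** 2 for p, q in zip(a, b))
```

Because `read_sift` accepts any iterable of lines, an open file works directly, e.g. `read_sift(open("scan.sift"))` (the filename is a placeholder).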

Of course, it's also possible to forgo SIFT entirely and pay random strangers a tenth of a penny to find gargoyles for you, or just have the people uploading the scanned maps point them out.

All that's left is to build the thing to do the stuff. =)

Comments (20)

I was thinking about doing something like this, with an Asterisk front end so you could call in and a map would be faxed to you, and then you could fax it back and then the magic would happen. Thought it might be neat for rural fieldwork. It's been one of those "maybe I'll have something running for WhereCamp" things that I haven't gotten around to. I was just planning to encode the bounds of the map into bar/QR codes and rely on a distinctive border around the map image to find its boundaries. The SIFT idea is pretty cool though. I think there might be some interesting things in diff'ing the scanned/faxed image and the original map tiles, then trying to pull features out of it.

Posted by Marc Pfister on Saturday, February 21 2009 10:09pm UTC

This is excellent. You could also request that notes be made in a specific color, say red, and then use some magic on the channels of the image to extract the annotations without having to suffer through manual tracing. I'm curious, is there a compelling reason (beyond aesthetics) to use an arbitrary image as corner markers rather than putting a Micro QR code in each corner with that corner's coords encoded?
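One way the "magic on the channels" could work: assume annotations are made in red pen on a map with little strong red in it, and flag pixels whose red channel is bright and well above both green and blue. The thresholds below are guesses that would need tuning per scanner and pen. The image is represented as nested lists of RGB tuples to keep the sketch dependency-free.

```python
Pixel = tuple[int, int, int]


def red_ink_mask(pixels: list[list[Pixel]], min_red: int = 150,
                 margin: int = 60) -> list[list[bool]]:
    """Return a mask that is True where a pixel looks like red pen.

    A pixel counts as red ink when its red channel is at least min_red and
    exceeds both green and blue by at least margin. White paper (all channels
    high) and black linework (all channels low) are both rejected.
    """
    return [
        [r >= min_red and r - g >= margin and r - b >= margin for r, g, b in row]
        for row in pixels
    ]
```

The resulting mask could then be traced or vectorized on its own, without the base map underneath.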

Posted by bryan on Sunday, February 22 2009 8:56pm UTC

@Marc - the fax thing had not occurred to me at all, and the infrastructure for faxing stuff around is much more prevalent than that for printing & scanning (I imagine). @Bryan - sounds like you're also thinking about automatically scrubbing out the base map bits. It makes sense - one thing I've considered is designing a tileset for print that uses light shades of cyan or magenta, so it's easier to differentiate the added bits when the result is scanned. The reason for the corner images is that I'm not sure SIFT would be able to detect arbitrary QR codes in unknown locations. I'm thinking that the images are much less likely to collide with map features, and they help you figure out where the QR is on the page in order to read it. I won't deny that the aesthetics are starting to grow on me as well. =) I poked some more at SIFT this morning, and made some good progress. This is the result of a script that accepts two images and their descriptors, and tries to look for instances of one (the needle) in the other (the haystack); the needle is on the far right and the orange boxes show where it was detected: http://mike.teczno.com/img/qrgoyles/matchedup.png The code to generate the above is at http://mike.teczno.com/img/qrgoyles/matchup.py
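The needle/haystack idea can be sketched as nearest-neighbor descriptor matching followed by a bounding box around the matched haystack points. This version substitutes the nearest/second-nearest ratio test from Lowe's SIFT paper to discard ambiguous matches. It is not necessarily what matchup.py does, and the 0.8 ratio is a common default rather than a tuned value.

```python
Descriptor = list[float]


def match_features(needle: list[Descriptor], haystack: list[Descriptor],
                   ratio: float = 0.8) -> list[tuple[int, int]]:
    """Return (needle_index, haystack_index) pairs that pass the ratio test."""
    matches = []
    for i, nd in enumerate(needle):
        # Brute force: squared distance from this needle descriptor to every
        # haystack descriptor, sorted so the closest comes first.
        scored = sorted(
            (sum((p - q) ** 2 for p, q in zip(nd, hd)), j)
            for j, hd in enumerate(haystack)
        )
        if len(scored) < 2:
            continue  # the ratio test needs a runner-up
        (best, j), (second, _) = scored[0], scored[1]
        # Distances are squared, so compare against the squared ratio.
        if best < ratio ** 2 * second:
            matches.append((i, j))
    return matches


def bounding_box(points: list[tuple[float, float]]) -> tuple[float, float, float, float]:
    """Smallest axis-aligned box (min_x, min_y, max_x, max_y) around points."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)
```

Given haystack feature positions in a hypothetical list `haystack_xy`, `bounding_box([haystack_xy[j] for _, j in matches])` gives one box. Finding several instances of the needle, as the orange boxes do, would need clustering or an iterative fit, which this sketch skips.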

Posted by Michal Migurski on Sunday, February 22 2009 9:38pm UTC

@mike - printing the map in C/M makes a ton of sense. very clever. I've been really enjoying a whitelines notebook for the past few months. light gray pages with white lines = the grid disappears when you scan. http://www.whitelines.se/en/ ...and that SIFT sample image is a little frightening. I'd also like to note the irony of this approach: the QR code which is designed to be machine-readable is in fact only such when you use arbitrary imagery to help the machine find it. this gives me some hope that skynet is not on the horizon as soon as I would have thought!

Posted by bryan on Sunday, February 22 2009 9:52pm UTC

I should also mention I considered storing a UID in the QR code which would reference map information (bounds, layers, etc) in a database to locate the map image. How about having little stickers printed out with something easily SIFTable and sticking them to the map to indicate locations. Maybe different stickers have symbolic icons for different diseases, and health care workers of limited literacy could indicate where the diseases were on a village map. Faxing it back would enter the locations of the disease and join them to the table of huts/shanties/etc. If you can null out the original map tiles, then you open up the potential for OCR - it would be neat for a census to just write the number of occupants on top of the location, and then have it automagically get slurped into your GIS. I really like the idea of an easy way to get paper in and out of OSM, but the pessimist in me says that anything scribbled on a map is low enough in accuracy that it could be just visually digitized. However, the optimist in me likes the possibility of Turking it, and also thinks it needs to happen just to see what unpredictable things result when this opportunity is unleashed on other user groups.

Posted by Marc Pfister on Monday, February 23 2009 2:25am UTC

It occurred to me that if you're encoding a QR code that references a stored UID, then you could also store the SIFT fingerprint of that QR code, and then you'd have things to search for to find the location of the QR code (say "top left"). Hmmmm....

Posted by Marc Pfister on Monday, February 23 2009 5:47am UTC

@Bryan - I've not seen those notebooks, they look really useful. I did see a pad of paper the other day with widely-spaced, almost invisible yellow rules. It didn't seem like anything special or expensive, just unusual. I'm pretty sure SkyNet is taking note of your lack of faith and will deal with you appropriately when the time comes. @Marc - I agree that it's low in accuracy. The reason I think it might be worthwhile is because of the kinds of local points that most need mapping now: I often make note of features or businesses when walking around, but I can only hold a few reliably in my head. I like the idea that paper provides an intermediate transport mechanism for things too numerous to remember, and invisible in aerial photographs. I'm turning your recursive QR code idea over and over. =) I suppose SIFT might happily detect the invariant bits of a code, such as position and alignment marks: http://en.wikipedia.org/wiki/QR_Code#Overview - I'd hate to part with the gargoyles so quickly!

Posted by Michal Migurski on Monday, February 23 2009 7:17am UTC

I meant store the SIFT descriptors in a database, not in the QR itself. But the idea is intriguing, though too freaky for this hour of the morning.
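Here is a sketch of the database-lookup variant. It assumes the QR code carries only a short random ID, while everything else (the bounds, and optionally the SIFT descriptors of the printed markers) lives server-side. `PrintedMap` and `MapRegistry` are hypothetical, and a dict stands in for a real database.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class PrintedMap:
    """Hypothetical server-side record for one printout."""
    bbox: tuple[float, float, float, float]  # north, west, south, east
    descriptors: list[list[float]] = field(default_factory=list)


class MapRegistry:
    """In-memory stand-in for a database keyed by the ID printed in the QR."""

    def __init__(self) -> None:
        self._maps: dict[str, PrintedMap] = {}

    def register(self, printed: PrintedMap) -> str:
        uid = secrets.token_hex(4)  # 8 hex characters, short enough for a QR
        while uid in self._maps:    # regenerate on the rare collision
            uid = secrets.token_hex(4)
        self._maps[uid] = printed
        return uid

    def lookup(self, uid: str) -> PrintedMap:
        return self._maps[uid]
```

Keeping the payload to an ID means the QR stays small and easy to read from a fuzzy fax. The trade-off is that a scan can't be located without access to the registry.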

Posted by Marc Pfister on Monday, February 23 2009 3:32pm UTC

Now if you could use the alignment marks in the QR code to scale, align, and locate the whole image!

Posted by Marc Pfister on Monday, February 23 2009 8:26pm UTC

Does the QR say "uri=example stuff goes here"? I took the quarter-quarter crop of your sample image and ran it through the online ZXing decoder and that's what it returned. So one could get the 1/16 of the image in each corner, check it for a QR code, and if found get the position information for the three position points. You could store the lat/lon position of one of the QR position points in the QR, and then use the other two to do the transform. I wonder what kind of tolerance you get in the QR code position? Unfortunately, none of the Python QR libraries seem to give position. Though if your maps are based on a cached tileset, why not just run something like what Astrometry.net uses on the tiles? Then you don't need QR codes or gargoyles.
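Suppose two points on the scan can be matched to known positions on the original print, for example two of the QR code's position markers. Those two pairs are enough to recover a similarity transform: uniform scale, rotation, and translation, but no shear. Treating points as complex numbers makes the fit two lines. This sketch maps scan pixels to original-print pixels, so both frames have y pointing down and no reflection is involved. Going straight to latitude/longitude would also need a y-flip and a map projection, which are left out here.

```python
import cmath
import math

Point = tuple[float, float]


def similarity_from_two(src: list[Point], dst: list[Point]):
    """Fit w = a*z + b from two point pairs, with points as complex numbers.

    Returns the transform plus its scale factor and rotation in degrees.
    """
    z1, z2 = complex(*src[0]), complex(*src[1])
    w1, w2 = complex(*dst[0]), complex(*dst[1])
    if z1 == z2:
        raise ValueError("source points must be distinct")
    a = (w2 - w1) / (z2 - z1)  # rotation and uniform scale in one number
    b = w1 - a * z1            # translation

    def transform(p: Point) -> Point:
        w = a * complex(*p) + b
        return (w.real, w.imag)

    return transform, abs(a), math.degrees(cmath.phase(a))


# Made-up example: markers at (5, 5) and (5, 25) on the scan belong at
# (0, 0) and (10, 0) on the original, so the scan is rotated and enlarged 2x.
to_print, scale, angle = similarity_from_two([(5, 5), (5, 25)], [(0, 0), (10, 0)])
```

With only two points, any shear or perspective distortion from a crooked scan goes uncorrected. That is one argument for using all three position points.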

Posted by Marc Pfister on Tuesday, February 24 2009 6:30am UTC

I've also found that the QR code libraries I'm using don't give position, which is a huge drag. It *is* possible to feed an entire scan and have it extract the code, which is fine, but it doesn't seem to help much with the overall context, especially if the image is upside down or just crooked. I think the main advantage Astrometry has over OSM is that, generally, the stars don't move, while roads in OSM do get updated and shifted around. Eric and Shawn reminded me this morning of a similar project that did geolocation based on sidewalk gum positions. I think roads themselves may actually be quite problematic: they move in the OSM database, and there are a lot of places in the world where the road network is identical, e.g. US cities with regular street grids. One interesting thing about the QR codes is that they might provide more context for the map: date printed, for example, or something like a user ID.

Posted by Michal Migurski on Tuesday, February 24 2009 7:12am UTC

So I got it to work with just a QR code for alignment: http://marc.pfister.googlepages.com/georeferencingwithqrcodes

Posted by on Friday, March 6 2009 8:22pm UTC

Marc, that's awesome - can you explain a little more about how you were able to find the registration points with the QR reader? That's the missing piece for me, so far I've only been able to access the content of the code, not its location. FWIW, current versions of the thing above have ditched gargoyles in favor of explanatory text that doubles as a SIFT target. =)

Posted by Michal Migurski on Friday, March 6 2009 8:56pm UTC

You have to use Zxing - just use the Result.getResultPoints() function. It returns an array of the points. I modified the CommandLineRunner example to get the points returned as text. I'll post a link to it on my page once I retrieve it from my laptop. Now the challenge is to figure out the least hoopty way to get Python and Java to work together.
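On the Python side, the glue could be as simple as parsing the points out of the modified runner's text output. The text itself could come from running the Java program with Python's `subprocess.run(..., capture_output=True, text=True)`. This sketch assumes each point is printed in the `(x,y)` form of ZXing's `ResultPoint` string representation. The exact output of Marc's modified CommandLineRunner isn't shown here, so the regular expression is a guess to adapt.

```python
import re

# Matches "(x,y)" with optional spaces, optional minus sign and decimal part.
_POINT = re.compile(r"\(\s*(-?\d+(?:\.\d+)?)\s*,\s*(-?\d+(?:\.\d+)?)\s*\)")


def parse_result_points(text: str) -> list[tuple[float, float]]:
    """Pull every "(x,y)" pair out of the runner's output, in order."""
    return [(float(x), float(y)) for x, y in _POINT.findall(text)]
```

For a QR code, the points returned are typically the three finder-pattern centers. That is enough to recover the scan's position and rotation.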

Posted by Marc Pfister on Saturday, March 7 2009 12:12am UTC

The power of HTTP, for sure: http://www.aaronland.info/java/ws-decode/

Posted by Michal Migurski on Saturday, March 7 2009 1:12am UTC

Yeah, I saw the decoder server when I was browsing the Zxing source and figured I could always tweak it to kick back points. I was hoping for something more, uh, direct, but I guess since it's built and done might as well take it and run with it. At least I don't have to port it all to Java. And weirdly enough, I remember reading Aaron's article, but had forgotten it until today.

Posted by Marc Pfister on Saturday, March 7 2009 2:05am UTC

Eagerly awaiting a working solution for this :-) When running mapping parties we want lots of people to take part but while those people often have an interest in walking about and gathering data they aren't always interested or technically competent enough to quickly add their data to the map database. That's a waste. We can't make people edit but we can help others to use the data that's collected. Each time I've tried to edit someone else's collected information I have found it difficult, mainly because they were not in printed map form. Having a tool that works with a printed map makes it a whole lot simpler and more likely to happen.

Posted by Andy Robinson on Tuesday, March 10 2009 3:36pm UTC

Wow, yeah, this looks REALLY REALLY neat especially for doing things like capturing street names, or business names, or addressing. REALLY neat, especially if you could put the image into a WMS server.

Posted by Russ Nelson on Wednesday, March 11 2009 7:31pm UTC

Here's a basic proof-of-concept for the front end. Needs more work but the pieces are falling together: http://drwelby.net/qr-osm.html

Posted by Marc Pfister on Saturday, March 14 2009 1:48am UTC

Marc, I love the way the QR code self-updates as you pan around.

Posted by Michal Migurski on Saturday, March 14 2009 4:48pm UTC
