tecznotes

Michal Migurski's notebook, listening post, and soapbox. Subscribe to this blog.

Check out the rest of my site as well.

Jun 9, 2017 10:31pm

blog all dog-eared pages: human transit

This week, I started at a new company. I’ve joined Remix to work on early-stage product design and development. Remix produces a planning platform for public transit, and one requirement of the extensive onboarding process is to have read Jarrett Walker’s Human Transit cover-to-cover. Walker is a longtime urban planner and transit advocate whose book establishes a foundation for making decisions about transit system design. In particular, Walker advocates for time and network considerations over simple spatial ones.

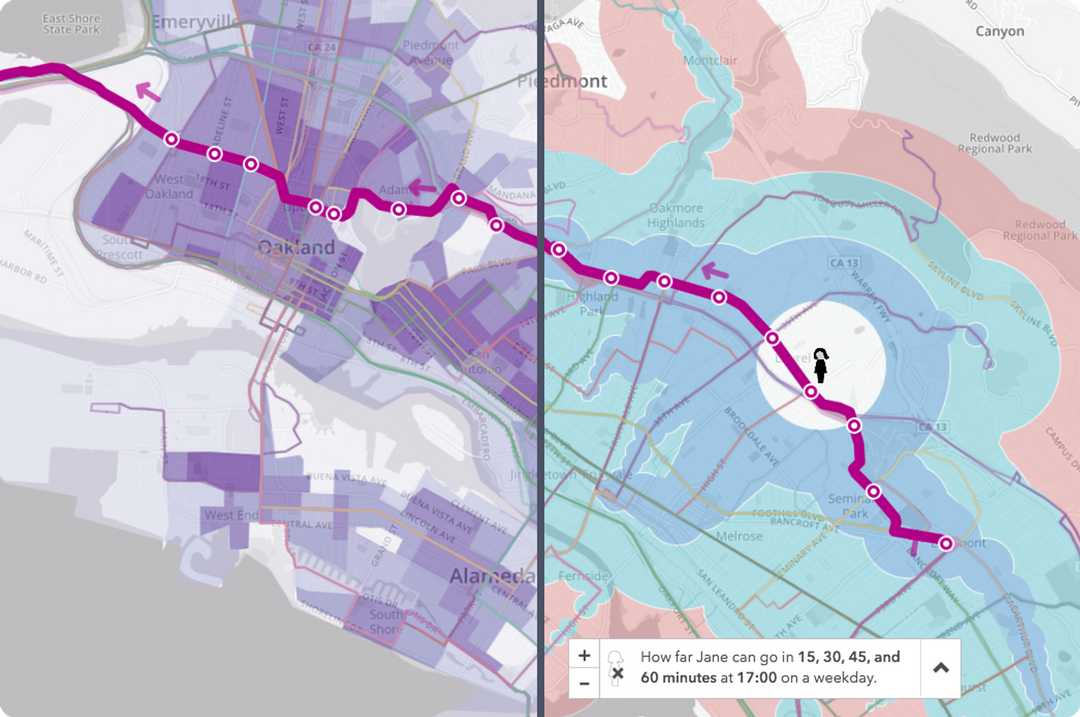

Many common public ideas about transit system design are misapplied from road network design, when the two are actually quite different. For example, the frequency of transit vehicles (headway) has a much greater effect than their speed on the usability of a system. Uninformed trade-offs between connected systems and point-to-point systems can lead to the creation of unhelpful networks with long headways. Interactions between transit networks and the layout of streets they’re embedded in can undermine the effectiveness of transit even when it exists. All of this suggests that new visual mapmaking tools would be a critical component of better transit design that meets user needs, like the “Jane” feature of the current Remix platform showing travel times through a network taking headways into account. Here’s how far a user of public transit in Oakland can move from the Laurel Heights neighborhood on a weekday:

There’s an enormous opportunity here to apply statistical, urban, and open data to the problems of movement and city design.
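The headway point above can be made concrete with a little arithmetic. This is a back-of-the-envelope sketch, assuming riders show up at random and therefore wait half the headway on average; the distance, speeds, and headways are made-up numbers for illustration, not figures from the book or from Remix.

```python
def expected_trip_minutes(distance_km, speed_kmh, headway_min):
    """Average trip time: wait half the headway, then ride.

    Assumes evenly spaced vehicles and riders arriving at random,
    which is a simplification.
    """
    wait = headway_min / 2
    ride = distance_km * 60 / speed_kmh
    return wait + ride


# Hypothetical 5 km trip on two hypothetical services.
slow_but_frequent = expected_trip_minutes(5, speed_kmh=15, headway_min=10)
fast_but_infrequent = expected_trip_minutes(5, speed_kmh=30, headway_min=60)

print(slow_but_frequent, fast_but_infrequent)
```

Under these assumptions the service that moves at half the speed still gets you there sooner on average, because the wait dominates the ride.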

These are a few of the passages from Human Transit that piqued my interest.

What even is public transit, page 13:

There are several ways to define public transit, so it is important to clarify how I’ll be using the term. Public transit consists of regularly scheduled vehicle trips, open to all paying passengers, with the capacity to carry multiple passengers whose trips may have different origins, destinations, and purposes.

On the seven demands for a transit service, page 24:

In the hundreds of hours I’ve spent listening to people talk about their transit needs, I’ve heard seven broad expectations that potential riders have of a transit service that they would consider riding: 1) It takes me where I want to go, 2) It takes me when I want to go, 3) It is a good use of my time, 4) It is a good use of my money, 5) It respects me in the level of safety, comfort, and amenity it provides, 6) I can trust it, and 7) It gives me freedom to change my plans. … These seven demands, then, are dimensions of the mobility that transit provides. They don’t yet tell us how good we need the service to be, but they will help us identify the kinds of goodness we need to care about. In short, we can use these as a starting point for defining useful service.

On the relationship between network design and freedom, pages 31-32:

Freedom is also the biggest payoff of legibility. Only if you can remember the layout of your transit system and how to navigate it can you use transit to move spontaneously around your city. Legibility has two parts: 1) simplicity in the design of the network, so that it’s easy to explain and remember, and 2) the clarity of the presentation in all the various media.

No amount of brilliant presentation can compensate for an overly-complicated network. Anyone who has looked at a confusing tangle of routes on a system map and decided to take their car can attest to how complexity can undermine ridership. Good network planning tries to create the simplest possible network. Where complexity is unavoidable, other legibility tools help customers to see through the complexity and to find patterns of useful service that may be hidden there. For example, chapter 7 explores the idea of Frequent Network maps, which enable you to see just the lines where service is coming soon, all day. These, it turns out, are not just a navigation tool but also a land use planning tool.

On the distance between stops, pages 62-63:

Street network determines walking distance. Walking distance determines, in part, how far apart the stops can be. Stop spacing determines operating speed. So yes, the nature of the local street network affects how fast the transit line can run!

How do we decide about spacing? Consider the diamond-shaped catchment that’s made possible by a fine street grid. Ideal stop spacing is as far apart as possible for the sake of speed, but people around the line have to be able to get to it. In particular, we’re watching two areas of impact.

First, the duplicate coverage area is the area that has more than one stop within walking distance. In most situations, on flat terrain, you need to be able to walk to one stop, but not two, so duplicate coverage is a waste. Moving stops farther apart reduces the duplicate coverage area, which means that a greater number of unique people and areas are served by the stops.

Second, the coverage gap is the area that is within walking distance of the line but not of a stop. As we move stops farther apart, the coverage gap grows.

We would like to minimize both of these things, but in fact we have to choose between them. … Which is worse: creating duplicate coverage area or leaving a coverage gap? It depends on whether your transit system is designed mainly to meet the needs of transit-dependent persons or to compete for high ridership.

On Caltrain and misleading map lines, pages 79-80:

Sometimes, commuter rail is established in a corridor where the market could support efficient two-way, all-day frequent rapid transit. Once that happens, the commuter rail service can be an obstacle to any further improvement. The commuter rail creates a line on the map, so many decision makers assume that the needs are met, and may not understand that the line’s poor frequency outside the peak prevents it from functioning as rapid transit. At the same time, efforts to convert commuter rail operations to all-day high-frequency service (which requires enough automation to reduce the number of employees per train to one, if not zero) founder against institutional resistance, especially within labor unions. (Such a change wouldn’t necessarily eliminate jobs overall, but it would turn all the jobs into train-driver jobs, running more trains.)

This problem has existed for decades, for example, around the Caltrain commuter rail line between San Francisco and San Jose. This corridor has the perfect geography for all-day frequent rapid transit: super-dense San Francisco at one end, San Jose at the other, and a rail that goes right through the downtowns of almost all the suburban cities in between. In fact, the downtowns are where they are because they grew around the rail line, so the fit between the transit and urban form could not be more perfect.

…

Caltrain achieves unusually high farebox return (percentage of operating cost paid by fares) because it runs mostly when it’s busy, but its presence is also a source of confusion: the line on the map gives the appearance that this corridor has rapid transit service, but in fact Caltrain is of limited use outside the commute hour.

On cartographic emphasis and what to highlight, pages 88-89:

If a street map for a city showed every road with the same kind of line, so that a freeway looked just like a gravel road, we’d say it was a bad map. If we can’t identify the major streets and freeways, we can’t see the basic structure of the city, and without that, we can’t really make use of the map’s information. What road should a motorist use when traveling a long distance across the city? Such a map wouldn’t tell you, and without that, you couldn’t really begin.

So, a transit map that makes all lines look equal is like a road map that doesn’t show the difference between a freeway and a gravel road.

…

Emphasizing speed over frequency can make sense in contexts where everyone is expected to plan around the timetable, including peak-only commute services and very long trips with low demand. In all other contexts, though, it seems to be a common motorist’s error. Roads are there all the time, so their speed is the most important fact that distinguishes them. But transit is only there if it’s coming soon. If you have a car, you can use a road whenever you want and experience its speed. But transit has to exist when you need it (span) and it needs to be coming soon (frequency). Otherwise, waiting time will wipe out any time savings from faster service. Unless you’re comfortable planning your life around a particular scheduled trip, speed is worthless without frequency, so a transit map that screams about speed and whispers about frequency will be sowing confusion.

On the effects of delay in time, page 98:

In most urban transit, what matters is not speed but delay. Most transit technologies can go as fast as it’s safe to go in an urban setting—either on roads or on rails. What matters is mostly what can get in their way, how often they will stop, and for how long. So when we work to speed up transit, we focus on removing delays.

Delay is also the main source of problems of reliability. Reliability and average speed are different concepts, but both are undermined by the same kinds of delay, and when we reduce delay, service usually runs both faster and more reliably.

Longer-distance travel between cities is different, so analogies from those services can mislead. Airplanes, oceangoing ships, and intercity trains all spend long stretches of time at their maximum possible speed, with nothing to stop for and nothing to get in their way. Urban transit is different because a) it stops much more frequently, so top speed matters less than the stops, and b) it tends to be in situations that restrict its speed, including various kinds of congestion. Even in a rail transit system with an unobstructed path, the volume of trains going through imposes some limits, because you have to maintain a safe spacing between them even as they stop and start at stations.

On fairness, usage, and politics, page 105:

On any great urban street, every part of the current use has its fierce defenders. Local merchants will do anything to keep the on-street parking in front of their businesses. Motorists will worry (not always correctly) that losing a lane of traffic means more congestion. Removing landscaping can be controversial, especially if mature trees are involved.

To win space for transit lanes in this environment, we usually have to talk about fairness. … What if we turned a northbound traffic lane on Van Ness into a transit lane? We’d be taking 14 percent of the lane capacity of these streets to serve about 14 percent of the people who already travel in those lanes, namely, the people already using transit.

On locating transit centers at network connection points, pages 176-177:

If you want to serve a complex and diverse city with many destinations and you value frequency and simplicity, the geometry of public transit will force you to require connections. That means that for any trip from point A to point B, the quality of the experience depends on the design of not just A and B but also of a third location, point C, where the required connection occurs.

…

If you want to enjoy the riches of your city without owning a car, and you explore your mobility options through a tool like the Walkscore.com or Mapnificent.net travel time map, you’ll discover that you’ll have the best mobility if you locate at a connection point. If a business wants its employees to get to work on transit, or if a business wants to serve transit-riding customers, the best place to locate is a connection point where many services converge. All these individual decisions generate demand for especially dense development—some kind of downtown or town center—around connection points.

…

In the midst of these debates, it’s common to hear someone ask: “Can’t we divide this big transit center into two smaller ones? Can’t we have the trains connect here and have the buses connect somewhere else, at a different station?” The answer is almost always no. At a connection point that is designed to serve a many-to-many city, people must be able to connect between any service and any other. That only happens if the services come to the same place.

On the importance of system geometry, page 181:

We’ve seen that the ease of walking to transit stops is a fact about the community and where you are in it, not a fact about the transit system. We’ve noticed that grids are an especially efficient shape for a transit network, so that’s obviously an advantage for gridded cities, like Los Angeles and Chicago, that fit that form easily. We’ve also noticed that chokepoints—like mountain passes and water barriers of many cities—offer transit a potential advantage. We’ve seen how density, both residential and commercial, is a powerful driver of transit outcomes, but that the design of the local street network matters too. High-quality and cost-effective transit implies certain geometric patterns. To the extent that those patterns work with the design of your community, you can have transit that’s both high-quality and cost-effective. To the extent that they don’t, you can’t.

On looking ahead by twenty years, page 216:

Overall, in our increasingly mobile culture, it’s hard to care about your city twenty years into the future, unless you’re one of a small minority who have made long-term investments there or you have a stable family presence that you believe will continue for generations.

But the big payoffs rest in strategic thinking, and that means looking forward over a span of time. I suggest twenty years as a time frame because almost everybody will relocate in that time, and most of the development now contemplated in your city will be complete. That means virtually every resident and business will have a chance to reconsider its location in light of the transit system planned for the future. It also means that it’s easier to get citizens thinking about what they want the city to be like, rather than just fearing change that might happen to the street where they live now. I’ve found that once this process gets going, people enjoy talking and thinking about their city twenty years ahead, even if they aren’t sure they’ll live there then.

Jun 2, 2017 5:39am

the levity of serverlessness

As tech marketing jargon goes, “serverless” is a terrible word. There’s always a server, and the cloud is just other people’s computers; it’s only a question of who runs them. I like Kate Pearce’s take:

The next time you try and use the word “serverless” just remember it’s like calling takeout “kitchenless”.

Still, Amazon’s supposedly-serverless Lambda offering has some attractive qualities so I’ve used it on a selection of projects during the past few months. I’ve learned a bit about making Lambda work in a Python development flow. Having already put my head through this wall, maybe this post will help you find it easier?

The key differences between running code on Lambda and running it on a server you manage emerge in costs, downtime, heavy usage, and development constraints. With Lambda, you pay for the resources you consume, measured in milliseconds. With a virtual server, you pay for uptime measured in hours. Lambda is free when it’s not in active use, unlike a virtual server that costs money when sitting idle waiting for requests. Lambda can accept large concurrent request volumes. Virtual servers may instead need to be spun up over a period of minutes to deal with increased demand. In some ways Lambda is closer to Google’s old App Engine service than Amazon’s EC2, similar to Heroku’s platform service, and definitely closer to my own assumptions about EC2 at the time it first launched a decade ago (I didn’t realize EC2 was regular Linux boxes in the sky). The heavy cost for these scaling properties comes in several constraints: a Lambda function can only run for a few minutes and consume a small amount of memory, and must be written in one of a limited number of languages.

I’ve used it in three projects of increasing complexity: a script for reposting images from my Tumblr account to my Mastodon account, a simple form-based data collector, and a new service for scoring legislative district plans (more on that in a future post).

Some things worked as-advertised.

Stuff That Just Worked

- Python 3.6

- Execution limits

- Different invocation types

- Integrations with other AWS services

- AWS CLI, the command line client

For a while, only Python 2.7 was supported by AWS Lambda. This made it kind of a toy — anything serious I do in Python now, I do in version 3 to get the advantage of good unicode support. Sometime last month, Python 3.6 support was added to Lambda making it immediately compatible with my own development preferences. The Python 3.6 support is real, and comes with the full standard library you’d expect anywhere else. It’s possible to write serious code and deploy it now.

The documented limits seem to work as promised: functions can reliably run for up to five minutes, and the provided Context object will tell you how much time you have remaining in milliseconds. When you go over time (or over memory, though I have not experienced this) the function is halted without notice. Execution logs go to Cloudwatch, where the amount of billed time is recorded.
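Here is a minimal sketch of using the Context object to stop work before the time limit hits, since the function is otherwise halted without notice. The `get_remaining_time_in_millis()` method is part of Lambda's Python context object; the `items` event key, the `process` function, and the ten-second margin are assumptions made up for this example.

```python
SAFETY_MARGIN_MS = 10_000  # assumed margin; tune for your own workload


def process(item):
    """Hypothetical unit of work; replace with something real."""
    return item * 2


def handler(event, context):
    """Work through a list until time runs low, then report what is left."""
    items = list(event.get("items", []))
    done = []

    # Check the clock before each item so we can return cleanly
    # instead of being cut off mid-task.
    while items and context.get_remaining_time_in_millis() > SAFETY_MARGIN_MS:
        done.append(process(items.pop(0)))

    return {"done": done, "remaining": items}
```

Whatever comes back under `remaining` could be handed to a fresh invocation, though that part is left out here.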

There are two invocation types, “Event” and “RequestResponse”. The first is used in situations where you want to trigger a function and you don’t care about its return value, such as scheduled tasks. The second is used when you need the response immediately, and is especially useful together with API Gateway for writing functions that can be called by users via an HTTP request. Event invocation is pretty useful: you can’t run a Lambda function directly from a queue, but you can invoke the function as an Event and let it run for an indeterminate period of time while responding immediately to a user request. It’s a useful way to get queue-like behaviors cheaply.
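The queue-like trick can be sketched with the Boto SDK (boto3). This assumes a client created with `boto3.client("lambda")` and uses its `invoke` call with `InvocationType="Event"`; the function name and payload shown in the comments are hypothetical.

```python
import json


def invoke_async(client, function_name, payload):
    """Fire-and-forget: returns as soon as Lambda has accepted the event.

    `client` is expected to be a boto3 Lambda client, or anything else
    with a compatible invoke() method.
    """
    response = client.invoke(
        FunctionName=function_name,
        InvocationType="Event",  # use "RequestResponse" to wait for a result
        Payload=json.dumps(payload).encode("utf-8"),
    )
    # Event invocations answer with HTTP 202 when the request was accepted.
    return response["StatusCode"] == 202


# Usage, assuming AWS credentials and a deployed function with this
# hypothetical name:
#
#   import boto3
#   invoke_async(boto3.client("lambda"), "long-running-task", {"id": 1})
```

A user-facing handler can call this and return right away while the second function keeps running.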

Generally, interactions with other AWS services work well. I’m new to Cloudwatch Logs, but it’s the only output mechanism available for debugging a Lambda function running on the platform. When a function is retried and fails twice, a warning message can be sent via SNS or pushed to a queue. Lambda functions are not ordinarily accessible from the public web, but the API Gateway service makes it possible to map a URL to a function so it can be used on a website. All basic stuff, but it works together effectively. I’ve found it useful to keep numerous browser tabs open with the AWS Console because it can be confusing to track each of these services.

Finally, the AWS CLI is a great command-line client for all AWS services. Terminology between the developer console, the CLI, and the underlying Boto SDK is consistent, and actions available in a browser are equally available via the CLI client. This makes it possible to script certain deployment tasks as part of an automated process, and experiment with AWS actions before writing code.

Some other things about Lambda remain a pain.

Stuff That Sucks

- Editing code

- Configuring API Gateway

- Development environments

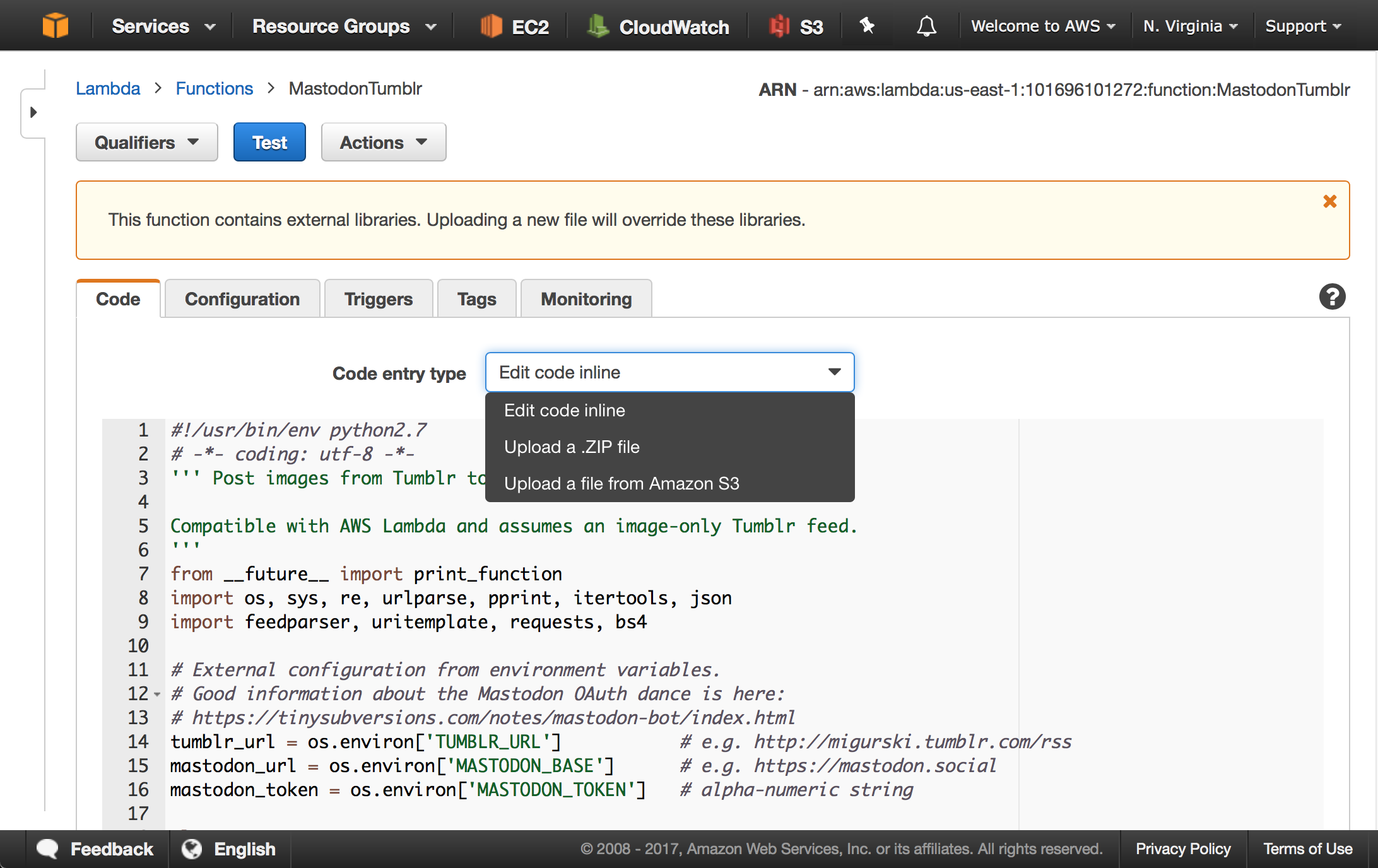

Editing non-trivial code is a bummer. The basic interface to Lambda is an editable text box where you can type (or paste) code directly. In my browser, Safari on OS X, certain operations like copy/paste frequently fail in the text box. A Zip archive upload is provided as an alternative, and the AWS CLI can let you do this programmatically. On slow internet this introduces a prohibitive time delay uploading large function packages. Heroku’s Git model and integration with services like Github feel much more mature and conducive to a smooth development and deployment flow.

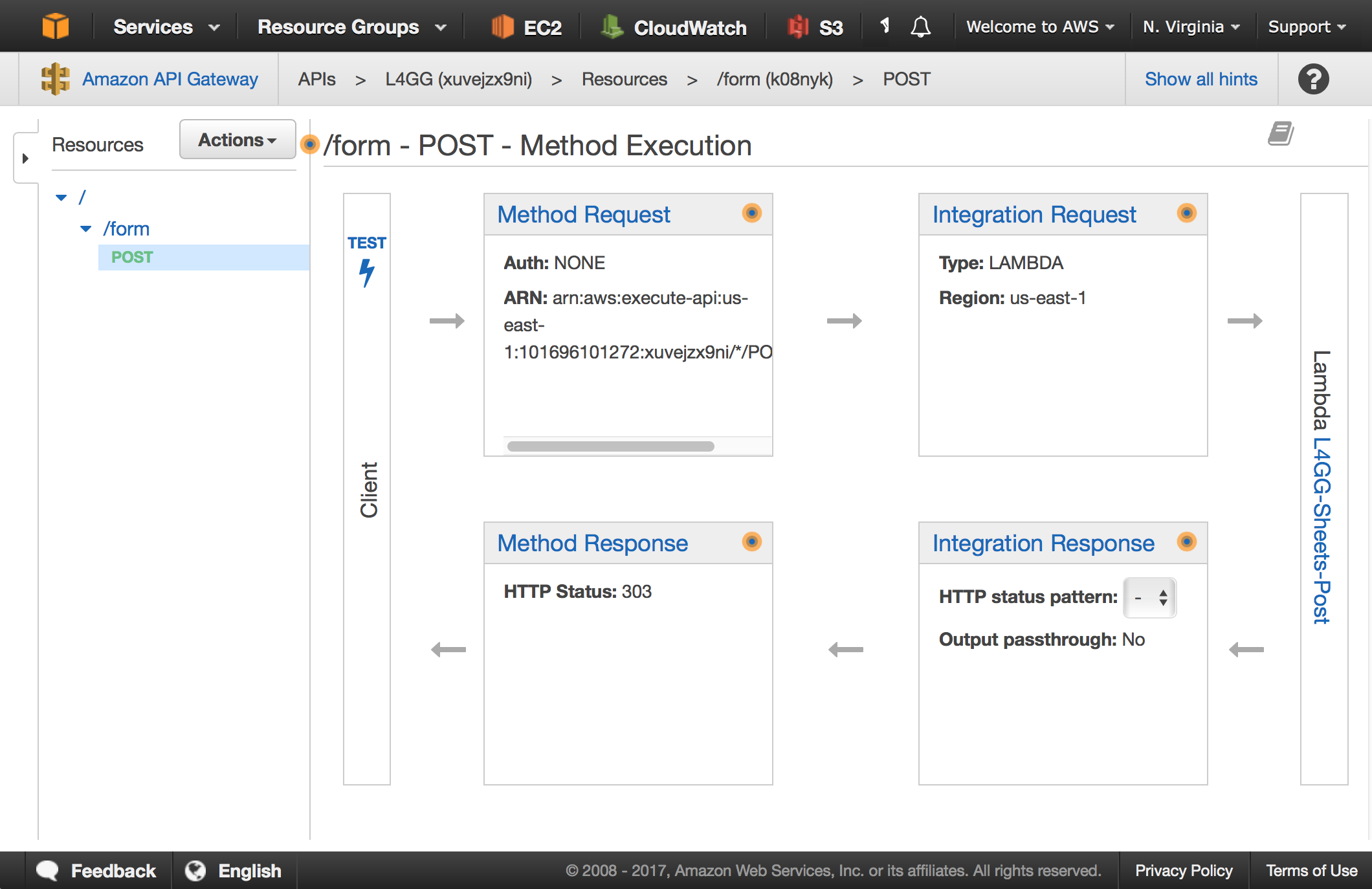

Working with the API Gateway service is unfortunate, with four interlocking pieces of configuration: Method Request, Integration Request, Integration Response, and Method Response. Configured settings in each are interdependent, such as the status code and header behaviors in the two response configurations. Getting “normal” HTTP things like form submissions to work involves some pretty weird Stack Overflow driven development, and generally feels hacky. I have mostly found configuring API Gateway to be a trial-and-error process. I’ve heard that Swagger helps with this in some way, but it also appears to overengineer a lot of unrelated things so I’ve ignored it.

Finally, it’s difficult to spin up a quick dev environment with all these services. I’ve ended up continuously deploying to production as I work, not dissimilar from old-style FTP-based development. Heroku has always done this very well with entire stacks magicked out of Github pull requests, and the recently-departed Skyliner.io platform did a great job with AWS configurations specifically. AWS API Gateway does have the concept of deployment stages, but it’s only one piece of the overall picture. AWS Cloudformation is supposed to help with this, but it’s big and impenetrable and I haven’t yet invested the time to understand if it’s an answer or more questions.

Fortunately, a bunch of other things I thought would be difficult turned out to work really well in Lambda after a bit of effort to learn more about the model.

Stuff I Learned

- Packaging for deployment

- Deploying from a CI service

- Including compiled binaries

- Uploading one well-tested package for multiple functions

- Using Proxy Integration to make requests sane

As soon as I wanted to use Amazon’s Python SDK Boto to talk to other Amazon services from Lambda, I realized I was going to need to build a package larger than a single file. Lambda’s deployment advice shows how to use Pip to build a zip archive for upload to Amazon, so I’ve been adding that to project build scripts. Pip’s target directory option creates the right structure for Lambda’s use, which means that my usual requirements declarations now just work with Lambda.
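A build script along these lines might look like the sketch below. It is not my actual script: the file and directory names are placeholders, and it assumes Pip is available to the running Python. `pip install --target` puts dependencies in a flat directory, and the standard `zipfile` module packs that directory so everything sits at the archive root where Lambda looks for it.

```python
import os
import subprocess
import sys
import zipfile


def install_requirements(requirements_path, target_dir):
    """Install dependencies into target_dir using Pip's target option."""
    subprocess.check_call([
        sys.executable, "-m", "pip", "install",
        "-r", requirements_path, "--target", target_dir,
    ])


def zip_directory(source_dir, zip_path):
    """Zip source_dir so its contents sit at the root of the archive.

    zip_path should live outside source_dir, or the archive would
    try to include itself.
    """
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for root, _dirs, files in os.walk(source_dir):
            for name in files:
                full_path = os.path.join(root, name)
                archive.write(full_path, os.path.relpath(full_path, source_dir))
    return zip_path


# Usage with placeholder paths:
#
#   install_requirements("requirements.txt", "build")
#   zip_directory("build", "package.zip")
```

Your own code needs to be copied into the same directory before zipping.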

Once Boto was added to the package, the size immediately ballooned to several megabytes. Boto is pretty big, and doesn’t include an option for building a minimal version. So, I was creating code builds much too large to effectively upload from my home DSL or my mobile tether. I wanted to be able to deploy via incremental Git pushes as Heroku allows, and fortunately Circle CI’s deployment feature made this possible. After adding master branch deployment to my testing configurations, I no longer needed to wait for lengthy network transfers on my local connection.

The next addition that ballooned my package sizes was GDAL, a compiled binary library for working with geographic data. Fortunately, Seth Fitzsimmons and Matthew Perry have each worked on this before and provided details on making GDAL work with Lambda. Seth in particular has become something of an expert in getting hard-to-compile binary software like GDAL and Mapnik working on platforms like Lambda and Heroku. I’m enormously lucky to be able to benefit from his work. I used Seth’s Docker-based build hints to include GDAL in the Lambda packages. With GDAL’s addition and a few other dependencies, the overall package size had increased to 25MB so I was grateful for Circle CI’s role in the process.

The actual differences between different function packages were quite minuscule by this point, so I’ve been uploading a single package to Lambda for multiple functions and using a minimal entry point script to provide handler functions. This organization has helped in a few ways. When Lambda invokes a handler from within a module, it doesn’t allow for relative imports that are useful for building a real package. I looked for ways around this and found a 2007 note from Guido van Rossum arguing that “running scripts that happen to be living inside a module’s directory” is “an antipattern”. Moving those scripts out to a short file outside the module is closer to the spirit of Guido’s intended usage. Also, it makes comprehensive testing of a module easier to complete.
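The shape of that entry point script is roughly the sketch below. Every name in it is hypothetical. In a real project the two work functions would be imported from the package sitting next to this file in the zip archive; stand-ins are defined inline here only so the example runs on its own.

```python
# entry.py: a hypothetical file at the root of the zip archive.
#
# In a real project, the work would live in a package and be imported:
#   from mypackage import collect, score   (hypothetical names)
# Inside that package, relative imports work normally because this
# file, not a module inside the package, is what Lambda invokes.


def collect(event):
    """Stand-in for a package function that stores submitted fields."""
    return {"stored": event.get("fields", {})}


def score(event):
    """Stand-in for a package function that scores a plan."""
    return {"score": len(event.get("districts", []))}


def collect_handler(event, context):
    """Handler for one Lambda function, configured as entry.collect_handler."""
    return collect(event)


def score_handler(event, context):
    """Handler for a second function, configured as entry.score_handler."""
    return score(event)
```

Each Lambda function gets the same uploaded archive and differs only in its handler setting.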

After messing around with API Gateway’s various input and output options, I’ve concluded that Lambda Proxy Integration is the only way to go. Lambda handlers will never see CGI-style HTTP input as Heroku applications do, but API Gateway sends the next best thing with a dictionary of HTTP input details. These are under-documented so it’s taken some trial-and-error to get input working. I’ve considered writing a small Lambda/Flask bridge using these objects in order to write an application that can be fully run locally, and hopefully the example provided in Amazon’s docs is sufficiently comprehensive.
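For reference, a Proxy Integration handler looks something like this sketch. The event keys (`httpMethod`, `queryStringParameters`, `body`) and the response keys (`statusCode`, `headers`, `body`) follow API Gateway's proxy format as I understand it; the `name` form field and the JSON reply are made up for illustration.

```python
import json
from urllib.parse import parse_qs


def handler(event, context):
    """Greet a caller by name from either a query string or a form post."""
    method = event.get("httpMethod")

    if method == "POST":
        # Form submissions arrive as one URL-encoded string in "body".
        form = parse_qs(event.get("body") or "")
        name = form.get("name", ["stranger"])[0]
    else:
        # queryStringParameters is None, not {}, when there is no query.
        query = event.get("queryStringParameters") or {}
        name = query.get("name", "stranger")

    # The response body has to be a string, so JSON is encoded by hand.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"hello": name, "method": method}),
    }
```

Because the handler only reads and returns plain dictionaries, it can be exercised locally by passing it a hand-built event.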

Conclusion

Working with Lambda still feels fairly uphill, and I’m hoping to improve on some of the challenges above. As I’ve been writing an application with no users, it’s been easy to update live Lambda functions. A next step would be to configure a second development environment with all the necessary interconnecting parts. I’m pretty pleased with the tradeoffs, assuming that Lambda’s scaling advantages work as-promised.